In machine learning, algorithms learn patterns from data and use them to make predictions. A less visible step plays a crucial role in training machine learning models: feature engineering. In this blog, we will delve into the importance of feature engineering and its role in enhancing model performance, and then build a recommendation system that suggests a configurable number of similar blog posts based on their content and interaction metrics.

What is Feature Engineering?

A feature is a measurable input variable used by a predictive model. Feature engineering is the process of selecting, transforming, and creating relevant input variables (features) from raw data to improve the performance of machine learning models. It bridges the raw data and the algorithm, shaping the data into a form that lends itself to effective learning. Its goals are to simplify and speed up data transformations and to improve model accuracy.

Why is feature engineering important?

The quality and relevance of features are crucial for empowering machine learning algorithms and obtaining valuable insights. Overall, feature engineering is used to:

- Improve model performance: As previously noted, features are key to a machine learning model's performance. If the model's output is the meal, the features are its ingredients.

- Lessen computational costs: When done right, feature engineering results in reduced computational requirements, like storage, and can improve the user experience by reducing latency.

- Improve model interpretability: Interpretability refers to a human's ability to understand and predict a model's outcomes. Well-chosen features help explain why a model is making certain predictions.

Component Processes of Feature Engineering

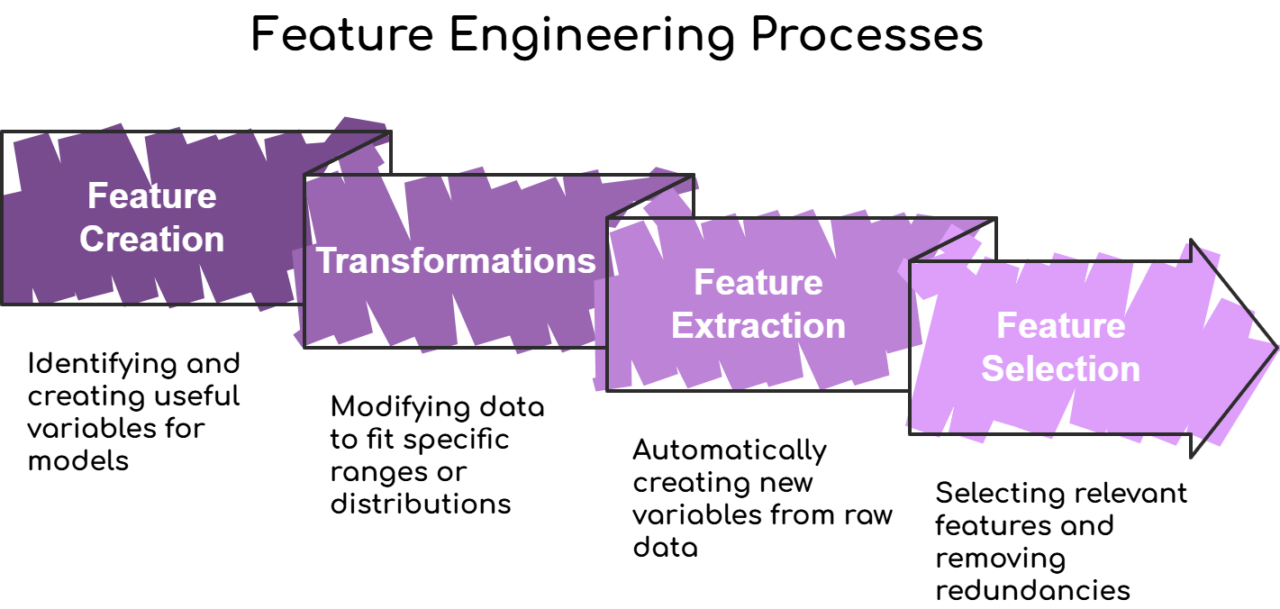

There are four processes essential to feature engineering: creation, transformation, extraction, and selection. Let's explore each in more detail.

Feature Creation

You can think of this process as an artist deciding which colors to use in a new painting. Engineers identify the variables most useful for the specific predictive model. They then combine existing features by adding, subtracting, multiplying, or dividing them (for example, taking ratios) to create new features that support better predictions.
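
As an illustration, the sketch below derives new features from the engagement columns used later in this post (claps, responses, reading_time). The values and the two ratio features (claps per minute of reading time, responses per clap) are hypothetical choices for illustration. They are not features used by the recommendation system.

```python
import pandas as pd

# Toy engagement data (hypothetical values) using the column names from medium_data.csv
posts = pd.DataFrame({
    "claps": [120, 45, 0],
    "responses": [6, 3, 0],
    "reading_time": [4, 9, 3],
})

# Ratio feature: claps earned per minute of reading time
posts["claps_per_minute"] = posts["claps"] / posts["reading_time"]

# Ratio feature: responses per clap; posts with zero claps get 0 instead of a division error
posts["responses_per_clap"] = (
    posts["responses"] / posts["claps"].where(posts["claps"] > 0)
).fillna(0)

print(posts)
```

Ratios like these can expose relationships (for example, engagement relative to length) that a model might otherwise have to learn from the raw columns on its own.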

Transformations

Transformations modify the data to fit a certain range or statistical distribution. This is especially important with small data sets and simple models where there may not be enough capacity to learn the relevant patterns in the data otherwise.
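
Two common transformations are a log transform for skewed counts and min-max scaling into the range [0, 1]. The sketch below applies both to hypothetical clap counts. The values and the choice of these two transformations are illustrative assumptions; the recommendation system later in this post uses z-score standardization instead.

```python
import numpy as np

# Hypothetical clap counts: heavily right-skewed, as engagement metrics often are
claps = np.array([0.0, 10.0, 100.0, 1000.0, 10000.0])

# Log transform: log1p(x) = log(1 + x) compresses large values and handles zeros safely
log_claps = np.log1p(claps)

# Min-max scaling: map values linearly into the range [0, 1]
def min_max_scale(x):
    return (x - x.min()) / (x.max() - x.min())

scaled_claps = min_max_scale(log_claps)
print(scaled_claps.round(3))
```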

Feature Extraction

In this process, new variables are automatically created by extracting them from raw data. The aim is to reduce the volume of data you work with and produce features that are more manageable for the model.

Feature extraction methods include:

- Cluster analysis

- Text analytics

- Edge detection algorithms

- Principal component analysis (PCA)
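
As an example of one method from this list, the sketch below uses scikit-learn's PCA to extract two components from five correlated features. The synthetic data is a hypothetical assumption, built so that it is essentially two-dimensional.

```python
import numpy as np
from sklearn.decomposition import PCA

# Hypothetical data: 100 samples, 5 features; the last 3 are noisy
# linear combinations of the first 2, so the data is essentially 2-dimensional
rng = np.random.default_rng(0)
base = rng.normal(size=(100, 2))
mix = rng.normal(size=(2, 3))
X = np.hstack([base, base @ mix + 0.01 * rng.normal(size=(100, 3))])

# Extract 2 principal components: a compact, decorrelated representation of X
pca = PCA(n_components=2)
X_reduced = pca.fit_transform(X)

print(X_reduced.shape)                      # (100, 2)
print(pca.explained_variance_ratio_.sum())  # close to 1.0: little information lost
```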

Feature Selection

At this stage, engineers decide which features are relevant and which should be removed because they are irrelevant or redundant. This means identifying which features are not needed, which repeat the same information, and which are most crucial to the model's success.
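
Here is a minimal sketch of both ideas, assuming a hypothetical feature table. scikit-learn's VarianceThreshold drops a constant (irrelevant) column, and a simple pairwise-correlation check drops one of two redundant columns. The 0.95 correlation cutoff is an arbitrary illustrative choice.

```python
import pandas as pd
from sklearn.feature_selection import VarianceThreshold

# Hypothetical feature table
features = pd.DataFrame({
    "claps": [120, 45, 300, 10],
    "claps_x2": [240, 90, 600, 20],  # redundant: exactly 2 * claps
    "language_en": [1, 1, 1, 1],     # irrelevant: constant, carries no information
    "reading_time": [4, 9, 6, 3],
})

# 1) Remove constant (zero-variance) features
selector = VarianceThreshold(threshold=0.0)
selector.fit(features)
kept = features.loc[:, selector.get_support()]

# 2) From each pair of near-perfectly correlated features, drop the later one
corr = kept.corr().abs()
cols = list(corr.columns)
to_drop = set()
for i, a in enumerate(cols):
    for b in cols[i + 1:]:
        if a not in to_drop and corr.loc[a, b] > 0.95:
            to_drop.add(b)
selected = kept.drop(columns=sorted(to_drop))

print(list(selected.columns))  # ['claps', 'reading_time']
```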

Blog Recommendation System

This section builds a content-based blog recommendation system using a dataset of blog posts (medium_data.csv). The system recommends similar blog posts based on their textual content and on user engagement metrics such as claps, responses, and reading time.

Preprocessing Steps

Before building the recommendation model, the following preprocessing tasks are performed:

1. Handling Missing Values: Missing values in the text columns (title and subtitle) and the numerical columns (claps, responses, and reading_time) are filled with appropriate values. Missing text is replaced with ‘NA’, and missing numerical values are filled with 0.

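```python
import pandas as pd

data = pd.read_csv('medium_data.csv')  # load the blog dataset

# Fill missing text with 'NA' and missing engagement metrics with 0
data['title'] = data['title'].fillna('NA')
data['subtitle'] = data['subtitle'].fillna('NA')
data['claps'] = data['claps'].fillna(0)
data['responses'] = data['responses'].fillna(0)
data['reading_time'] = data['reading_time'].fillna(0)
```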

2. Remove Duplicates: Entries with the same title, url, and subtitle are treated as duplicates. Only the first occurrence is kept, so each blog post appears once in the dataset.

```python
# Keep only the first occurrence of each (title, url, subtitle) combination
data = data.drop_duplicates(subset=['title', 'url', 'subtitle'], keep='first')
```

3. Text Combination: A new column, content, is created by combining the title and subtitle fields to represent the blog's textual content.

```python
# Combine title and subtitle into a single text field
data['content'] = data['title'].fillna('NA') + ' ' + data['subtitle'].fillna('NA')
```

Feature Engineering

Several new features are extracted from the raw dataset to improve the performance of the recommendation model:

1. Text-Based Features:

- content: A new feature combining the title and subtitle fields to represent the blog’s overall content.

- TF-IDF Vectors: The textual content is converted into numerical vectors using TF-IDF (Term Frequency-Inverse Document Frequency) to capture the importance of words across blog posts.

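```python
from sklearn.feature_extraction.text import TfidfVectorizer
# Keep the 1,000 most frequent terms, ignoring English stop words
vector = TfidfVectorizer(stop_words='english', max_features=1000)
matrix = vector.fit_transform(data['content'])  # sparse matrix: one row per post
```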

2. Engagement Metrics:

- Normalized Features: The numerical engagement metrics (claps, responses, and reading_time) are standardized with z-score normalization (subtract the mean, then divide by the standard deviation) so that they are on the same scale for similarity calculations.

```python
# Z-score standardization: each metric ends up with mean 0 and standard deviation 1
ui_features = data[['claps', 'responses', 'reading_time']]
ui_normalized = (ui_features - ui_features.mean()) / ui_features.std()
```

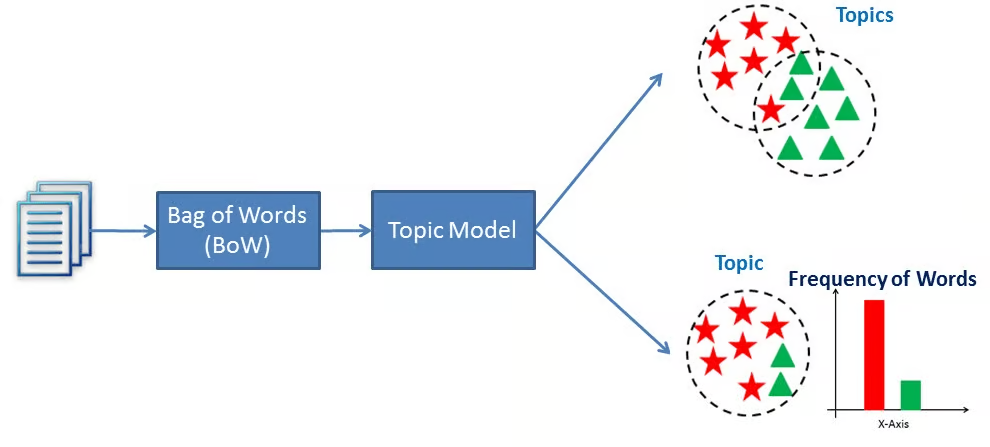

Topic Modeling

Topic modeling is used to discover the hidden thematic structure in the blog posts, which makes it possible to group blogs by content more effectively. Topics are latent descriptions of a corpus of text. Intuitively, documents about a specific topic tend to use certain words more frequently. A topic model scans the documents and groups words that tend to occur together into topics, producing clusters of related words.

link: https://www.datacamp.com/tutorial/what-is-topic-modeling

- Latent Dirichlet Allocation (LDA):

- LDA is a probabilistic model that assumes each blog post is a mixture of topics, and each topic is a mixture of words.

- LDA is applied to the content (combined text) to extract the main topics present in the dataset.

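```python
from sklearn.decomposition import LatentDirichletAllocation

# The number of topics is a tunable choice (5 is illustrative); with n_components=1
# every post would receive the same single-topic distribution
lda = LatentDirichletAllocation(n_components=5, random_state=42)
topic_matrix = lda.fit_transform(matrix)  # shape: (n_posts, n_topics)

# Store each post's topic mixture (one array per row)
data['topic_distribution'] = list(topic_matrix)
```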

Cosine Similarity

Cosine similarity is a measure of similarity between two non-zero vectors in an n-dimensional space. It is used in various applications, such as text analysis and recommendation systems, to determine how similar two vectors are in terms of their direction in the vector space.
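
Formally, cos(θ) = (A · B) / (‖A‖ ‖B‖). The value ranges from -1 to 1 and ignores vector length. The sketch below computes it by hand for three hypothetical vectors and checks the result against scikit-learn's cosine_similarity.

```python
import numpy as np
from sklearn.metrics.pairwise import cosine_similarity

def cosine(a, b):
    # cos(theta) = (a . b) / (||a|| * ||b||)
    return np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b))

a = np.array([1.0, 2.0, 0.0])
b = np.array([2.0, 4.0, 0.0])  # same direction as a, twice as long
c = np.array([0.0, 0.0, 3.0])  # orthogonal to a

print(cosine(a, b))  # ~1.0: identical direction, magnitude is ignored
print(cosine(a, c))  # 0.0: no shared direction

# scikit-learn computes the same value for every pair of rows at once
print(cosine_similarity(np.vstack([a, b, c])).round(3))
```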

The recommendation system calculates the cosine similarity between blog posts based on a combination of textual features (from TF-IDF) and numerical engagement features (claps, responses, reading time). The top N most similar blog posts are recommended based on these similarities.

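```python
from scipy.sparse import csr_matrix, hstack
from sklearn.metrics.pairwise import cosine_similarity

# Assumed construction: stack the TF-IDF vectors and the standardized engagement metrics
combined_features = hstack([matrix, csr_matrix(ui_normalized.values)]).tocsr()

# Pairwise cosine similarity between all blog posts
similarity_matrix = cosine_similarity(combined_features)

def blog_recommendation(blog_index, top_n=5):
    # Pair each post's position with its similarity to the chosen post
    sim_score = list(enumerate(similarity_matrix[blog_index]))
    # Sort from most to least similar
    sim_score = sorted(sim_score, key=lambda x: x[1], reverse=True)
    # Drop the chosen post itself, then keep the top_n matches
    sim_score = [s for s in sim_score if s[0] != blog_index][:top_n]
    blog_indices = [i[0] for i in sim_score]
    return data[['title', 'url']].iloc[blog_indices]
```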
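
Because the snippets above depend on medium_data.csv, the following self-contained sketch runs the same pipeline end to end on a hypothetical four-post DataFrame. Combining the two feature groups by stacking sparse matrices horizontally is an assumption, since the original does not show how combined_features is built.

```python
import pandas as pd
from scipy.sparse import csr_matrix, hstack
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics.pairwise import cosine_similarity

# Hypothetical stand-in for medium_data.csv, using the same column names
data = pd.DataFrame({
    "title": ["ChatGPT for writers", "Writing with ChatGPT",
              "UX design notes", "Usability testing basics"],
    "subtitle": ["AI writing tips", "Prompts for AI writing",
                 "Redesign lessons", "How to run a usability test"],
    "url": ["https://example.com/a", "https://example.com/b",
            "https://example.com/c", "https://example.com/d"],
    "claps": [100, 80, 300, 250],
    "responses": [5, 4, 12, 10],
    "reading_time": [5, 6, 8, 7],
})

# Text features: TF-IDF over title + subtitle
data["content"] = data["title"] + " " + data["subtitle"]
matrix = TfidfVectorizer(stop_words="english").fit_transform(data["content"])

# Engagement features: z-score standardization
ui = data[["claps", "responses", "reading_time"]]
ui_normalized = (ui - ui.mean()) / ui.std()

combined_features = hstack([matrix, csr_matrix(ui_normalized.values)]).tocsr()
similarity_matrix = cosine_similarity(combined_features)

def blog_recommendation(blog_index, top_n=5):
    # Rank all posts by similarity, skipping the query post itself
    scores = sorted(enumerate(similarity_matrix[blog_index]),
                    key=lambda x: x[1], reverse=True)
    indices = [i for i, _ in scores if i != blog_index][:top_n]
    return data[["title", "url"]].iloc[indices]

print(blog_recommendation(0, top_n=2))
```

TF-IDF rows have unit length, while z-scored engagement values can be much larger. In this unweighted combination, engagement may therefore dominate the similarity, and weighting the two blocks is one possible adjustment.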

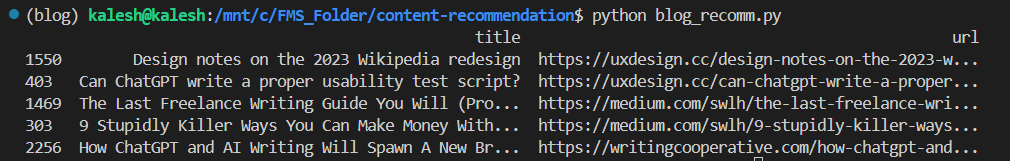

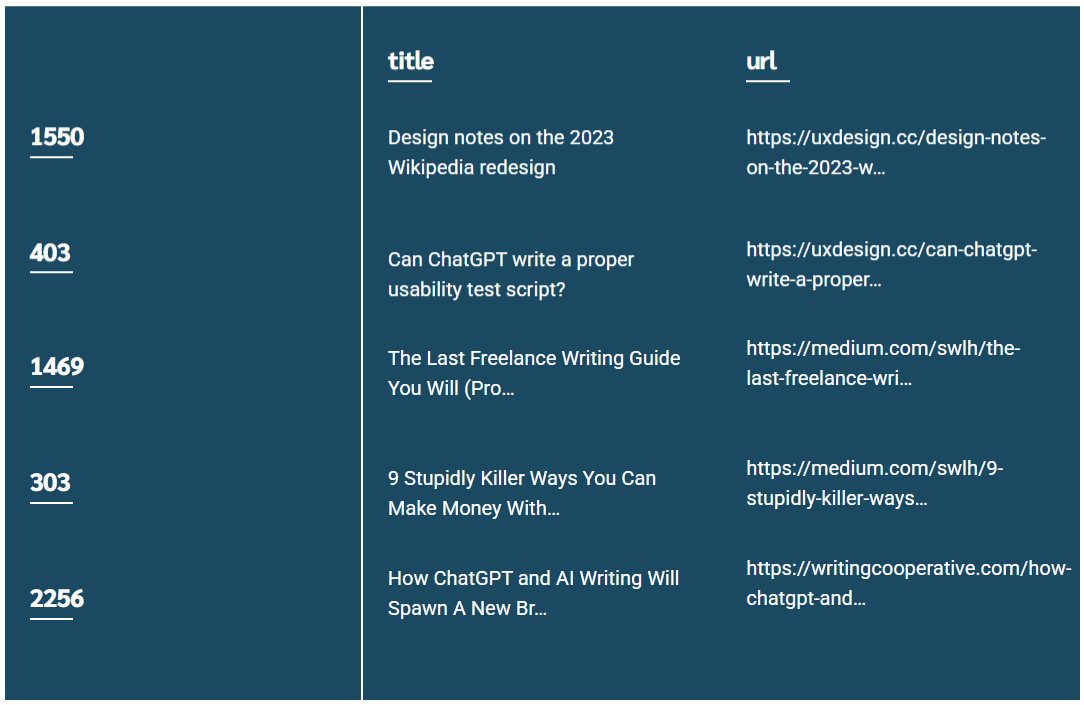

Output

| | title | url |
|---|---|---|
| 1550 | Design notes on the 2023 Wikipedia redesign | https://uxdesign.cc/design-notes-on-the-2023-w… |
| 403 | Can ChatGPT write a proper usability test script? | https://uxdesign.cc/can-chatgpt-write-a-proper… |
| 1469 | The Last Freelance Writing Guide You Will (Pro… | https://medium.com/swlh/the-last-freelance-wri… |
| 303 | 9 Stupidly Killer Ways You Can Make Money With… | https://medium.com/swlh/9-stupidly-killer-ways… |
| 2256 | How ChatGPT and AI Writing Will Spawn A New Br… | https://writingcooperative.com/how-chatgpt-and… |

About the Author

Kalesh, a graduate engineer, has been working as an Associate Software Engineer at Founding Minds for the past two years. With a keen interest in data-related domains, he loves being involved in data handling for AI/ML projects. He possesses strong foundational knowledge in Python and SQL and is currently expanding his expertise in data analytics and engineering tools. Besides work, he has a personal interest in cooking new dishes, enjoys exploring different forms of art, and finds joy in engaging with friends and family.