Artificial intelligence (AI) has become a key tool for tackling complex problems that traditional methods struggle to solve. In turn, many organizations are adopting AI to stay competitive in today’s tech-driven world. This shift towards AI significantly impacts the tech industry, particularly developers and testers, who must rapidly upskill to navigate the challenges and seize the opportunities presented by this evolving landscape.

In traditional systems, testing primarily focuses on validating the reliability of the logic implemented and uncovering its potential weaknesses. In contrast, AI systems require testing the model’s self-learned logic, which is developed from patterns and distributions in the input data. In this context, the tester’s role broadens to encompass new responsibilities: assessing the quality and distribution of the data set, evaluating the performance of the deployed models, and scrutinizing the integrated algorithms.

Testing Machine Learning (ML) systems presents a dynamic landscape where challenges shift with each use case and model. This evolving field not only demands that testers sharpen their specialized skills but also empowers them to develop innovative solutions for enhancing system reliability. By deeply engaging with the complexities of ML, testers play a crucial role in ensuring these systems are robust and effective.

Decoding the Complexities: Challenges in Quality Assurance

While traditional testing approaches and methodologies can be effective in certain scenarios, they often fall short when applied to machine learning systems. Many common challenges can undermine the reliability of conventional practices, raising questions about their suitability as best practices in the context of evolving, data-driven models.

The sections below examine these challenges at each stage of the testing process:

Grasping the Acceptance Criteria

In traditional software development, acceptance criteria are straightforward because the system is deterministic and driven purely by implemented logic. In ML systems, however, acceptance criteria must account for the probabilistic nature of the models and should be expressed in terms of performance metrics such as accuracy or F1 score. Testing against these criteria is particularly challenging, as testers must generate an extensive set of test samples for each case.

For example, consider a document processing system tasked with capturing borderless tables. The acceptance criteria might state, “The system must capture borderless tables with 90% accuracy.” To validate this, the tester must rigorously assess the system’s performance against a variety of borderless tables, ensuring it meets this accuracy standard. Even when performance targets are met (e.g., the 90% accuracy in this scenario), testers still face the task of identifying and prioritizing critical issues among the failed cases (the remaining 10%).
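A minimal sketch of how such a criterion could be checked is shown here. The `CaseResult` record, the `severity` score, and the `evaluate_acceptance` helper are hypothetical names introduced for illustration; the 90% target comes from the example above. The sketch computes accuracy over the test samples and returns the failed cases ordered by severity, so that the remaining failures can be prioritized even when the target is met.

```python
from dataclasses import dataclass


@dataclass
class CaseResult:
    case_id: str
    passed: bool   # did the system capture this table correctly?
    severity: int  # hypothetical triage score: higher means more critical


def evaluate_acceptance(results, required_accuracy=0.90):
    """Return (accuracy, criterion_met, failed cases sorted by severity)."""
    if not results:
        raise ValueError("no test results to evaluate")
    accuracy = sum(r.passed for r in results) / len(results)
    failures = sorted(
        (r for r in results if not r.passed),
        key=lambda r: r.severity,
        reverse=True,
    )
    return accuracy, accuracy >= required_accuracy, failures


# Illustrative data only: 20 samples, of which every tenth one fails.
results = [
    CaseResult(f"table-{i}", passed=(i % 10 != 0), severity=i % 3)
    for i in range(1, 21)
]
accuracy, met, failures = evaluate_acceptance(results)
print(f"accuracy={accuracy:.2f}, criterion met={met}")
for failure in failures:
    print("review first:", failure.case_id, "severity", failure.severity)
```

Keeping the failed cases alongside the aggregate number reflects the point above: a met target does not remove the need to inspect what went wrong in the rest.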

Crafting Test Cases

To effectively formulate test cases, a thorough understanding of the basic workings of deployed ML models is essential. For instance, when creating test cases for a language model-based system, focusing on different font styles and sizes is irrelevant since the model operates based on context.

When designing test cases for AI-integrated systems, it’s crucial to evaluate both the model and the associated algorithm processing the model output. Ensuring the reliability of these algorithms can be challenging, as the model might struggle with test cases specifically designed to evaluate the algorithm’s performance.

Curating Test Data

Preparing test data is both essential and often time-consuming. To maximize effectiveness, it’s important to build test data with a clear understanding of the model’s learning after training. Focus should be placed on areas where the model has had less exposure. Therefore, testers must be good at interpreting data distribution and capable of drawing the necessary insights and conclusions.
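One simple way to spot areas of low exposure is to look at how categories are distributed in the training data. The sketch below assumes that each training sample carries a category label; the category names, the counts, and the 10% cut-off are made up for illustration, and `underrepresented` is a hypothetical helper rather than part of any library.

```python
from collections import Counter


def underrepresented(labels, min_share=0.10):
    """Return {category: share} for categories below min_share of the data."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {
        label: count / total
        for label, count in counts.items()
        if count / total < min_share
    }


# Illustrative training labels for a table-capture model.
training_labels = ["bordered"] * 70 + ["borderless"] * 25 + ["nested"] * 5
gaps = underrepresented(training_labels)
print(gaps)  # categories that likely deserve extra test data
```

Categories returned by this check are candidates for additional test samples, since the model has seen comparatively few examples of them.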

Test data is generated based on test cases approved by the developers, but it’s important to note that a single test case can involve numerous possible data combinations. Capturing all these combinations may not always be feasible. In such cases, testers need a strong understanding of how to use ML technologies to automate the generation of test data.
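To illustrate how quickly combinations multiply, the sketch below enumerates the variations of a single hypothetical test case and then draws a reproducible sample from them. This is plain combinatorial enumeration, a simpler starting point than the ML-based generation mentioned above; the dimension names and values are assumptions chosen for the example.

```python
import itertools
import random

# Hypothetical variation dimensions for one test case.
dimensions = {
    "layout": ["single-column", "two-column"],
    "table_style": ["bordered", "borderless"],
    "scan_quality": ["clean", "noisy", "skewed"],
}


def all_combinations(dims):
    """Enumerate every combination of the given dimension values."""
    keys = list(dims)
    return [dict(zip(keys, values)) for values in itertools.product(*dims.values())]


def sample_combinations(dims, k, seed=0):
    """Draw a reproducible sample when full coverage is not feasible."""
    combos = all_combinations(dims)
    rng = random.Random(seed)
    return rng.sample(combos, min(k, len(combos)))


combos = all_combinations(dimensions)
subset = sample_combinations(dimensions, k=5)
print(len(combos), "combinations in total;", len(subset), "selected for this run")
```

Even three small dimensions already produce twelve combinations, which is why sampling or automated generation becomes necessary as test cases grow.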

Structuring Ground Truth

As projects scale, the concept of ground truths becomes increasingly important in order to automate continuous evaluation. However, creating, updating, and maintaining ground truths for regression testing is often a laborious process, as accurately predicting the exact output can be challenging in some use cases.

Imagine using a speech-to-text transcription system. The ground truth stores the expected output as “The quick brown fox.” However, the system processes the speech and splits it into two parts: “The quick” and “brown fox.” At first glance, you might think the output is incorrect. But in reality, the system simply interpreted the speech as two separate sequences, still delivering the right words. Such variations are common in ML systems, especially those based on language models, where different interpretations can lead to unexpected, but still accurate results.
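A ground-truth comparison that tolerates this kind of variation could be sketched as follows. The normalization rules here (joining segments, lowercasing, dropping punctuation) are assumptions chosen for the example; a real project would need to decide which differences count as acceptable for its use case.

```python
import re


def normalize(segments):
    """Join segments, lowercase them, and keep only word tokens."""
    text = " ".join(segments).lower()
    return re.findall(r"[a-z0-9']+", text)


def matches_ground_truth(expected, predicted_segments):
    """Compare word sequences, ignoring segmentation, case, and punctuation."""
    return normalize([expected]) == normalize(predicted_segments)


expected = "The quick brown fox."
predicted = ["The quick", "brown fox."]
print(matches_ground_truth(expected, predicted))                        # True
print(matches_ground_truth(expected, ["The quick", "brown box."]))      # False
```

Comparing normalized word sequences keeps the check strict about the words themselves while ignoring how the system chose to split them.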

Testing

Developing a universal best practice for testing machine learning systems is a challenge, as each use case demands its own tailored methods to ensure a thorough and effective evaluation.

Consider a personalized recommendation system, where an ML model suggests movies to users based on their viewing history, preferences, and behavior. Unlike traditional systems, this model may provide different recommendations to the same user at various times due to factors like retraining, evolving data, and stochastic elements within the algorithm. This inherent unpredictability in ML systems adds complexity to the validation process, requiring continuous monitoring and extensive test datasets to ensure its accuracy, reliability, and dependability.

Moreover, an ML system often resembles a black box filled with learned mathematical functions. Unlike traditional systems, pinpointing the source of an error within this complex model is notably challenging.

To accurately validate the model’s performance metrics, such as F1 score, accuracy, and precision, testers must know how to calculate these metrics using carefully curated test data. This process also helps confirm that the different data types are weighted appropriately in the training set and that the strategy used to split the data for testing is effective.
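For reference, these metrics can be computed directly from the expected and predicted labels. The sketch below uses the standard definitions for a binary classifier; the label lists are illustrative, and `binary_metrics` is a hypothetical helper written for this example.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for a binary classifier."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and equally long")
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    correct = sum(t == p for t, p in pairs)

    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {
        "accuracy": correct / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Illustrative labels: 4 positive and 6 negative samples.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
metrics = binary_metrics(y_true, y_pred)
print(metrics)
```

In this example accuracy (0.8) is higher than precision and recall (0.75 each), which shows why a single metric rarely tells the whole story on imbalanced test data.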

Formulating a testing strategy is the most challenging and crucial step in the process. A testing strategy for a classification model cannot be applied to a language model-based system, so testers can’t stick to a single method for testing ML systems equipped with different models. This dynamic nature of ML system testing introduces a level of adaptability and complexity that traditional testing methods often lack.

Understanding and Logging Issues

Just as people have different perspectives on decisions, identifying the factors behind a model’s decisions can be equally complex. Discrepancies often arise in how data engineering teams, testers, and developers interpret data objects, so thorough discussion is crucial before escalating any issue. Unlike traditional systems, where errors are typically rooted in the developed logic, ML systems can encounter problems in both the model and the algorithms that process its output.

Testers must know how to obtain the necessary intermediate outputs to diagnose problems accurately. When logging an issue, start by analyzing the model’s predictions to pinpoint whether the error is related to the model itself. Such issues should be tagged and flagged for ML engineers. Model-related issues may require additional training with similar data patterns, and clear communication among testers, developers, and stakeholders is vital to understand the problem and decide on resolutions.
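The triage step described above can be sketched as a small decision function, assuming the tester can capture the model’s intermediate prediction as well as the system’s final output. The function name, the tags, and the sample values are hypothetical and only illustrate the order of the checks.

```python
def triage(model_output, expected_model_output, final_output, expected_final_output):
    """Tag a failing test as a model issue or an algorithm issue."""
    if final_output == expected_final_output:
        return "no_issue"
    # Inspect the intermediate prediction first, as suggested above.
    if model_output != expected_model_output:
        return "model"      # flag for ML engineers; may need further training
    return "algorithm"      # the model was right, so post-processing is suspect


# Illustrative case: the model detected the table, but the final export is wrong.
tag = triage(
    model_output="table_detected",
    expected_model_output="table_detected",
    final_output="2 columns",
    expected_final_output="3 columns",
)
print(tag)  # algorithm
```

In practice the comparison of model outputs may need a tolerance or a similarity measure instead of strict equality, depending on the model type.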

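Moreover, ML systems can become overfitted to specific data, aligning precisely with the intended outcomes for those samples without generalizing beyond them. When retesting a fix, it’s crucial to devise strategies, such as using fresh data with similar patterns rather than only the originally reported samples, to ensure this loophole isn’t exploited.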

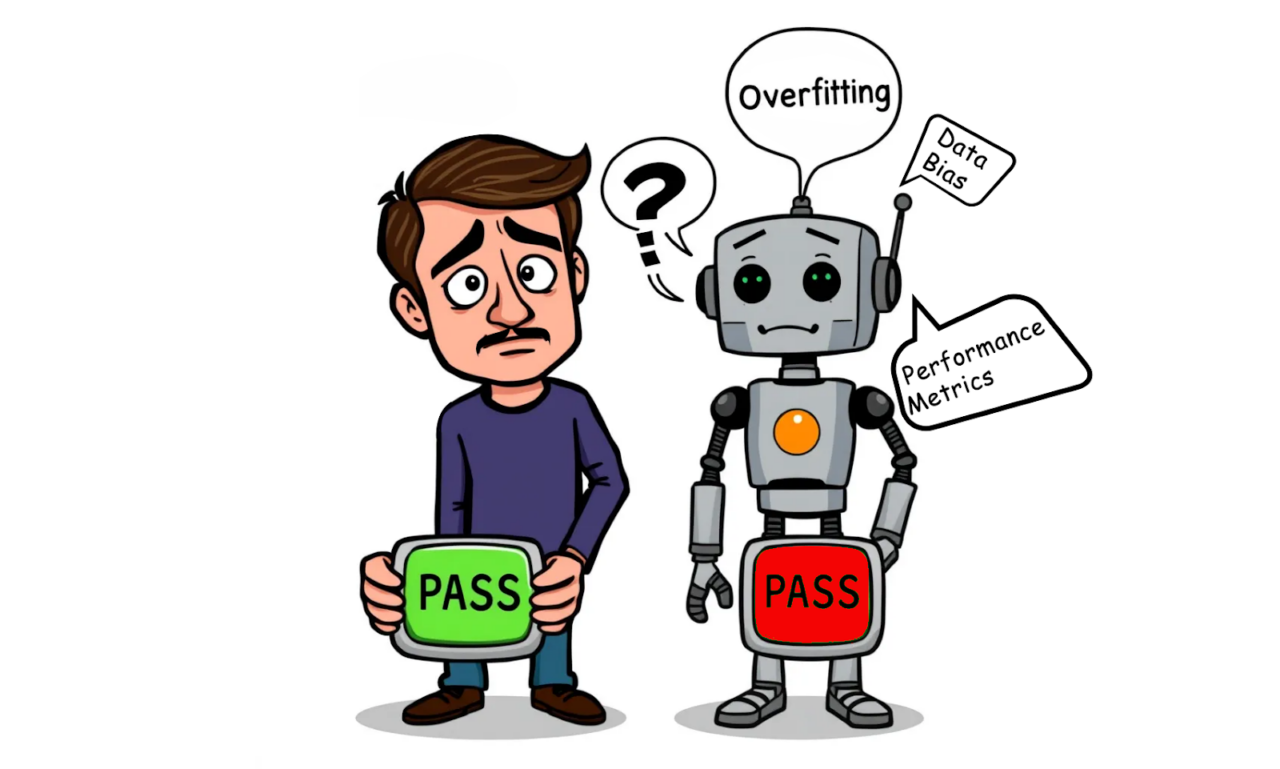

Defining Test Outcomes

While curating the test data, the possibility of multiple data combinations within a test case necessitates a metric to define its outcome. In traditional systems, a test case passes only when all possible scenarios execute flawlessly. However, with ML systems—where accuracy rarely hits 100%—it’s unrealistic to expect a perfect outcome for every data combination. To address this challenge, it’s crucial to establish a performance metric and threshold that effectively determines pass or fail, tailored to the specific requirements and criticality of the use case.

For example, in a customer sentiment analysis system, a tester might pass the test if the system performs as intended for 85% of the data combinations. However, applying the same threshold in a healthcare-related cancer prediction system would render the model unreliable. In such critical cases, testers may need to set the threshold as high as 98%. Thus, the criteria for passing a test case must be carefully tailored to the use case and its real-world implications.
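A threshold-based outcome of this kind could be expressed as in the sketch below. The 85% and 98% values are taken from the examples above; the dictionary keys and the `decide_outcome` helper are hypothetical names used only for illustration.

```python
# Thresholds from the examples above; the keys are hypothetical identifiers.
PASS_THRESHOLDS = {
    "sentiment_analysis": 0.85,
    "cancer_prediction": 0.98,
}


def decide_outcome(use_case, passed, total):
    """Return 'pass' or 'fail' for one test case across its data combinations."""
    if total <= 0:
        raise ValueError("total must be positive")
    pass_rate = passed / total
    return "pass" if pass_rate >= PASS_THRESHOLDS[use_case] else "fail"


# The same 90% pass rate leads to different outcomes per use case.
print(decide_outcome("sentiment_analysis", passed=90, total=100))  # pass
print(decide_outcome("cancer_prediction", passed=90, total=100))   # fail
```

Keeping the thresholds in one place makes the pass criteria explicit and easy to review with stakeholders when the criticality of a use case changes.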

Testing Cycle

To accommodate new requirements and improve the performance of the deployed model, periodic training with new data is inevitable. The preprocessing, feature engineering, and hyperparameters used for each training session can potentially introduce new errors or alter the system’s existing behavior, making regression testing crucial to ensure that new updates do not negatively impact performance.
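One lightweight form of regression check is to compare the metrics of the retrained model against those of the previously deployed one on the same test set. The sketch below assumes both sets of metrics are already available as dictionaries; the metric values and the 0.01 tolerance are illustrative assumptions, not recommendations.

```python
def find_regressions(baseline, candidate, tolerance=0.01):
    """Return metrics that are missing or dropped by more than `tolerance`."""
    regressions = {}
    for metric, old_value in baseline.items():
        new_value = candidate.get(metric)
        if new_value is None or old_value - new_value > tolerance:
            regressions[metric] = (old_value, new_value)
    return regressions


# Illustrative metrics before and after a retraining session.
baseline = {"accuracy": 0.92, "f1": 0.90, "precision": 0.91}
candidate = {"accuracy": 0.93, "f1": 0.86, "precision": 0.905}

regressions = find_regressions(baseline, candidate)
print(regressions)  # only f1 dropped by more than the tolerance
```

A small tolerance avoids flagging ordinary run-to-run variation while still catching meaningful drops introduced by new preprocessing, features, or hyperparameters.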

In traditional systems, integrating new features or updating operations can be efficiently managed with regression and continuous testing using established frameworks and automation tools. However, in certain ML use cases where functionality relies on the deployed model, automating intermittent testing post-training presents challenges due to unstable ground truth.

Additionally, these traditional frameworks and tools often fall short when it comes to end-to-end testing of ML systems, revealing limitations that need addressing for robust ML model validation.

Conclusion

Emerging technologies naturally undergo continuous refinement and integration to reach their full potential. The recent strides in AI technology and its increasing reliability are accelerating its adoption across diverse applications. Testing is pivotal in ensuring the reliability and dependability of ML systems. While traditional testing strategies, frameworks, and tools have their limitations in achieving comprehensive end-to-end testing, this is where innovation shines.

Each unique ML use case presents its own set of challenges and opportunities, offering testers a rich learning experience and the chance to craft effective, reliable testing strategies. By tackling these unique challenges, we’re not just improving AI systems – we’re shaping a future where we can confidently rely on AI in our daily lives.

About the Author

Krishnadas, a Post-Graduate Engineer, is currently applying his skills as part of the dynamic Machine Learning (ML) team at Founding Minds. With a strong foundation in Data Science and Artificial Intelligence, Krishnadas transitioned into this field after pursuing a course in AI to align his career with emerging technologies. He specializes in the end-to-end testing of ML systems, particularly within agile environments. Fueled by a passion for exploration, Krishnadas enjoys a variety of hobbies, from taking road trips and singing to experimenting in the kitchen.