In the era of data-driven decision-making and machine learning, the quality and quantity of data play a crucial role in the success of AI models. However, obtaining large, high-quality datasets can be challenging due to privacy concerns, data scarcity, and the high cost of data collection. Synthetic data generation has emerged as a promising solution to address these challenges, providing a way to create realistic and diverse datasets without the constraints of real-world data acquisition.

Let’s begin by understanding what synthetic data is and its importance in the field of AI and machine learning.

What is synthetic data?

Synthetic data is artificially generated data that mimics the characteristics of real-world data without containing actual real-world records. This allows researchers and developers to test and improve algorithms without risking the privacy or security of real data.

In the context of instruction fine-tuning for language models, synthetic data refers to generated examples of human-AI interactions. Each example typically consists of an instruction or query paired with an appropriate AI response.

Example ▶

>> Human (instruction): What is 2 + 2?

>> AI (response): 2 + 2 is 4.

>> Human (instruction): What’s the recommended daily water intake?

>> AI (response): The general recommendation is about 8 cups (64 ounces) of water per day for most adults.
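Before fine-tuning, pairs like these are usually stored as structured records. The minimal sketch below assumes a chat-style `messages` layout with `user` and `assistant` roles, serialized as JSON Lines (one JSON object per line). This is a widespread convention, not a format this article or any particular trainer prescribes, and the helper names are hypothetical.

```python
import json

# The two instruction-response pairs from the example above.
pairs = [
    ("What is 2 + 2?", "2 + 2 is 4."),
    (
        "What's the recommended daily water intake?",
        "The general recommendation is about 8 cups (64 ounces) of water per day for most adults.",
    ),
]


def to_chat_record(instruction: str, response: str) -> dict:
    """Wrap one pair in a chat-style 'messages' record (an assumed, common layout)."""
    return {
        "messages": [
            {"role": "user", "content": instruction},
            {"role": "assistant", "content": response},
        ]
    }


def to_jsonl(records) -> str:
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


records = [to_chat_record(q, a) for q, a in pairs]
jsonl = to_jsonl(records)
print(jsonl)
```

JSON Lines keeps each example independent, so large synthetic datasets can be appended to or streamed one record at a time.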

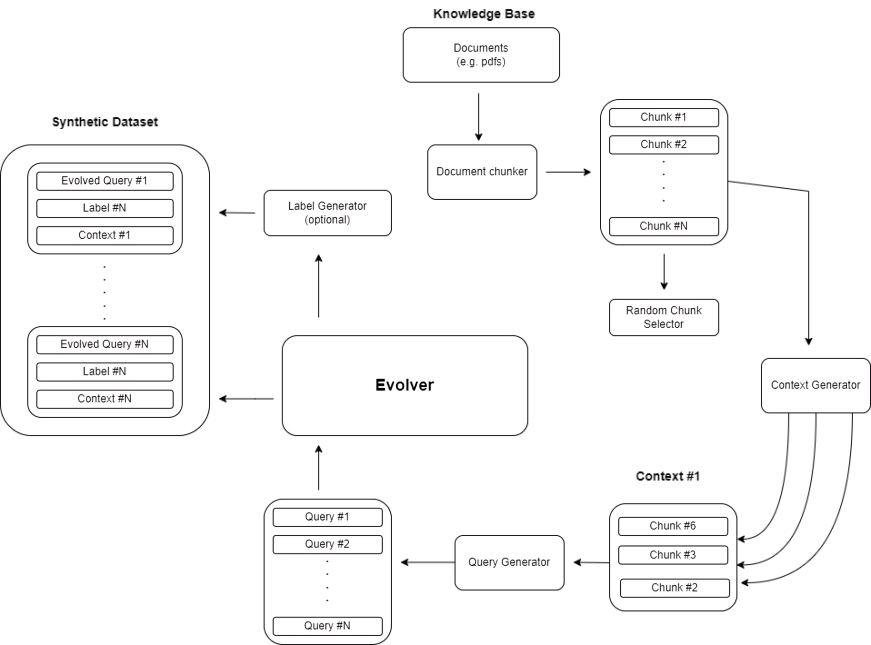

Distilabel Framework

Now, let’s explore a powerful tool for generating synthetic data: the Distilabel framework. Distilabel is an open-source framework that lets AI engineers generate synthetic data and AI feedback for a wide variety of projects, from traditional NLP tasks to generative LLM scenarios such as instruction following, dialogue, and judging. Its programmatic approach lets you build scalable pipelines for data generation and AI feedback. Distilabel’s goal is to accelerate AI development by quickly producing high-quality, diverse datasets based on verified research methodologies for generating and judging AI feedback.

Distilabel emphasizes data quality as a way to address both high computing costs and the need for better model outputs. It supports you in synthesizing and evaluating data, so you can concentrate on maintaining the highest standards for your synthetic data.

Owning the data used to fine-tune your own LLM is not easy, but Distilabel can help you get started. It integrates AI feedback from many LLM providers through a single unified API, and it aims to improve model performance by focusing on data quality and fast iteration.

What do people build with Distilabel?

- The 1M OpenHermesPreference dataset contains roughly 1 million AI preferences derived from teknium/OpenHermes-2.5. It demonstrates how Distilabel can be used to synthesize data at a very large scale.

- The distilabeled Intel Orca DPO dataset shows how filtering out 50% of the original dataset using AI feedback improved model performance, which contributed to the improved OpenHermes model.

- The haiku DPO data illustrates how to create a dataset for a specific task and apply techniques from recent research papers to improve its quality.
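The filtering idea behind the Intel Orca DPO example can be illustrated without Distilabel itself. This is a simplified sketch, not Distilabel’s API and not the exact procedure used for that dataset: it assumes each preference record already carries a numeric `score` assigned by an LLM judge (a hypothetical field) and keeps only the best-rated half.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PreferenceRecord:
    prompt: str
    chosen: str
    rejected: str
    score: float  # hypothetical AI-feedback rating from an LLM judge (higher is better)


def keep_top_fraction(records: List[PreferenceRecord], fraction: float = 0.5) -> List[PreferenceRecord]:
    """Keep the highest-scoring `fraction` of records, preserving their original order."""
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in (0, 1]")
    if not records:
        return []
    n_keep = max(1, int(len(records) * fraction))
    # Rank record indices by judge score, best first, and keep the top n_keep.
    ranked = sorted(range(len(records)), key=lambda i: records[i].score, reverse=True)
    kept = set(ranked[:n_keep])
    return [r for i, r in enumerate(records) if i in kept]
```

For example, with four records scored 9.0, 2.5, 7.0, and 4.0, `keep_top_fraction(records, 0.5)` keeps the first and third. Preserving the original order avoids introducing an accidental score-based ordering into the training data.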

Why use LLMs for Synthetic Data Generation?

Next, let’s discuss why Large Language Models (LLMs) are becoming increasingly popular for synthetic data generation. LLMs, such as the models from Mistral AI, are at the forefront of the synthetic data generation movement, offering a range of benefits for generating high-quality, scalable datasets. Here’s why synthetic data generation using LLMs is gaining traction:

- Solving Data Scarcity: Many industries struggle with access to sufficient, high-quality data. LLMs can bridge this gap by generating vast amounts of synthetic data and providing training material in fields where real data is scarce, expensive, or hard to collect.

- Enhancing Privacy: Privacy concerns are a growing challenge in today’s digital age. Synthetic data offers a way to protect sensitive information, allowing companies to build and test models without using actual personal data. This can make it easier to comply with regulations such as GDPR while preserving data utility.

- Boosting Diversity and Edge-Case Handling: Real-world datasets often lack diversity and fail to cover rare or edge-case scenarios. LLMs can generate synthetic data that fills these gaps, enhancing model generalization and robustness by simulating a broader range of situations.

- Cost-Effective and Time-Saving: Traditional data collection and annotation can be expensive and time-consuming. LLMs streamline this process by producing synthetic data quickly, reducing the time and cost needed to build machine learning models.

- Accelerating AI Research: LLMs are a catalyst for faster innovation. By generating synthetic data on demand, researchers can rapidly experiment with new models and algorithms, cutting down on the delays caused by waiting for real-world data collection.

Synthetic Data Generation Using LLMs

Now, let’s delve into the specific approaches used in LLM-powered synthetic data generation. With the advent of large language models (LLMs), both the motivation for generating synthetic data and the techniques for generating it have been supercharged. Enterprises across industries are generating synthetic data to fine-tune foundation LLMs for various use cases, such as improving risk assessment in finance, optimizing supply chains in retail, improving customer service in telecom, and advancing patient care in healthcare.

LLM-powered synthetic data generation

There are two broad approaches to improving language models by fine-tuning them on LLM-generated synthetic data: knowledge distillation and self-improvement.

- Knowledge distillation is the process of transferring the capabilities of a larger model to a smaller one. Simply training both models on the same dataset is not enough, because the smaller model may not “learn” the most accurate representation of the underlying data. Instead, we can use the larger model to solve a task and use its outputs as training data, so that the smaller model learns to imitate the larger one.

- Self-improvement uses the same model to critique its own reasoning and outputs, and is often used to further hone the model’s capabilities.
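Both approaches reduce to a generate-then-collect loop that produces fine-tuning data. The minimal sketch below assumes hypothetical `teacher`, `model`, and `critic` callables that wrap whatever LLM you use; it does not call any real API, and the actual fine-tuning step is out of scope.

```python
from typing import Callable, Dict, List

# Hypothetical signature: any function that maps a prompt string to a completion string.
Generate = Callable[[str], str]


def distill_dataset(prompts: List[str], teacher: Generate) -> List[Dict[str, str]]:
    """Knowledge distillation: record the larger teacher model's answers so a
    smaller student model can later be fine-tuned to imitate them."""
    return [{"instruction": p, "response": teacher(p)} for p in prompts]


def self_improve_dataset(
    prompts: List[str],
    model: Generate,
    critic: Callable[[str, str], bool],
) -> List[Dict[str, str]]:
    """Self-improvement: the model answers, then its answers are critiqued
    (in practice `critic` would prompt the same model to judge its own output);
    only answers that pass the critique are kept for further fine-tuning."""
    kept = []
    for p in prompts:
        answer = model(p)
        if critic(p, answer):
            kept.append({"instruction": p, "response": answer})
    return kept
```

The key difference is where the quality signal comes from: distillation trusts a stronger teacher, while self-improvement relies on the model’s own critique to filter what it learns from.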

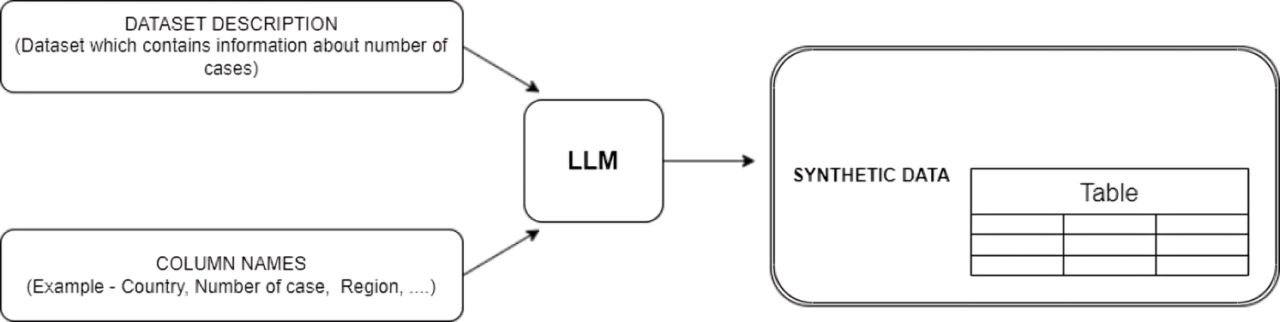

Architecture of Synthesizer

For example, generating a tabular dataset requires a prompt that describes the desired format and characteristics of the data, including the column names and the number of rows. The LLM can then create a synthetic dataset with the corresponding columns and the required number of rows.

The prompt should also specify the context of the dataset, to make the most of the LLM’s linguistic capabilities, along with the column names and the values of previous rows (the first rows have no previous values to include). The model can then complete the dataset progressively while keeping all rows consistent.
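The progressive, row-by-row prompting described above can be sketched as follows. It assumes a hypothetical `complete` callable that sends a prompt to an LLM and returns one CSV row as text; the prompt wording, the five-row context window, and the retry limit are illustrative choices rather than part of the original design.

```python
import csv
import io
from typing import Callable, List, Sequence


def to_csv_line(row: Sequence[str]) -> str:
    """Format one row as a CSV line, quoting values that contain commas."""
    buf = io.StringIO()
    csv.writer(buf).writerow(row)
    return buf.getvalue().rstrip("\r\n")


def build_row_prompt(context: str, columns: Sequence[str],
                     previous_rows: List[List[str]], window: int = 5) -> str:
    """Prompt for the next row: dataset context, column names, and the most
    recent `window` rows (the first row has no previous rows)."""
    lines = [
        f"Context: {context}",
        "Columns: " + to_csv_line(columns),
        "Reply with exactly one new CSV row containing a value for every column.",
    ]
    recent = previous_rows[-window:]
    if recent:
        lines.append("Previous rows:")
        lines.extend(to_csv_line(row) for row in recent)
    return "\n".join(lines)


def generate_table(context: str, columns: Sequence[str], n_rows: int,
                   complete: Callable[[str], str],
                   max_attempts: int = 100) -> List[List[str]]:
    """Generate rows one at a time, feeding earlier rows back into each prompt
    so the model can keep the table consistent. Malformed replies are retried."""
    rows: List[List[str]] = []
    attempts = 0
    while len(rows) < n_rows:
        if attempts >= max_attempts:
            raise RuntimeError("too many malformed replies from the model")
        attempts += 1
        reply = complete(build_row_prompt(context, columns, rows))
        parsed = next(csv.reader(io.StringIO(reply.strip())), [])
        if len(parsed) == len(columns):  # reject rows with the wrong number of fields
            rows.append(parsed)
    return rows
```

Limiting the prompt to a window of recent rows keeps it short as the table grows, at the cost of the model seeing only part of the history; a larger window trades prompt length for consistency.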

Mistral AI model

Let’s explore a specific family of LLMs that is gaining attention in synthetic data generation: the models from Mistral AI. Mistral’s models are language models designed to understand and generate human-like text. Their instruction-tuned variants are fine-tuned to follow instructions and respond effectively to natural language prompts, which makes them suitable for applications such as answering questions, generating content, or performing tasks based on user input.

Mistral models are typically used in conversational AI, where they can handle complex dialogues, generate coherent responses, and follow the context of a conversation. The models are trained on large datasets to understand different nuances in language, making them highly versatile in understanding and generating text.

Mistral AI has gained attention for synthetic data generation due to several factors:

- Advanced Models: Mistral AI is known for developing high-performance language models that are competitive with other leading models like GPT. These models are effective at generating realistic and contextually accurate synthetic data.

- Customization: The models can be fine-tuned for specific tasks, making them well suited to generating data tailored to particular scenarios or industries.

- Smaller and Faster Models: Mistral has focused on creating models that are not only powerful but also computationally efficient. This makes them suitable for tasks where synthetic data must be generated quickly and at scale.

- Cost-Effectiveness: Due to their efficiency, Mistral models often require less computational power, which can reduce the cost of synthetic data generation.

- Wide Range of Applications: Mistral AI’s models can be used for various data generation tasks, including text, dialogue, and other structured or unstructured data. This versatility makes them a strong choice for different types of synthetic data needs.

- Integration Capabilities: Mistral models can be integrated into existing pipelines, making it easier for organizations to incorporate synthetic data generation into their workflows.

- Synthetic Data for Privacy: Mistral AI’s ability to generate realistic synthetic data helps in situations where data privacy is a concern. This is particularly useful in industries like healthcare, finance, and government, where using real data might be restricted.

- Active Development: Mistral AI benefits from an active community and frequent updates, which help keep its models competitive with the state of the art. This support matters in the fast-evolving field of AI.

In summary, Mistral AI stands out in synthetic data generation thanks to its powerful, efficient, and versatile models, making it a strong choice for organizations that need high-quality synthetic data for various applications.

Getting started with Mistral AI

Finally, let’s look at how one can start using Mistral AI for synthetic data generation.

To begin using Mistral AI’s model, you’ll need to follow these steps:

1. Obtain an API key:

- Visit the official Mistral AI website.

- Sign up for an account if you don’t already have one.

- Navigate to the API section to generate your API key.

2. Install the Mistral AI Python client:

```shell
pip install mistralai
```

3. Store your API key in an environment variable (no spaces around `=` in the shell):

```shell
export MISTRAL_API_KEY='your_api_key'
```

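4. Initialize the client in Python. This uses the `Mistral` class from version 1.x of the `mistralai` package; the older `MistralClient` class belongs to the pre-1.0 SDK.

```python
import os

from mistralai import Mistral

# Read the API key from the environment instead of hard-coding it.
api_key = os.environ["MISTRAL_API_KEY"]
client = Mistral(api_key=api_key)
```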

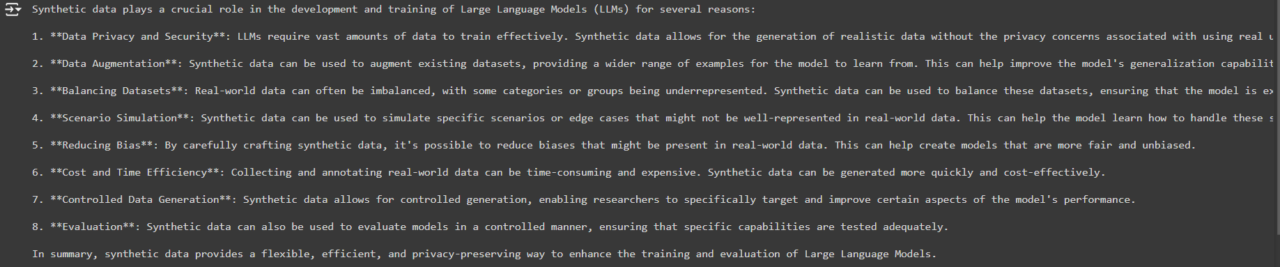

5. Send a chat request to a model:

```python
import os

from mistralai import Mistral

model = "mistral-large-latest"
client = Mistral(api_key=os.environ["MISTRAL_API_KEY"])

chat_response = client.chat.complete(
    model=model,
    messages=[
        {
            "role": "user",
            "content": "Why use synthetic data for LLMs?",
        }
    ],
)

# The reply text is in the first choice's message.
print(chat_response.choices[0].message.content)
```

NB: Refer to Mistral AI’s official documentation for the most up-to-date instructions and best practices, since the client library’s API has changed between versions.

Running the code above prints the model’s answer to the prompt.
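To turn the chat call above into a small synthetic-data generator, you can ask the model for instruction-response pairs in JSON and validate what comes back. This is a sketch under stated assumptions: the prompt wording and JSON layout are illustrative, `build_generation_prompt`, `parse_pairs`, and `generate_pairs` are hypothetical helpers, and only `mistral_call` touches the real `mistralai` 1.x client, importing it lazily so the parsing logic can be tested without the SDK or an API key.

```python
import json
import os
from typing import Callable, Dict, List


def build_generation_prompt(topic: str, n: int) -> str:
    """Ask the model for n instruction-response pairs about `topic` as a JSON array."""
    return (
        f"Generate {n} diverse instruction-response pairs about {topic}. "
        'Reply with only a JSON array of objects with the keys "instruction" and "response".'
    )


def parse_pairs(reply: str) -> List[Dict[str, str]]:
    """Extract well-formed pairs from the reply; models do not always return clean JSON."""
    start, end = reply.find("["), reply.rfind("]")
    if start == -1 or end < start:
        return []
    try:
        data = json.loads(reply[start:end + 1])
    except json.JSONDecodeError:
        return []
    if not isinstance(data, list):
        return []
    return [
        {"instruction": str(item["instruction"]), "response": str(item["response"])}
        for item in data
        if isinstance(item, dict) and "instruction" in item and "response" in item
    ]


def generate_pairs(topic: str, n: int, call_llm: Callable[[str], str]) -> List[Dict[str, str]]:
    """Prompt any LLM (passed in as a callable) and keep only the valid pairs."""
    return parse_pairs(call_llm(build_generation_prompt(topic, n)))


def mistral_call(prompt: str, model: str = "mistral-large-latest") -> str:
    """Send one user message with the mistralai 1.x client; requires MISTRAL_API_KEY."""
    from mistralai import Mistral  # imported here so the helpers above work without the SDK

    client = Mistral(api_key=os.environ["MISTRAL_API_KEY"])
    response = client.chat.complete(
        model=model,
        messages=[{"role": "user", "content": prompt}],
    )
    return response.choices[0].message.content
```

For example, `generate_pairs("personal finance", 5, mistral_call)` returns a list of dicts that can be written out as training data. Keeping the API call behind a plain callable makes it easy to swap models or to test the parsing with a stub.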

Conclusion

Synthetic data generation, powered by advanced Large Language Models (LLMs) such as Mistral AI’s models and by frameworks such as Distilabel, is transforming the field of AI and machine learning. This approach offers numerous benefits, including:

1. Addressing data scarcity and privacy concerns

2. Enabling cost-effective and efficient data creation

3. Improving model robustness and generalization

4. Facilitating research and development in AI

Synthetic data can support each of the three key stages of LLM development: pre-training, fine-tuning, and alignment. Each stage plays a crucial role in developing high-quality, task-specific models.

While challenges remain, particularly in ensuring diversity and complexity in evaluation datasets, the potential of synthetic data generation is immense. As tools and techniques continue to evolve, synthetic data is poised to play an increasingly important role in advancing AI capabilities across various industries and applications.

About the Author

Kalesh, a graduate engineer, has been working as an Associate Software Engineer at FoundingMinds for the past two years. With a keen interest in managing data-related domains, he loves being involved in data handling for AI/ML projects. He possesses strong foundational knowledge in Python and SQL and is currently expanding his expertise in data analytics and engineering tools. Besides work, he has a personal interest in cooking new dishes, enjoys exploring different forms of art, and finds joy in engaging with friends and family.