In the realm of big data, efficiency reigns supreme. As data volumes continue to surge, organizations must adopt formats that deliver both speed and storage efficiency.

Enter Parquet: a groundbreaking columnar storage file format that has transformed the way we handle massive datasets. But what exactly is Parquet, and why has it become the preferred choice for data engineers and analysts over the last decade?

What is Parquet?

Parquet is an open-source, columnar storage file format designed for high performance with big data processing frameworks such as Apache Hadoop, Apache Spark, and Apache Hive. Row-based formats such as CSV store all the fields of a record together. Parquet instead stores all the values of each column together. Values in a column share a type and often repeat, so this arrangement compresses well and lets queries read only the columns they need. That makes it well suited to large-scale analytical workloads.
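To make the difference concrete, here is a minimal plain-Python sketch. It illustrates the idea only and does not reproduce Parquet's on-disk format. It pivots a few hypothetical row-oriented records into one list per column, which is the logical layout a columnar format stores contiguously. The field names and values are made up for the example.

```python
from typing import Any

# Hypothetical sample records, stored row by row (as a CSV file would)
rows: list[dict[str, Any]] = [
    {"id": 1, "category": "A", "amount": 10.0},
    {"id": 2, "category": "A", "amount": 12.5},
    {"id": 3, "category": "B", "amount": 7.25},
]


def to_columnar(records: list[dict[str, Any]]) -> dict[str, list[Any]]:
    """Pivot row-oriented records into one list of values per column."""
    columns: dict[str, list[Any]] = {name: [] for name in records[0]}
    for record in records:
        for name, value in record.items():
            columns[name].append(value)
    return columns


columns = to_columnar(rows)

# A query that needs only 'amount' touches one list instead of every row
total_amount = sum(columns["amount"])
print(columns["category"])  # ['A', 'A', 'B']
print(total_amount)         # 29.75
```

In a columnar file, each of these per-column lists would be written (and compressed) as its own contiguous block. A reader that only needs `amount` can therefore skip the other columns entirely.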

Parquet File Format

- Magic Number: A Parquet file begins and ends with a magic number, PAR1, which helps in recognizing the file format.

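- File Metadata: The file metadata is written in a footer at the end of the file. It sits just before a 4-byte field giving the footer's length and the closing PAR1 magic number. Writing the metadata last lets a writer produce the file in a single pass. The metadata includes the schema, the total number of rows, and, for each row group, the offsets of its column chunks.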

- Row Groups: Parquet files are divided into row groups, which are contiguous blocks of rows. Each row group contains column chunks, which store data for a specific column.

- Column Chunks: Each column in a row group is stored separately in a column chunk, so a reader can load specific columns without reading the entire dataset. The metadata for each column chunk includes statistics about the values it holds. Query engines use these statistics for optimizations such as skipping data that cannot match a filter. The relevant statistics include:
  - Min Value: The minimum value in the column chunk.
  - Max Value: The maximum value in the column chunk.
  - Count: The number of values in the column chunk.

- Pages: Each column chunk is further divided into pages. There are different types of pages:

-

- Data Pages: Store the actual data

-

- Index Pages: Used for indexing.

-

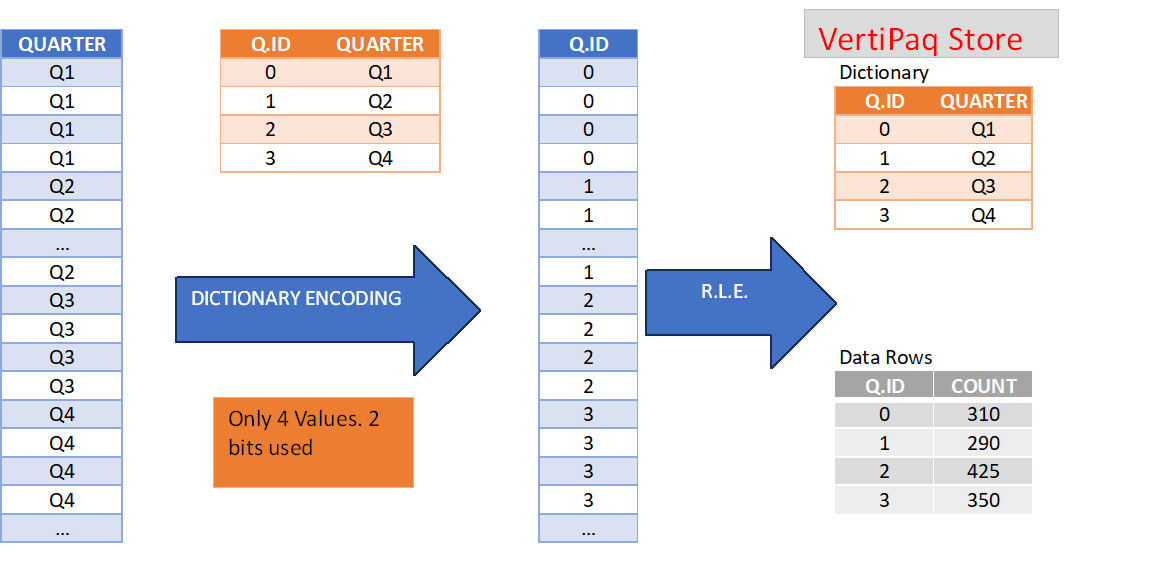

- Dictionary Pages: Pages can utilize various encoding schemes, such as dictionary encoding or run-length encoding.

Combination of Dictionary Encoding and Run Length Encoding. Data Source: Power BI Tips for Star Schema and Dimensional Data Modeling (corebts.com)
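The idea in the figure can be sketched in a few lines of plain Python. This is a simplified illustration, not Parquet's byte-level encoding. Parquet stores the indices with a hybrid of run-length encoding and bit-packing, whereas this sketch uses simple (index, run length) pairs. The sample column values are hypothetical.

```python
def dictionary_encode(values: list[str]) -> tuple[list[str], list[int]]:
    """Replace each value with its position in a dictionary of distinct values."""
    dictionary: list[str] = []
    position: dict[str, int] = {}
    indices: list[int] = []
    for value in values:
        if value not in position:
            position[value] = len(dictionary)
            dictionary.append(value)
        indices.append(position[value])
    return dictionary, indices


def run_length_encode(indices: list[int]) -> list[tuple[int, int]]:
    """Collapse consecutive repeats into (index, run_length) pairs."""
    runs: list[tuple[int, int]] = []
    for index in indices:
        if runs and runs[-1][0] == index:
            runs[-1] = (index, runs[-1][1] + 1)
        else:
            runs.append((index, 1))
    return runs


def decode(dictionary: list[str], runs: list[tuple[int, int]]) -> list[str]:
    """Expand the runs and map indices back to the original values."""
    return [dictionary[index] for index, length in runs for _ in range(length)]


# Hypothetical column with a few distinct, repeated values
column = ["Retail", "Retail", "Retail", "Online", "Online", "Retail"]
dictionary, indices = dictionary_encode(column)
runs = run_length_encode(indices)

print(dictionary)  # ['Retail', 'Online']
print(indices)     # [0, 0, 0, 1, 1, 0]
print(runs)        # [(0, 3), (1, 2), (0, 1)]
assert decode(dictionary, runs) == column
```

The fewer distinct values a column has and the longer its runs of repeats, the more these two steps shrink it. This is why low-cardinality columns compress especially well in Parquet.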

Why Parquet? The Advantages of a Columnar Format

- Efficient Compression: Because similar values are stored together in each column, Parquet can achieve high compression ratios, which reduces storage space and speeds up data transfer. The Python script below converts a CSV file into Parquet files using different compression codecs and prints each file's size so the codecs can be compared. In every run, dictionary encoding is enabled only for the 'Type' column, and the other columns are written without it.

```python
import os

import pandas as pd

csv_path = 'syntax_file.csv'

# Load the CSV file
df = pd.read_csv(csv_path)

# Get the size of the CSV file
csv_size = os.path.getsize(csv_path)
print(f"Size of the CSV file '{csv_path}': {csv_size} bytes")

# Write the same data to Parquet with different compression codecs
compression_types = ['snappy', 'gzip', 'brotli', 'zstd']
for compression in compression_types:
    file_name = f'file_{compression}.parquet'
    # use_dictionary is passed through to pyarrow: dictionary-encode only 'Type'
    df.to_parquet(file_name, engine='pyarrow', compression=compression, use_dictionary=['Type'])
    # Get the size of the resulting Parquet file
    file_size = os.path.getsize(file_name)
    print(f"Size of the Parquet file '{file_name}': {file_size} bytes")
```

Output

```
Size of the CSV file 'syntax_file.csv': 783924 bytes
Size of the Parquet file 'file_snappy.parquet': 462097 bytes
Size of the Parquet file 'file_gzip.parquet': 246460 bytes
Size of the Parquet file 'file_brotli.parquet': 193447 bytes
Size of the Parquet file 'file_zstd.parquet': 212575 bytes
```

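Results depend on the characteristics of the input data. On this file, brotli produced the smallest output (193,447 bytes, about a quarter of the CSV size), followed by zstd and gzip. Snappy produced the largest file, since it is generally chosen for speed rather than maximum compression.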

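- Faster Query Performance: Queries that access only a subset of columns read just those columns' data, avoiding the overhead of reading irrelevant columns. The min/max statistics stored for each column chunk also let a reader skip entire row groups that cannot satisfy a filter.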

- Schema Evolution: Parquet supports schema evolution, which allows you to add new columns to your datasets without breaking existing queries.

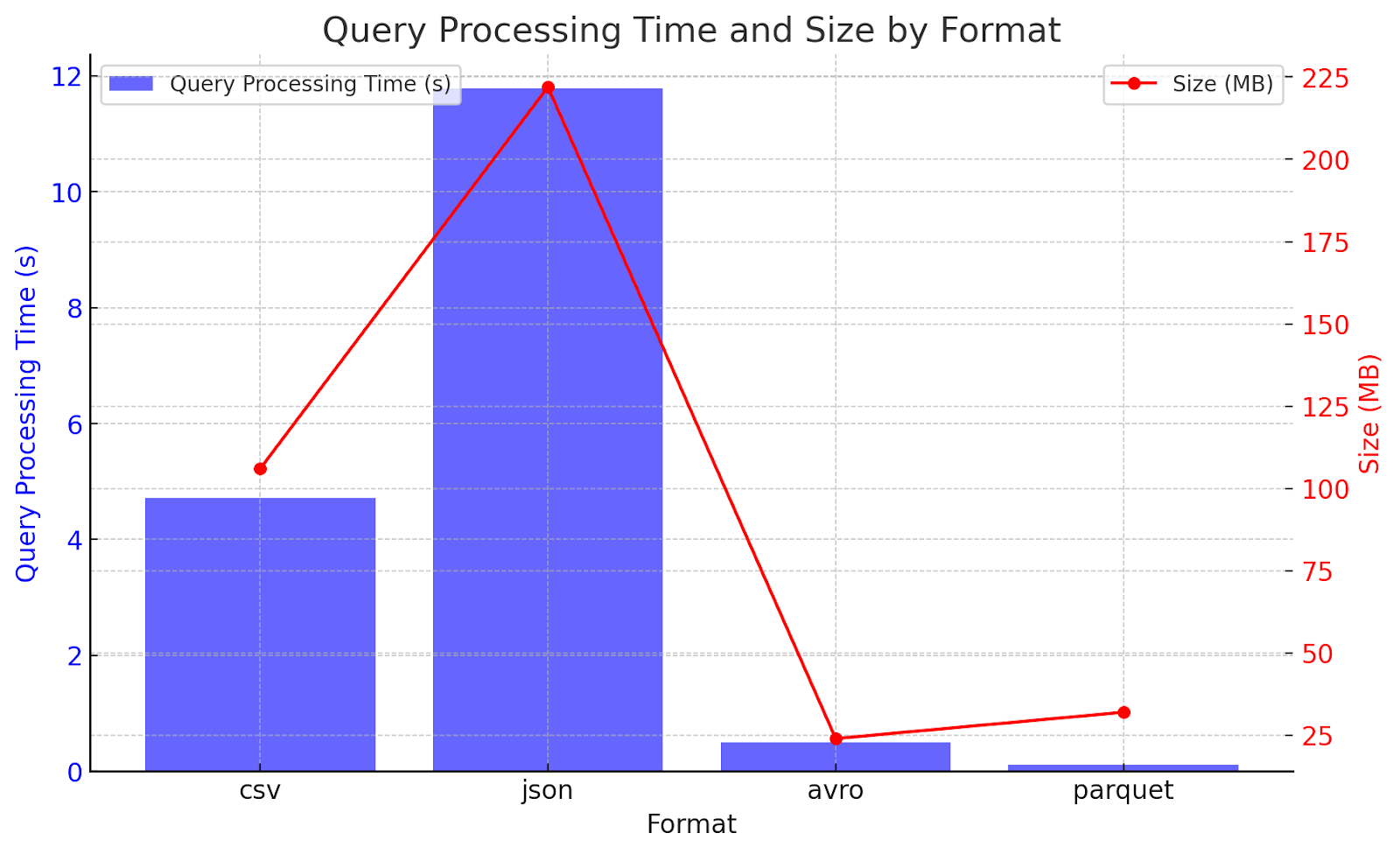

Comparative Performance Analysis of Data Storage Formats: Parquet vs. CSV, JSON, and Avro

Comparison of file formats based on query processing time and file size. The blue bars represent the query processing time in seconds, while the red lines represent the file size in megabytes (MB) for each format. Data Source: CSV vs Parquet vs JSON vs Avro – datacrump.com

This figure presents a comparative analysis of four data storage formats (Parquet, CSV, JSON, and Avro) evaluated across file size, read performance, write performance, and schema support. Parquet emerges as the most efficient format overall, with clear advantages in file size and schema support along with strong read and write performance. Avro also performs well, particularly in write performance and schema support. CSV is efficient to read but less effective in file size and schema support. JSON offers balanced performance, doing well in schema support but lagging behind in file size and write performance. Overall, Parquet and Avro stand out as the most robust formats across the evaluated criteria.

Getting Started with Parquet: Tools and Frameworks

- Apache Spark: Spark natively supports Parquet, making it easy to read and write Parquet files in distributed environments.

- Apache Hive: Hive can query Parquet files efficiently, especially for analytical workloads.

- Python and Pandas: With libraries like pyarrow and fastparquet, you can work with Parquet files in Python.

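```python
import pyarrow.parquet as pq

# Open the Parquet file written by the compression example
parquet_file = pq.ParquetFile('file_brotli.parquet')

# Print the file-level metadata (row count, number of row groups, writer, ...)
print(parquet_file.metadata)

# Print the Parquet schema
print(parquet_file.schema)
```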

Future of Parquet: What’s Next?

As data volumes increase, the demand for efficient storage and processing will become even more crucial. Parquet is positioned to play a vital role in supporting modern data lakes and analytics platforms. Ongoing enhancements and wider adoption suggest that Parquet will continue to be a significant component in the big data environment.

Conclusion

Parquet is not just another data format—it’s a game-changer in the way we store and process large datasets. Whether you’re dealing with big data analytics, data warehousing, or machine learning, understanding and leveraging the power of Parquet can significantly boost your data infrastructure’s performance and efficiency. Dive into Parquet, and you’ll find a powerful ally in your big data endeavors.


About the Author

Aswathi P V is a certified Data Scientist with credentials from IBM and ICT, holding a Master's degree in Machine Learning. She has authored international publications in the field of machine learning. With more than six years of teaching experience and two years as a Data Engineer at Founding Minds, she brings a wealth of expertise to both academic and professional settings. Beyond her technical prowess, she finds joy in creative activities like dancing and stitching and stays grounded through yoga and meditation.