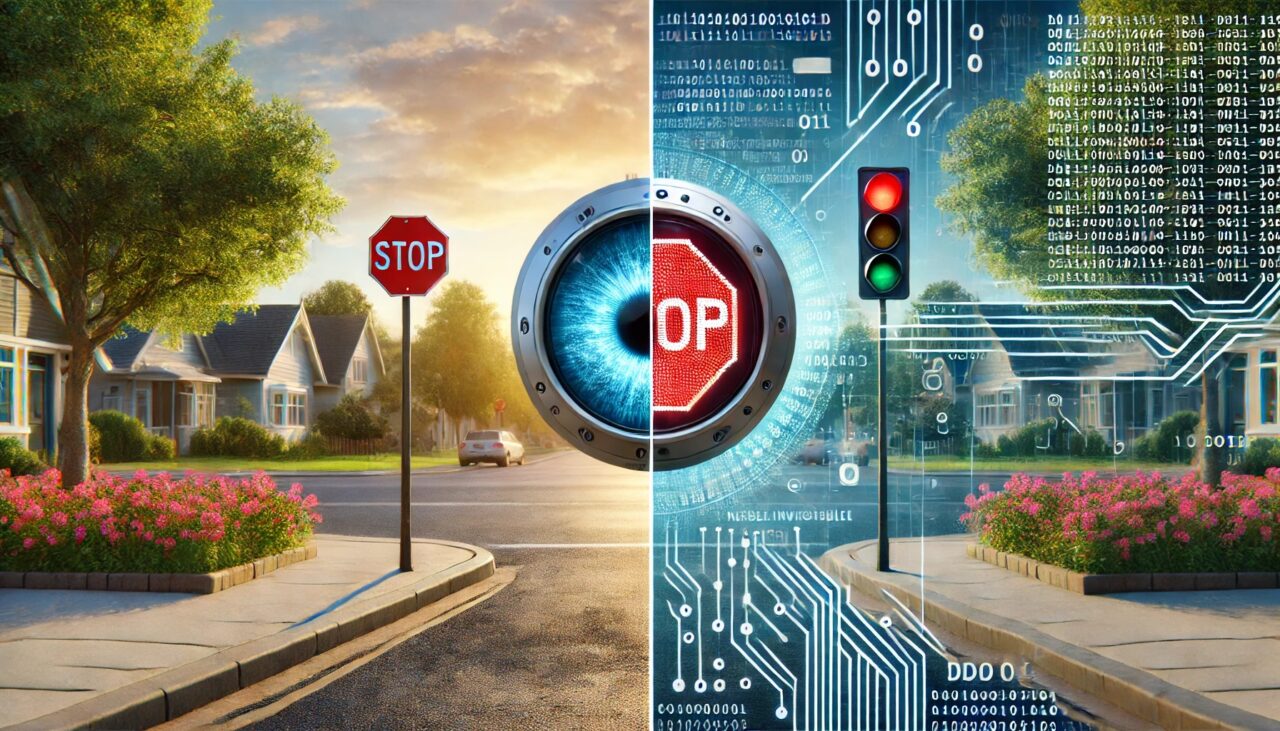

The need for robust AI defense mechanisms

In the rapidly evolving landscape of artificial intelligence, a critical challenge looms large: the need for robust AI defense mechanisms. The rise of adversarial attacks on AI has cast a shadow over the technology’s promising future, raising serious questions about trust and reliability. Can we truly rely on AI-powered diagnostic tools in healthcare? How can we ensure the fairness and accuracy of AI-driven hiring processes?

The economic implications of these challenges are staggering. Industries investing heavily in AI technologies risk massive losses if these systems prove unreliable or easily compromised. Moreover, the safety implications extend beyond financial considerations, potentially putting human lives at risk in critical applications like autonomous transportation or robotic surgery.

Before delving into the complexities of securing AI systems, it’s crucial to understand the risks posed by adversarial attacks. We recommend exploring our previous blog post, “Securing AI: The Need for Reliability and Safety,” which provides an overview of this pressing threat. In this post, we’ll explore the defense mechanisms, strategies, and innovations aimed at creating a more secure and trustworthy AI-powered future.

Layered Defense: A Multi-Pronged Approach

Securing AI systems against adversarial attacks requires a comprehensive, layered approach. Here are key strategies being developed and implemented:

Adversarial Training: Teaching AI to Punch Back

Imagine you’re training a boxer. You wouldn’t just have them practice punching a stationary bag, right? You’d put them in the ring with sparring partners who throw unexpected punches. That’s essentially what adversarial training does for AI – it toughens up our models by exposing them to the very attacks they might face in the wild. This method involves creating and using “adversarial examples” – deceptive inputs designed to fool AI systems.

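The process begins with generating these adversarial examples using various techniques. Popular methods include the Fast Gradient Sign Method (FGSM), which nudges every input feature by a small fixed amount in the direction that increases the model’s loss; Projected Gradient Descent (PGD), which repeats that step several times while keeping the total perturbation within a fixed budget; and the Carlini & Wagner (C&W) attack, an optimization-based method that searches for the smallest perturbation that changes the prediction. Together, these techniques create a diverse set of challenges for the AI to overcome.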
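To make the gradient-sign idea concrete, here is a minimal sketch of FGSM and PGD against a hand-written logistic regression model in plain Python. The weights, the input, and the budget `epsilon` are made-up values chosen for illustration; a real attack would target a trained network and obtain gradients from a deep learning framework.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(weights, bias, x):
    """Probability of class 1 under a logistic regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def input_gradient(weights, bias, x, y):
    """Gradient of the cross-entropy loss with respect to the input x.
    For logistic regression this is (p - y) * w."""
    p = predict_proba(weights, bias, x)
    return [(p - y) * w for w in weights]

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(weights, bias, x, y, epsilon):
    """One step: shift each feature by epsilon in the loss-increasing direction."""
    grad = input_gradient(weights, bias, x, y)
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

def pgd(weights, bias, x, y, epsilon, step_size, steps):
    """Repeated small steps, each followed by a projection back into the
    L-infinity ball of radius epsilon around the original input."""
    x_adv = list(x)
    for _ in range(steps):
        grad = input_gradient(weights, bias, x_adv, y)
        x_adv = [xa + step_size * sign(g) for xa, g in zip(x_adv, grad)]
        x_adv = [min(max(xa, xi - epsilon), xi + epsilon)
                 for xa, xi in zip(x_adv, x)]
    return x_adv

# Hypothetical model and input (true label 1), for illustration only.
weights, bias = [2.0, -1.0, 0.5], 0.0
x, y = [0.3, 0.1, 0.2], 1

x_fgsm = fgsm(weights, bias, x, y, epsilon=0.2)
x_pgd = pgd(weights, bias, x, y, epsilon=0.2, step_size=0.05, steps=10)

clean_is_class_1 = predict_proba(weights, bias, x) > 0.5
fgsm_is_class_1 = predict_proba(weights, bias, x_fgsm) > 0.5
```

In this toy setup the clean input is classified as class 1, while the FGSM input, which differs by at most 0.2 per feature, is pushed to the other side of the decision boundary. For a linear model PGD ends up at the same point as FGSM; the two differ on nonlinear models, where the gradient changes between steps.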

A crucial aspect of adversarial training is finding the right balance between clean and adversarial data. Too many adversarial examples can skew the training and hurt accuracy on normal inputs, while too few may not provide adequate protection. Advanced techniques even employ dynamic mixing, adjusting the ratio based on the model’s evolving vulnerabilities.

The core of adversarial training lies in the retraining process. Here, the AI model learns to handle both normal and perturbed data, with a dual objective of minimizing both the standard loss and the adversarial loss. This process makes the model’s predictions more stable when inputs carry subtle adversarial perturbations.
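The dual objective can be sketched as a weighted sum of the loss on a clean example and the loss on its adversarial counterpart. The snippet below is a minimal illustration on a tiny, made-up dataset with a logistic regression model; the function names, the mixing weight `adv_weight`, and all hyperparameters are assumptions for the example, not values from any production system.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, eps):
    """FGSM for logistic regression: the input gradient is (p - y) * w."""
    err = predict(w, b, x) - y
    return [xi + eps * sign(err * wi) for xi, wi in zip(x, w)]

def adversarial_train(data, epochs, lr, eps, adv_weight):
    """SGD on (1 - adv_weight) * clean_loss + adv_weight * adversarial_loss."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            x_adv = fgsm(w, b, x, y, eps)  # attack the current model
            grad_w, grad_b = [0.0] * len(w), 0.0
            for inp, share in ((x, 1.0 - adv_weight), (x_adv, adv_weight)):
                err = predict(w, b, inp) - y  # d(loss)/d(logit)
                grad_w = [g + share * err * xi for g, xi in zip(grad_w, inp)]
                grad_b += share * err
            w = [wi - lr * g for wi, g in zip(w, grad_w)]
            b -= lr * grad_b
    return w, b

def accuracy(w, b, data, eps=0.0):
    """Accuracy on clean inputs (eps=0) or on FGSM-perturbed inputs (eps>0)."""
    correct = 0
    for x, y in data:
        x_eval = fgsm(w, b, x, y, eps) if eps > 0 else x
        correct += int((predict(w, b, x_eval) > 0.5) == bool(y))
    return correct / len(data)

# Tiny illustrative dataset: two well-separated clusters.
data = [([1.0, 1.0], 1), ([-1.0, -1.0], 0), ([1.2, 0.8], 1),
        ([-1.1, -0.9], 0), ([0.8, 1.1], 1), ([-0.9, -1.2], 0)]

w, b = adversarial_train(data, epochs=50, lr=0.5, eps=0.3, adv_weight=0.5)
clean_acc = accuracy(w, b, data)
robust_acc = accuracy(w, b, data, eps=0.3)
```

Setting `adv_weight` to 0 recovers ordinary training, while 1 trains on adversarial examples only. A dynamic-mixing variant would change `adv_weight` between epochs, for example by raising it when `robust_acc` lags behind `clean_acc`.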

Adversarial training is an ongoing, iterative process. As the AI improves, researchers continually escalate the challenges, crafting more sophisticated adversarial examples. This creates a valuable feedback loop, where insights from each training round inform the next, allowing for focused attention on discovered vulnerabilities.

The impact of adversarial training is evident in real-world applications. Tech giants like Google and Microsoft employ these techniques to create resilient image recognition systems capable of accurate performance even when faced with clever manipulations.

Input Preprocessing and Reconstruction: The AI’s First Line of Defense

Input preprocessing refers to the techniques used to modify or clean data before it’s fed into an AI model. Think of it as a meticulous data sanitization process. Reconstruction, on the other hand, involves rebuilding or transforming the input data in a way that preserves its essential features while potentially removing harmful elements. This approach employs various techniques to enhance AI robustness:

- JPEG compression, to smooth out subtle adversarial perturbations.

- Feature squeezing, to reduce color depth and apply spatial smoothing.

- Total variation minimization, to filter out noise.

- Bit-depth reduction, to simplify pixel representation.

- Randomization, to add unpredictable noise to inputs.

These methods offer benefits such as simplicity, model-agnostic application (they sit in front of the model and usually require no retraining), and adaptive defense. Preprocessing techniques shine in various applications, including image classification systems like facial recognition, autonomous vehicles, malware detection, speech recognition systems, and medical imaging.
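Two of these techniques, bit-depth reduction and spatial (median) smoothing, are simple enough to sketch in plain Python. The example below treats an image as a nested list of grayscale values in the range 0 to 1; the function names and the tiny 3x3 "images" are illustrative assumptions, and a real pipeline would use an image library instead.

```python
from statistics import median

def reduce_bit_depth(image, bits):
    """Quantize pixel values in [0, 1] to 2**bits evenly spaced levels."""
    levels = 2 ** bits - 1
    return [[round(p * levels) / levels for p in row] for row in image]

def median_smooth(image, radius=1):
    """Replace each pixel with the median of its neighborhood,
    clipping the window at the image borders."""
    height, width = len(image), len(image[0])
    smoothed = []
    for r in range(height):
        row = []
        for c in range(width):
            window = [image[i][j]
                      for i in range(max(0, r - radius), min(height, r + radius + 1))
                      for j in range(max(0, c - radius), min(width, c + radius + 1))]
            row.append(median(window))
        smoothed.append(row)
    return smoothed

# A flat gray patch and a copy with small, adversarial-style perturbations.
clean = [[0.6, 0.6, 0.6], [0.6, 0.6, 0.6], [0.6, 0.6, 0.6]]
perturbed = [[0.62, 0.58, 0.61], [0.59, 0.60, 0.62], [0.58, 0.61, 0.59]]

# After squeezing to 3 bits, both patches map to the same quantized values.
same_after_squeeze = reduce_bit_depth(perturbed, 3) == reduce_bit_depth(clean, 3)

# A single outlier pixel is removed by median smoothing.
spiky = [[0.2, 0.2, 0.2], [0.2, 0.9, 0.2], [0.2, 0.2, 0.2]]
smoothed = median_smooth(spiky)
```

The design trade-off is visible even here: coarser quantization or a larger smoothing window removes more of the perturbation, but also discards more of the legitimate detail the model relies on.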

Ensemble Methods: Assembling AI’s Avengers for Enhanced Security

Ensemble methods in AI security are like assembling a team of superheroes, each with unique strengths, to combat adversarial threats. This approach harnesses the strength of multiple AI models, each contributing unique perspectives to create more robust predictions. By employing diverse voting mechanisms and aggregating predictions, ensemble methods boost overall confidence and resilience. The key to their effectiveness lies in diversity – combining different model architectures, varying training data and features, and applying an array of adversarial training techniques. Real-world applications span from facial recognition systems to malware detection. Despite the inherent challenges in implementation and management, ensemble methods stand as a cornerstone in enhancing AI security, forging more resilient systems capable of navigating the complexities of our digital landscape.
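As a rough sketch of the voting and aggregation idea, the snippet below combines three stand-in "models" with hard (majority) voting and soft (probability-averaging) voting. The three threshold rules and the probability vectors are hypothetical placeholders for independently trained classifiers.

```python
from collections import Counter

def majority_vote(labels):
    """Hard voting: return the label predicted by the most models."""
    return Counter(labels).most_common(1)[0][0]

def average_probabilities(prob_vectors):
    """Soft voting: average the class-probability vectors of all models."""
    n = len(prob_vectors)
    return [sum(column) / n for column in zip(*prob_vectors)]

# Three deliberately different stand-in classifiers (hypothetical rules).
def model_a(x):
    return int(x[0] > 0.5)

def model_b(x):
    return int(x[1] > 0.5)

def model_c(x):
    return int(x[0] + x[1] > 1.0)

# An input nudged just far enough to fool model_a but not the others.
x_adv = (0.45, 0.9)
votes = [model(x_adv) for model in (model_a, model_b, model_c)]
hard_label = majority_vote(votes)

# Soft voting over made-up per-model probabilities for classes [0, 1].
probs = [[0.6, 0.4], [0.2, 0.8], [0.3, 0.7]]
avg = average_probabilities(probs)
soft_label = avg.index(max(avg))
```

Here one model is fooled, yet both voting schemes still return class 1. This only helps when the models fail on different inputs, which is why diversity in architecture and training data matters.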

Defensive Distillation: Teaching AI to Stay Cool Under Pressure

Defensive distillation is an innovative technique in AI security that trains models to make more measured decisions when faced with adversarial attacks. The process involves training a “teacher” model, softening its output probabilities, and then using these to train a “student” model. This results in an AI system with smoother decision boundaries, making it more resilient to various attacks.
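The "softening" step is usually done by dividing the logits by a temperature before applying softmax. The sketch below shows that effect and the loss a student would minimize against the teacher's soft labels; the logits and the temperature of 20 are arbitrary illustrative values.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax with temperature; higher temperatures give flatter outputs."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature):
    """Cross-entropy between the teacher's softened probabilities
    and the student's probabilities at the same temperature."""
    soft_labels = softmax(teacher_logits, temperature)
    student_probs = softmax(student_logits, temperature)
    return -sum(t * math.log(s) for t, s in zip(soft_labels, student_probs))

# Hypothetical teacher logits for a three-class problem.
teacher_logits = [8.0, 2.0, 0.0]

hard = softmax(teacher_logits, temperature=1.0)   # nearly one-hot
soft = softmax(teacher_logits, temperature=20.0)  # much flatter

# A student that matches the teacher incurs a lower loss than one that disagrees.
matching = distillation_loss(teacher_logits, teacher_logits, temperature=20.0)
mismatched = distillation_loss([0.0, 2.0, 8.0], teacher_logits, temperature=20.0)
```

At temperature 1 the teacher puts almost all probability on one class, while at temperature 20 the probabilities are spread out, which gives the student information about how similar the classes are rather than a bare label.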

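The method was originally reported to be effective against gradient-based attacks, including iterative and norm-constrained variants, and to offer some protection against black-box attacks by making the model’s decision process less predictable. Later research, however, showed that stronger optimization-based attacks such as the Carlini & Wagner attack can circumvent it, so defensive distillation is best treated as one layer among several rather than a standalone safeguard.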

Advanced AI Defense Techniques: Staying Ahead of Adversarial Threats

As AI systems become increasingly prevalent, defending them against adversarial attacks is crucial. Three cutting-edge approaches are leading the charge in bolstering AI defenses:

- Certified Defenses: These provide provable guarantees about an AI model’s robustness. Techniques like randomized smoothing, convex relaxation, and interval bound propagation offer strong theoretical assurances.

- Generative Model-Based Defenses: Leveraging generative models, techniques such as Defense-GAN, MagNet, and PixelDefend aim to detect and mitigate adversarial examples by projecting or reforming inputs onto the legitimate data manifold.

- Detection and Rejection Methods: These focus on identifying and filtering out adversarial inputs. Approaches include feature squeezing, kernel density estimation, Bayesian uncertainty modeling, and ensemble detection methods.
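Of the certified techniques listed above, interval bound propagation is perhaps the easiest to sketch. The idea is to push a whole box of possible inputs (every point within `eps` of the original) through the network and check whether the true class still wins in the worst case. The two-layer network below is a tiny hypothetical example with hand-picked weights, not a trained model.

```python
def interval_linear(lower, upper, weights, bias):
    """Propagate the box [lower, upper] through y = W x + b."""
    out_lower, out_upper = [], []
    for row, b in zip(weights, bias):
        lo = hi = b
        for w, l, u in zip(row, lower, upper):
            if w >= 0:
                lo += w * l
                hi += w * u
            else:  # a negative weight swaps which end gives the bound
                lo += w * u
                hi += w * l
        out_lower.append(lo)
        out_upper.append(hi)
    return out_lower, out_upper

def interval_relu(lower, upper):
    """ReLU is monotone, so it can be applied to each bound separately."""
    return [max(0.0, l) for l in lower], [max(0.0, u) for u in upper]

def is_certified(x, eps, layers, true_class):
    """True if no input within eps (L-infinity) of x can change the prediction,
    according to the (possibly loose) interval bounds."""
    lower = [xi - eps for xi in x]
    upper = [xi + eps for xi in x]
    for index, (weights, bias) in enumerate(layers):
        lower, upper = interval_linear(lower, upper, weights, bias)
        if index < len(layers) - 1:
            lower, upper = interval_relu(lower, upper)
    return all(lower[true_class] > upper[k]
               for k in range(len(lower)) if k != true_class)

# Hypothetical 2-2-2 network: hidden layer followed by an identity output layer.
layers = [
    ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]),
    ([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]),
]
x = [1.0, 0.0]  # classified as class 0

small_budget_ok = is_certified(x, 0.1, layers, true_class=0)
large_budget_ok = is_certified(x, 0.6, layers, true_class=0)
```

With a budget of 0.1 the prediction is provably stable, while with 0.6 the check fails. A failed check does not by itself prove that an attack exists, because interval bounds can be loose; in this toy case, though, the input `[0.4, 0.6]` lies within the 0.6 budget and does change the predicted class.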

Adversarial Training in Action: Real-World AI Defense Success Stories

Adversarial training is making significant impacts in real-world AI applications. These notable examples showcase its effectiveness:

- Google’s Cloud Vision API: Google enhanced its Cloud Vision API’s resilience against adversarial attacks using Adversarial Logit Pairing. This improvement allows the system to maintain high accuracy in image recognition tasks even when faced with subtly perturbed inputs. This implementation demonstrates the importance of robust AI in consumer-facing products.

- Microsoft’s MNIST Challenge: Microsoft Research applied adversarial training to the classic MNIST dataset of handwritten digits. Using a multi-step process that gradually increased attack strength during training, they created a model with state-of-the-art robustness. The resulting model significantly outperformed models trained with standard methods, even on a seemingly simple dataset.

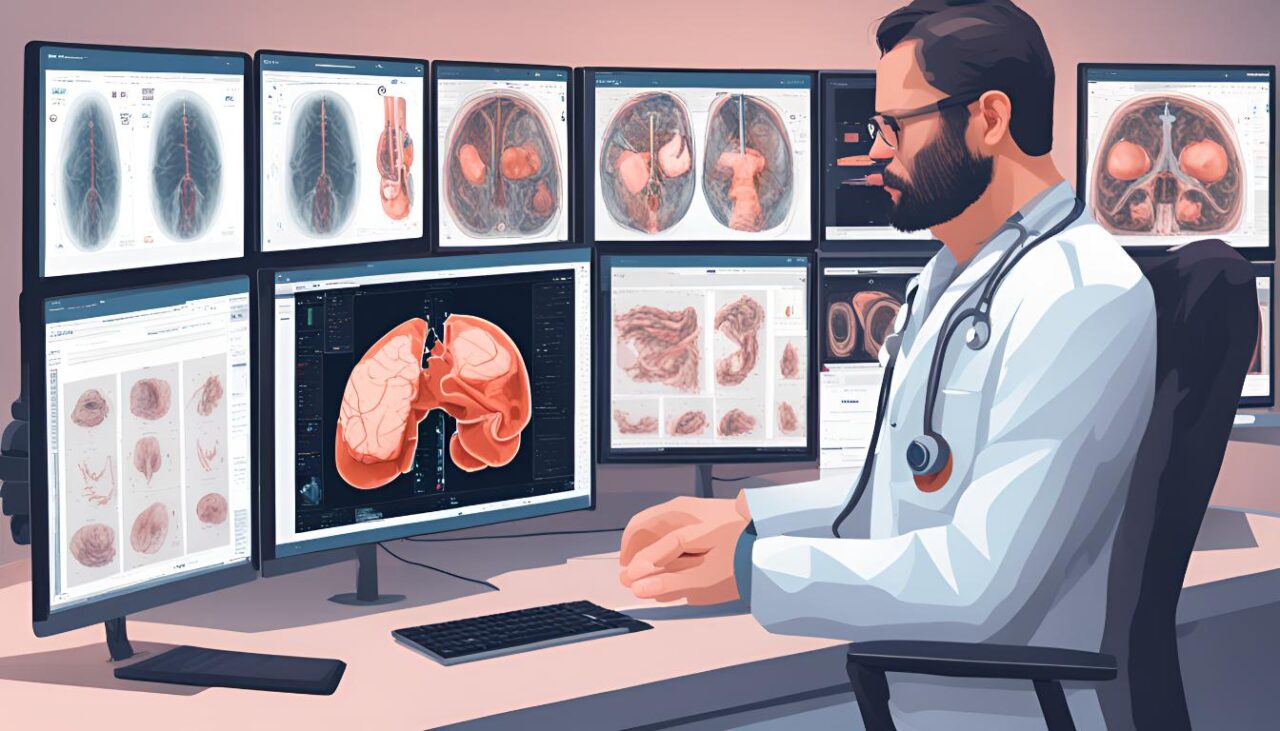

- Adversarial Training in Medical AI – Enhancing Cancer Detection Reliability: A groundbreaking collaboration between AI researchers and radiologists has demonstrated the potential of adversarial training in improving lung cancer detection. The project tackled a critical challenge: preventing subtle adversarial perturbations from causing false negatives in cancer diagnosis. Using YOPO (You Only Propagate Once), a computationally efficient variant of adversarial training, the team developed a model that maintained high accuracy while significantly improving robustness against potential attacks. This advancement is crucial in healthcare, where misdiagnoses can have life-threatening consequences.

Why Implementing AI Defenses Is More Complex Than You Think

AI security practitioners face significant challenges when implementing robust defenses against adversarial attacks. Real-world hurdles include high computational costs, scalability issues, and performance impacts. Striking a balance between enhancing model robustness and maintaining accuracy on unperturbed inputs remains a delicate task, with improvements in one area often coming at the expense of the other. The rapidly evolving nature of attacks further complicates defense efforts, creating an ongoing arms race between attackers and defenders.

To navigate these challenges, AI practitioners must adopt best practices that prioritize security from the outset of development. This includes implementing diverse defense strategies, conducting rigorous testing and validation, and staying informed about the latest developments in the field. Collaboration with cybersecurity experts, prioritizing data quality and security, embracing transparency, implementing robust monitoring systems, and planning for incident response are all crucial elements of a comprehensive AI security strategy.

Regulatory Considerations

Current and Proposed AI Security Regulations

- European Union’s AI Act: This comprehensive legislation aims to categorize AI systems based on risk levels and impose stricter requirements on high-risk applications. It includes provisions for cybersecurity and adversarial attack prevention.

- U.S. National AI Initiative Act: While not specifically focused on security, this act emphasizes the importance of developing trustworthy AI systems, which inherently include robust defense mechanisms.

- China’s New Generation Artificial Intelligence Development Plan: This strategy outlines the need for safe and controllable AI systems, including measures to prevent and mitigate security risks.

- ISO/IEC Standards: The International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC) are developing standards for AI security and risk management.

Ethical Implications of AI Defense Strategies

The implementation of AI defense strategies presents a complex ethical landscape that demands careful consideration. As organizations strive to protect their AI systems from potential attacks, they must navigate a delicate balance between security and individual rights. Privacy concerns arise when defense mechanisms require access to vast amounts of data, potentially infringing on personal information. The tension between transparency and security poses another challenge, as openness about defense strategies could inadvertently expose vulnerabilities. Ensuring fairness and avoiding bias in these protective measures is crucial to prevent discrimination against specific user groups. Additionally, the question of accountability in the event of a successful attack becomes more intricate with advanced defense systems in place. Perhaps most concerning is the dual-use potential of AI defense technologies, which could be repurposed for harmful intentions, raising ethical questions about their development and distribution.

Final Thoughts

Balancing AI innovation with security requires a multi-faceted approach. Incorporating “security by design” from the start mitigates potential conflicts. Collaboration among researchers, industry leaders, and policymakers is crucial to address both advancement and protection needs. Adaptive regulations help keep pace with rapid AI progress. Fostering awareness and education about AI security risks and best practices is essential for all stakeholders. This approach nurtures innovation while maintaining strong security commitments.

About the Author

Vishnu Prakash is a Quality Assurance professional at Founding Minds with over 7 years of experience in the meticulous testing of web and mobile applications. With a strong foundation in ensuring software excellence, he has developed a keen eye for identifying bugs, improving user experience, and enhancing product quality across diverse platforms. Beyond traditional testing, he is passionate about the integration of Artificial Intelligence (AI) and Machine Learning (ML) into the realm of software testing and development. Vishnu’s personal interests rev up around motorcycles and the excitement of discovering new destinations through travel.