What is MLOps?

Machine Learning Operations, or MLOps, is a crucial aspect of ML engineering that focuses on efficiently deploying, managing, and monitoring machine learning models in production environments. It merges machine learning practices with DevOps principles to create a streamlined workflow for developing, implementing, and maintaining ML models.

MLOps aims to ensure that models perform as intended, meet business objectives, and comply with regulatory standards. By implementing MLOps practices, organizations can enhance model quality, simplify management processes, and automate the deployment of complex ML and deep learning systems at scale. This approach bridges the gap between model development and practical application, enabling smoother integration of ML solutions into real-world business operations.

Why do we need MLOps?

As the adoption of Machine Learning (ML) continues to grow across industries, the need for a structured approach to managing the end-to-end ML lifecycle has become increasingly evident. MLOps addresses this need by offering several key benefits:

- Integration: MLOps unifies the entire ML workflow, from data preparation to model deployment and monitoring, streamlining previously disparate processes.

- Consistency: It ensures models perform reliably across different environments, reducing risks when transitioning from development to production.

- Scalability: As ML initiatives grow, MLOps manages increasing data volumes and model complexity, enabling organizations to meet real-world demands.

- Collaboration: MLOps fosters teamwork among data scientists, engineers, and business stakeholders. It establishes standardized practices, ensuring harmonized efforts across teams.

- Compliance: In regulated industries, MLOps helps maintain required standards and governance, ensuring ML models and deployments adhere to relevant regulations.

By adopting MLOps, organizations can improve model reliability, enable scalable deployments, and ensure compliance. This approach drives successful and sustainable implementation of machine learning initiatives, ultimately enhancing efficiency and effectiveness in ML operations.

A Clear Case for MLOps

It has been some time since the Digital Revolution hit us and the world started generating immense amounts of data through day-to-day living. Data is ever present and is being created, waiting to be utilized.

Techjury reports that in 2020, each person created about 1.7 MB of data every second. This massive increase in data provides numerous opportunities to explore new ideas, conduct experiments, and develop innovative machine-learning models.

However, the abundance of data doesn’t always ensure its use in ML applications. Many organizations are data-rich yet insight-poor. Even when utilized, success isn’t guaranteed.

According to a study by NewVantage Partners, only 15% of 70 leading enterprises have widely implemented AI in their operations. This suggests that many AI projects remain costly experiments that never generate real value. MLOps addresses this issue by helping companies deploy, monitor, and update AI models in production more easily, potentially leading to better returns on investment.

In essence, MLOps bridges the gap between AI experimentation and real-world impact. As data volumes surge, it serves as the catalyst that transforms raw potential into scalable, business-driving solutions.

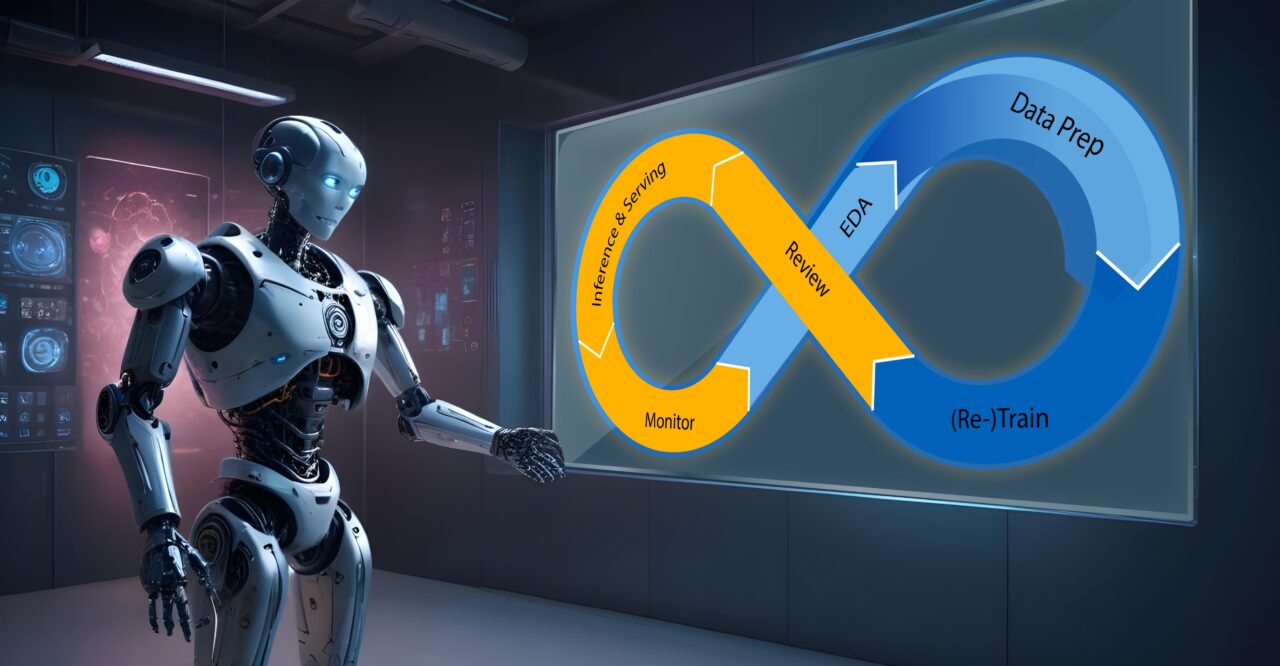

Key Phases of MLOps and Best Practices

MLOps can be adjusted to meet the needs of any machine learning project, whether it’s just focused on model deployment or involves managing the entire process from start to finish.

In most cases, enterprises implement MLOps principles across multiple stages, ensuring a seamless flow from data management to model operation. Let’s explore these steps and the associated best practices to ensure a smooth and successful MLOps adoption.

1. Exploratory Data Analysis (EDA): EDA is the crucial first step, where understanding data characteristics and patterns sets the foundation for modeling. The best practice here is to create reproducible, editable, and shareable datasets, tables, and visualizations, so that teams can collaborate and refine their exploration and data preparation iteratively.

2. Data Preparation and Feature Engineering: This stage involves cleaning data and creating new features to enhance model performance. The best practice is to iteratively refine data, creating and sharing features across teams using a feature store, promoting consistency and reusability throughout the ML lifecycle.

3. Model Training and Tuning: This stage is the cornerstone of the process, where machine learning models are developed and optimized. The best practice is to use open-source libraries like scikit-learn and Hyperopt, or to leverage AutoML tools, to create and improve models efficiently.

4. Model Review and Governance: This stage is essential for assessing models for accuracy, fairness, and compliance. The best practice is to track model lineage and versions, manage artifacts, and facilitate collaboration using open-source MLOps platforms like MLflow, ensuring traceability and enabling cross-team coordination.

5. Model Inference and Serving: Deploying models to generate predictions in production is the next step, known as Model Inference and Serving. Best practice suggests managing model refresh frequencies and inference times, and employing CI/CD tools to automate the pre-production pipeline, ensuring seamless and reliable model deployment.

6. Model Monitoring: Continuous monitoring tracks model performance and data drift so that models stay effective in production. The best practice is to automate permissions and cluster creation for productionizing models, enable REST API endpoints for easy access, and track performance and drift metrics so that issues are detected and mitigated early.

7. Automated Model Retraining: The final stage of Automated Model Retraining involves updating models with new data to maintain accuracy. The best practice is to implement alert systems and automation to address model drift caused by discrepancies between training and inference data, ensuring that models remain current and aligned with evolving business requirements.
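The drift check behind stages 6 and 7 can be sketched in a few lines. The example below is a minimal illustration, not a reference implementation: it uses the Population Stability Index (PSI) as the drift measure, which is one common choice among several, and the function names, the 10-bucket split, and the 0.2 alert threshold are all assumptions made for this sketch.

```python
import math
from typing import Sequence


def population_stability_index(expected: Sequence[float],
                               actual: Sequence[float],
                               bins: int = 10) -> float:
    """PSI between a training-time sample and a live sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    # Fall back to a width of 1.0 when every training value is identical.
    width = (hi - lo) / bins or 1.0

    def bucket_shares(values: Sequence[float]) -> list:
        counts = [0] * bins
        for v in values:
            # Clamp out-of-range live values into the first or last bucket.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) for empty buckets.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = bucket_shares(expected), bucket_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def needs_retraining(train_sample: Sequence[float],
                     live_sample: Sequence[float],
                     threshold: float = 0.2) -> bool:
    """Flag drift when PSI exceeds the threshold (0.2 is an assumed rule of thumb)."""
    return population_stability_index(train_sample, live_sample) > threshold
```

In a real pipeline, a scheduled job would run a check like this per feature and raise an alert or trigger a retraining run when it returns `True`.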

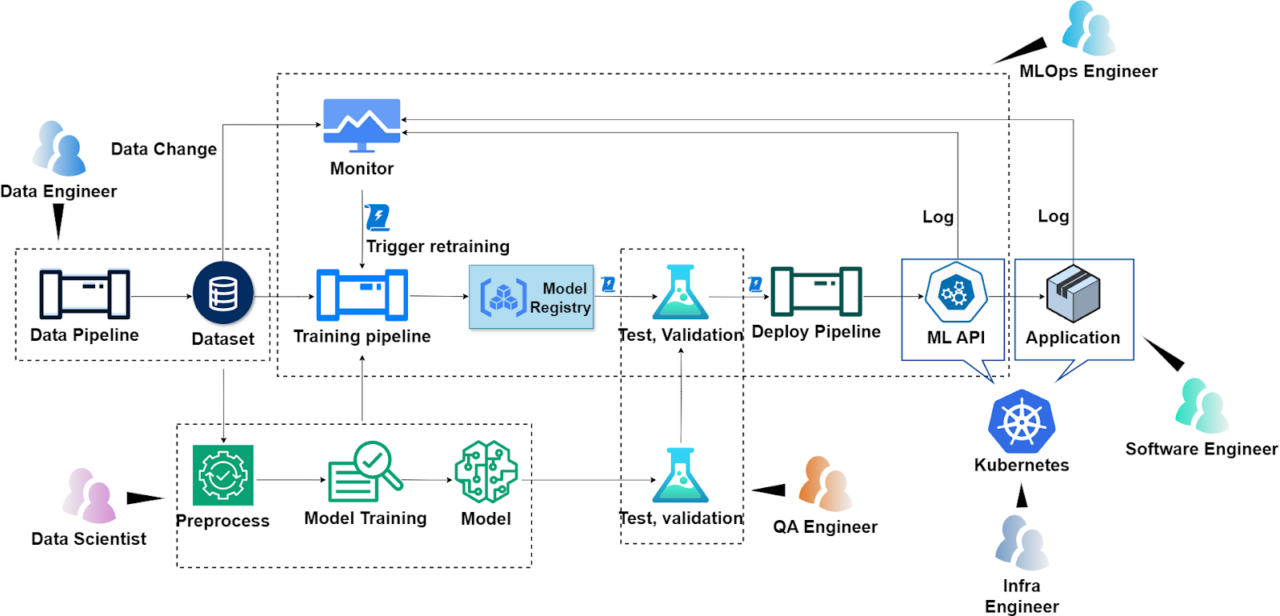

Example architecture of a fully automated MLOps retraining pipeline

How To Implement MLOps In Your Organization?

Implementing MLOps in an organization involves several key steps: establishing version control, automating CI/CD processes, monitoring model performance, and implementing model governance. Let’s look at each of them in turn.

Establishing version control

Version control is essential in ML projects, extending beyond code to include data and models. This broader approach ensures reproducibility and manages the complexities of experimentation. It allows multiple collaborators to work simultaneously while maintaining the integrity of production assets. Version control in MLOps helps track changes, manage iterations, and facilitate collaboration across the entire ML lifecycle.

Several open-source tools are available for versioning data, models, and pipelines in machine learning projects. A few of them are listed below:

- DVC (Data Version Control): An open-source tool for versioning data, models, and pipelines. It’s lightweight and easily integrates with other tools.

- Pachyderm: An open-source data platform built on Docker and Kubernetes. It focuses on version control and automated, parallelized workloads.

- MLflow: A popular open-source tool that covers various aspects of the ML lifecycle, including process and experiment versioning. It’s known for its broad integration capabilities.

- Git LFS (Large File Storage): A Git extension developed by Atlassian, GitHub, and others. It enables the handling of large files within Git repositories, useful for sharing big data files and models while leveraging Git’s versioning capabilities.
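To make the idea of versioning data alongside code more concrete, here is a minimal sketch of the general technique: fingerprint a data file by its content and store that fingerprint in a small pointer file that is light enough to commit to Git. This is an illustration only and does not reproduce how any of the tools above work internally; the function names and the `.meta.json` suffix are hypothetical.

```python
import hashlib
import json
from pathlib import Path


def fingerprint(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks to bound memory use."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def pointer_path(data_path: Path) -> Path:
    """Hypothetical naming scheme: train.csv -> train.csv.meta.json."""
    return data_path.with_name(data_path.name + ".meta.json")


def write_pointer(data_path: Path) -> Path:
    """Record the file's name, size, and content hash in a small JSON pointer file."""
    pointer = pointer_path(data_path)
    pointer.write_text(json.dumps({
        "path": data_path.name,
        "sha256": fingerprint(data_path),
        "size": data_path.stat().st_size,
    }, indent=2))
    return pointer


def is_unchanged(data_path: Path) -> bool:
    """Compare the file's current hash with the one recorded in its pointer file."""
    recorded = json.loads(pointer_path(data_path).read_text())
    return recorded["sha256"] == fingerprint(data_path)
```

Committing the pointer file instead of the data keeps the repository small while still tying each code revision to an exact version of the dataset.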

Automating CI/CD processes

Implementing CI/CD for ML pipelines automates the build, testing, and deployment of ML systems. This streamlines workflow, ensures efficiency, and minimizes errors. Popular platforms for CI/CD in MLOps include:

- CML (Continuous Machine Learning): Designed for ML projects, automates model training, evaluation, and dataset management.

- GitHub Actions: Versatile automation tool with strong community support and extensive marketplace.

- GitLab CI/CD: Provides a robust environment for automating the entire ML lifecycle.

- Jenkins: Widely used with extensive plugin support, suitable for complex ML pipelines.

- CircleCI: Offers cloud-based services with flexible configurations, good for custom ML workflows.

These platforms help organizations implement efficient, automated ML pipelines from development to production.
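Whichever platform is used, a typical ML-specific pipeline step is a quality gate that blocks deployment when a newly trained model underperforms. The sketch below shows one possible shape for such a gate, assuming the training job writes its metrics to a JSON file with an `accuracy` key; the function names, file layout, and thresholds are hypothetical.

```python
import json
from pathlib import Path


def check_candidate(candidate: dict, baseline: dict,
                    min_accuracy: float = 0.80,
                    max_regression: float = 0.01) -> list:
    """Return a list of failure messages; an empty list means the gate passes."""
    failures = []
    if candidate["accuracy"] < min_accuracy:
        failures.append(
            f"accuracy {candidate['accuracy']:.3f} is below the floor {min_accuracy:.3f}")
    drop = baseline["accuracy"] - candidate["accuracy"]
    if drop > max_regression:
        failures.append(f"accuracy dropped {drop:.3f} versus the production baseline")
    return failures


def run_gate(candidate_path: str, baseline_path: str) -> int:
    """Load two metrics files and return a process exit code (0 = pass, 1 = fail)."""
    candidate = json.loads(Path(candidate_path).read_text())
    baseline = json.loads(Path(baseline_path).read_text())
    failures = check_candidate(candidate, baseline)
    for message in failures:
        print(f"GATE FAILED: {message}")
    return 1 if failures else 0
```

A CI job could call `sys.exit(run_gate(...))` with the paths of its own metrics files, so that a non-zero exit code stops the pipeline before the deployment stage.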

Monitoring model performance and logging

Continuous monitoring of model performance is crucial for detecting issues and maintaining optimal accuracy. Logging captures performance data, enabling debugging and troubleshooting.

Open-source tools for monitoring and managing ML models offer various functionalities, from basic monitoring to comprehensive observability and troubleshooting in production environments.
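As a rough illustration of how monitoring and logging fit together, the sketch below logs every labelled prediction as a JSON record and tracks accuracy over a rolling window, raising a warning when it falls below a threshold. It uses only the Python standard library; the class name, logger name, window size, and alert threshold are assumptions for this example rather than part of any particular monitoring tool.

```python
import json
import logging
from collections import deque

logger = logging.getLogger("model_monitor")  # hypothetical logger name


class RollingAccuracy:
    """Tracks accuracy over the most recent labelled predictions."""

    def __init__(self, window: int = 500, alert_below: float = 0.85):
        self.outcomes = deque(maxlen=window)  # old outcomes fall off automatically
        self.alert_below = alert_below

    def record(self, prediction, actual) -> float:
        """Log one labelled prediction and return the current rolling accuracy."""
        self.outcomes.append(prediction == actual)
        accuracy = sum(self.outcomes) / len(self.outcomes)
        # One JSON object per record keeps the log easy to parse when debugging.
        logger.info(json.dumps({
            "prediction": prediction,
            "actual": actual,
            "rolling_accuracy": round(accuracy, 4),
        }, default=str))
        if self.is_alerting():
            logger.warning("rolling accuracy %.3f is below %.3f",
                           accuracy, self.alert_below)
        return accuracy

    def is_alerting(self) -> bool:
        """Alert only once the window is full, to avoid noise from tiny samples."""
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return sum(self.outcomes) / len(self.outcomes) < self.alert_below
```

Ground-truth labels often arrive later than predictions, so in practice `record` would be called from a job that joins predictions with their eventual outcomes.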

Implementing model governance

Model governance involves setting up policies and procedures for the development, deployment, and maintenance of Machine Learning (ML) models. It ensures models are robust, compliant, and produce reproducible results, while managing risks like bias, security breaches, and performance issues. Model governance includes tracking model origin, transformations, and history.

To effectively implement model governance, organizations should assess their needs and choose tools that automate the process. Essential features include role-based access control, audit logs, and model lineage tracking.
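The three features named above can be illustrated with a toy in-memory model registry. This is a simplified sketch under stated assumptions, not a description of how any governance product works: the roles, permissions, class names, and fields are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping (role-based access control).
PERMISSIONS = {
    "data_scientist": {"register"},
    "ml_engineer": {"register", "promote"},
    "auditor": set(),
}


@dataclass
class ModelVersion:
    name: str
    version: int
    data_hash: str    # lineage: which dataset the model was trained on
    code_commit: str  # lineage: which code revision produced it
    stage: str = "staging"


@dataclass
class Registry:
    models: dict = field(default_factory=dict)
    audit_log: list = field(default_factory=list)

    def _authorize(self, user: str, role: str, action: str) -> None:
        allowed = action in PERMISSIONS.get(role, set())
        # Every attempt is logged, including the ones that are denied.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user, "role": role, "action": action, "allowed": allowed,
        })
        if not allowed:
            raise PermissionError(f"role {role!r} may not {action}")

    def register(self, user: str, role: str, name: str,
                 data_hash: str, code_commit: str) -> ModelVersion:
        self._authorize(user, role, "register")
        version = 1 + sum(1 for (n, _) in self.models if n == name)
        model = ModelVersion(name, version, data_hash, code_commit)
        self.models[(name, version)] = model
        return model

    def promote(self, user: str, role: str, name: str, version: int) -> None:
        self._authorize(user, role, "promote")
        self.models[(name, version)].stage = "production"
```

Recording denied attempts alongside successful ones is a deliberate choice here, since an audit trail is most useful when it shows who tried to do what, not only what succeeded.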

Some tools for model governance include:

- DataRobot: Comprehensive platform with role assignment, access control, audit logs, and model lineage tracking.

- Dataiku: Supports governance with monitoring, drift detection, and permissions management.

- Domino Data Lab: Facilitates monitoring, reproducibility, and security with strong collaboration features.

- Datatron: Focuses on bias detection, anomaly detection, and model explainability.

- neptune.ai: Provides full model lineage, artifact tracking, and version control.

- Weights & Biases: Offers experiment tracking with personalized dashboards and auditability.

Key considerations for successful MLOps include having a clear ML strategy aligned with business goals, investing in robust infrastructure, and continuously improving models through monitoring and feedback. These steps ensure a sustainable MLOps implementation and maximize the impact of ML initiatives.

Conclusion: Unlocking the Full Potential of Machine Learning

As the focus shifts from ML inception to successful project execution, MLOps has emerged as a game-changer. These practices help organizations bridge the gap between experimentation and real-world application, enabling streamlined workflows, enhanced model reliability, and scalable deployments.

With exponential data growth, the importance of MLOps will only increase. By embracing these practices, companies can overcome historical challenges, such as high failure rates and ineffective model operationalization. The future of MLOps looks brighter than ever, with innovative solutions on the horizon that promise to revolutionize the way organizations harness the power of machine learning.

Looking ahead, rapid ML adoption across industries is expected, integrating personalized experiences and optimized operations. Supported by robust MLOps frameworks, organizations can confidently leverage the full potential of machine learning to transform ideas into reality and unlock new avenues for growth. The possibilities are truly endless as we enter a new era of machine learning-driven innovation.

About the Author

Vishnu is a Software Engineer at Founding Minds, specializing in software development and machine learning. With a background in customer support and data science, he combines technical expertise with business acumen. At present, Vishnu contributes to strategic initiatives, offering valuable insights that help the team drive innovative solutions. Vishnu’s multifaceted experience allows him to make critical contributions across various aspects of Founding Minds’ operations.