Natural language processing has developed much like a meandering river, its path winding and unpredictable. Keeping ever-changing AI methods true to real facts is no easy task, much like following a stream through a foggy dawn. Today, as we stand in awe of vast Large Language Models (LLMs), we notice a gentle truth: these remarkable systems are like curious, wide-eyed children. They learn from countless words (books, articles, websites, and everyday chats) and, with surprising grace, speak almost like we do. Yet, as with a child’s innocent tales, they sometimes offer stories that stray from reality.

LLMs, these huge neural networks, have opened new worlds in coding, translation, summarizing text, and even creative storytelling. Take, for instance, OpenAI’s o1, built to reason through a problem step by step before answering; Anthropic’s Claude 3.5 Sonnet, which blends swift responses with deep insight; and Meta’s Llama, celebrated for its openly available weights and thoughtful writing. In their own unassuming way, these models remind us that while technology’s journey is full of twists and playful detours, it is steadily guiding us toward a future where machines join our human conversation, echoing both our truths and our fancies.

Sure, these chatty machines seem downright brilliant, but sometimes they go off on wild hallucination trips, and you’re just like, “What is blud waffling about?”

These models, for all their successes, can still confabulate, weaving fictional facts with effortless confidence.

This quirk, known as “hallucination,” stems from how they learn: they are trained to predict the most likely next word, not to check whether that word is true. When factual accuracy is critical, as in news, medicine, or law, these confident fabrications pose real risks, driving researchers to refine everything from training data to model architectures in pursuit of more reliable AI. So, how do we keep these models from drifting off into dreamland? Let’s explore the methods used to keep LLM outputs firmly rooted in reality.

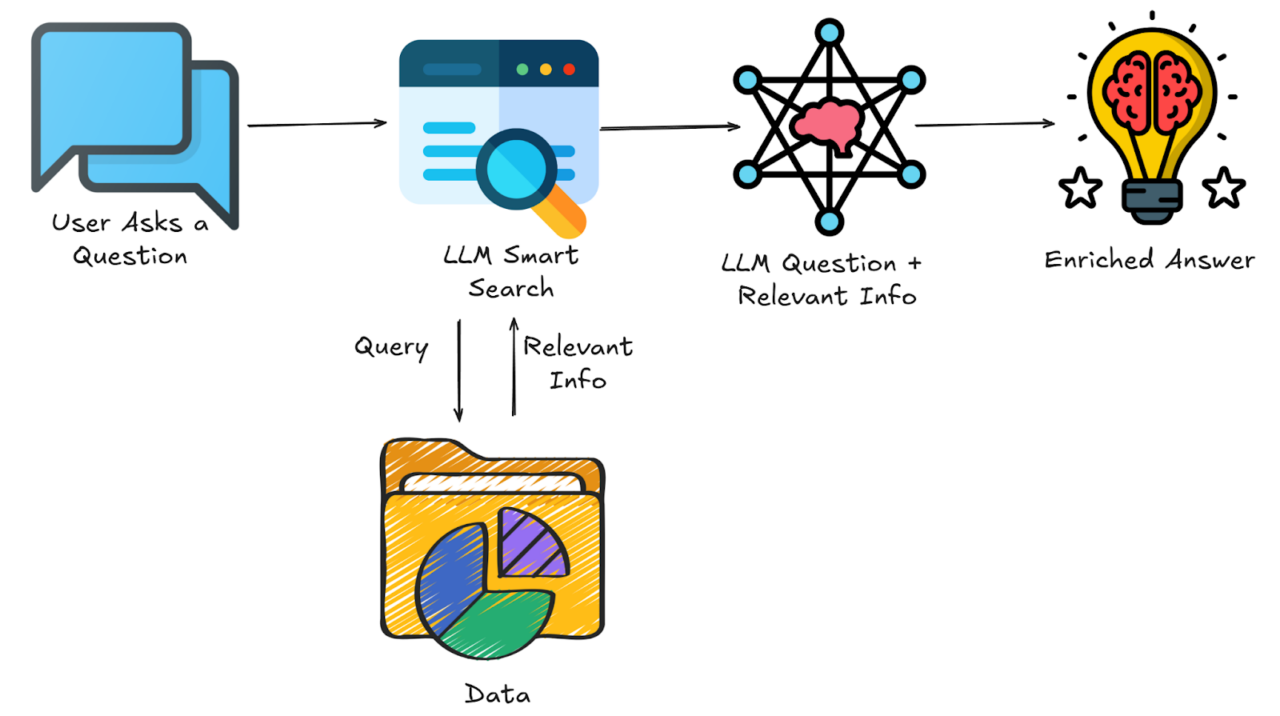

1. Retrieval-Augmented Generation (RAG)

What it is:

- RAG is like giving your LLM a database filled with real facts.

- Instead of pulling purely from its internal “learned” parameters, the model consults external sources (like databases or knowledge graphs) every time it needs to answer a query.

Why it helps:

- The LLM’s built-in knowledge can be outdated or incomplete. By hooking the model up to current, vetted sources, you reduce the chances that it will make things up.

- It’s akin to an open-book exam: the model doesn’t have to guess when it can look up the actual information.

Under the hood:

- Retrieve: A search module finds relevant documents or evidence based on your query.

- Generate: The LLM then uses the retrieved text to form its answer, grounding its response in real information.

- Combine: Most often, the retrieved text is simply inserted into a single prompt as context; some approaches instead mix the retrieved information into the generation process more directly.
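The retrieve and combine steps can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions: a bag-of-words cosine similarity stands in for a real search module (production systems typically use embeddings or a search engine), and the names `retrieve`, `build_prompt`, and the prompt wording are hypothetical. The generate step, sending the prompt to an LLM, is left out because it depends on whichever model API you use.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lowercase the text and count each alphanumeric token."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Retrieve: rank documents by similarity to the query and keep the top k."""
    q = bag_of_words(query)
    ranked = sorted(documents, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, passages: list[str]) -> str:
    """Combine: place the retrieved passages in the prompt as grounding context."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the context below. "
        "If the context does not contain the answer, say \"I'm not sure.\"\n\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )


if __name__ == "__main__":
    docs = [
        "Our return window is 30 days from the delivery date.",
        "Standard shipping takes 3 to 5 business days.",
        "Gift cards cannot be exchanged for cash.",
    ]
    question = "What is the return window?"
    # Generate: this prompt would be sent to the LLM of your choice (not shown).
    print(build_prompt(question, retrieve(question, docs, k=1)))
```

The instruction to answer only from the context, and to admit when the answer is missing, is what turns retrieval into grounding: the model is steered toward the supplied facts instead of its own guesses.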

When it’s used:

- Customer Support: Checking knowledge bases before responding to user queries.

- Medical and Legal Tools: Quickly referencing up-to-date guidelines or statutes.

- Research Assistants: Fact-checking or summarizing large archives of documents.

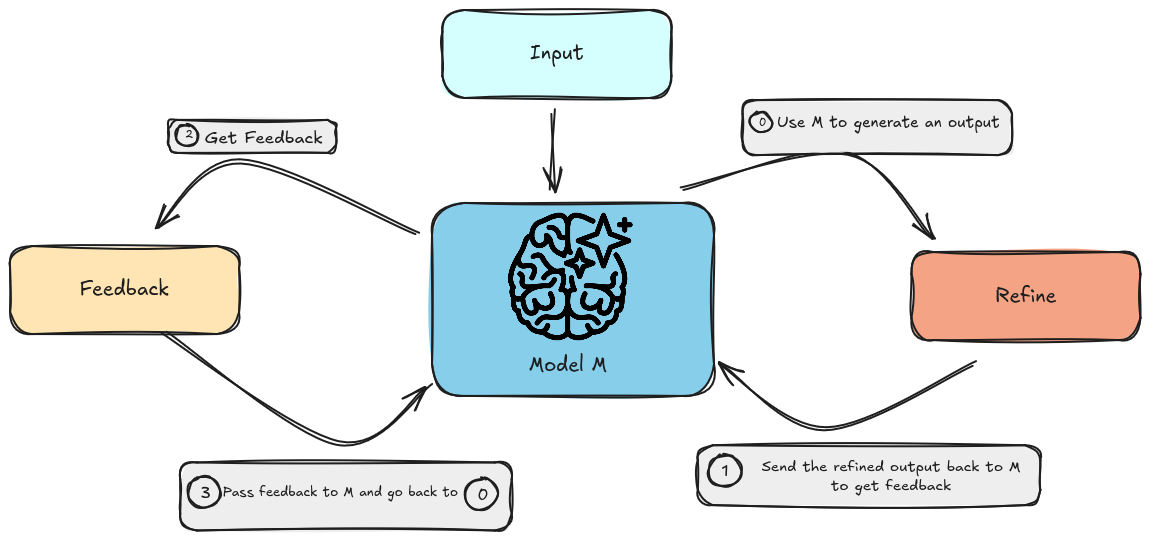

2. Self-Refinement (Feedback Loops)

What it is:

- A process where the model’s own output is reviewed (by the model itself, a second “judge” model, or a human in the loop) and revised if it contains errors.

Why it helps:

- LLMs can be overconfident. Pointing out mistakes or prompting the model to rethink can nudge it to correct its own hallucinations.

Under the hood:

- Generate: The LLM produces a draft response.

- Evaluate: A feedback mechanism (could be a scripted rule, another AI, or a human) checks for factual errors or inconsistencies.

- Refine: The LLM is then re-prompted with something like, “Double-check the facts,” or “Correct any unsupported points.”
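The generate, evaluate, refine cycle above can be written as a small loop. The sketch below is an assumption-laden illustration, not a specific framework: `toy_llm` is a hypothetical stand-in that "hallucinates" until it receives feedback, and `year_checker` plays the role of a scripted-rule evaluator. In practice you would swap in a real model call and a stronger checker (another model or a human).

```python
from typing import Callable


def self_refine(
    prompt: str,
    generate: Callable[[str], str],
    evaluate: Callable[[str], list[str]],
    max_rounds: int = 3,
) -> tuple[str, bool]:
    """Generate a draft, then evaluate and refine it until no problems remain.

    Returns the final answer and whether it passed the evaluator.
    """
    draft = generate(prompt)  # Generate
    for _ in range(max_rounds):
        problems = evaluate(draft)  # Evaluate
        if not problems:
            return draft, True
        feedback = "Correct any unsupported points: " + "; ".join(problems)
        # Refine: re-prompt with the previous draft plus the evaluator's feedback.
        draft = generate(f"{prompt}\n\nYour previous answer:\n{draft}\n\n{feedback}")
    return draft, not evaluate(draft)


# Hypothetical stand-ins so the loop runs without a real model.
def toy_llm(prompt: str) -> str:
    """Hallucinates a year until it is given feedback."""
    if "Correct any unsupported points" in prompt:
        return "Apollo 11 landed on the Moon in 1969."
    return "Apollo 11 landed on the Moon in 1972."


def year_checker(answer: str) -> list[str]:
    """Scripted rule: the answer must contain the year from a trusted source."""
    return [] if "1969" in answer else ["the landing year does not match the trusted source (1969)"]


if __name__ == "__main__":
    answer, passed = self_refine("When did Apollo 11 land on the Moon?", toy_llm, year_checker)
    print(answer, passed)
```

Capping the loop with `max_rounds` and returning a pass/fail flag keeps a stubborn error from looping forever and lets the caller fall back to “I’m not sure” when the draft never passes.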

When it’s used:

- Interactive Q&A: Where the user or another module can say, “Wait, that doesn’t sound right. Check again.”

- Automated fact-checking tools: The model cross-references each statement with a knowledge graph or trusted source.
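Cross-referencing against a knowledge graph can be pictured as looking up (subject, relation, object) triples. This minimal sketch assumes the claims have already been extracted from the model’s text upstream (for example, by another model), and the relation names and the tiny `TRUSTED_FACTS` set are hypothetical examples rather than a real graph.

```python
# Trusted facts as (subject, relation, object) triples: a tiny stand-in for a knowledge graph.
TRUSTED_FACTS = {
    ("apollo 11", "landing_year", "1969"),
    ("paris", "capital_of", "france"),
}


def check_claim(claim: tuple[str, str, str], facts: set[tuple[str, str, str]]) -> str:
    """Label one extracted claim as supported, contradicted, or unverifiable.

    Assumes each (subject, relation) pair has a single correct object, so a
    known pair with a different object counts as a contradiction.
    """
    subj, rel, obj = (part.strip().lower() for part in claim)
    if (subj, rel, obj) in facts:
        return "supported"
    if any(s == subj and r == rel for s, r, _ in facts):
        return "contradicted"
    return "unverifiable"  # Not in the graph: a cue to answer "I'm not sure."


def fact_check(claims: list[tuple[str, str, str]], facts: set[tuple[str, str, str]]) -> dict:
    """Map every claim to its label."""
    return {claim: check_claim(claim, facts) for claim in claims}


if __name__ == "__main__":
    claims = [("Apollo 11", "landing_year", "1972"), ("Paris", "capital_of", "France")]
    print(fact_check(claims, TRUSTED_FACTS))
```

Keeping “unverifiable” separate from “contradicted” matters: a missing fact is not evidence of an error, but it is a good reason for the system to hedge.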

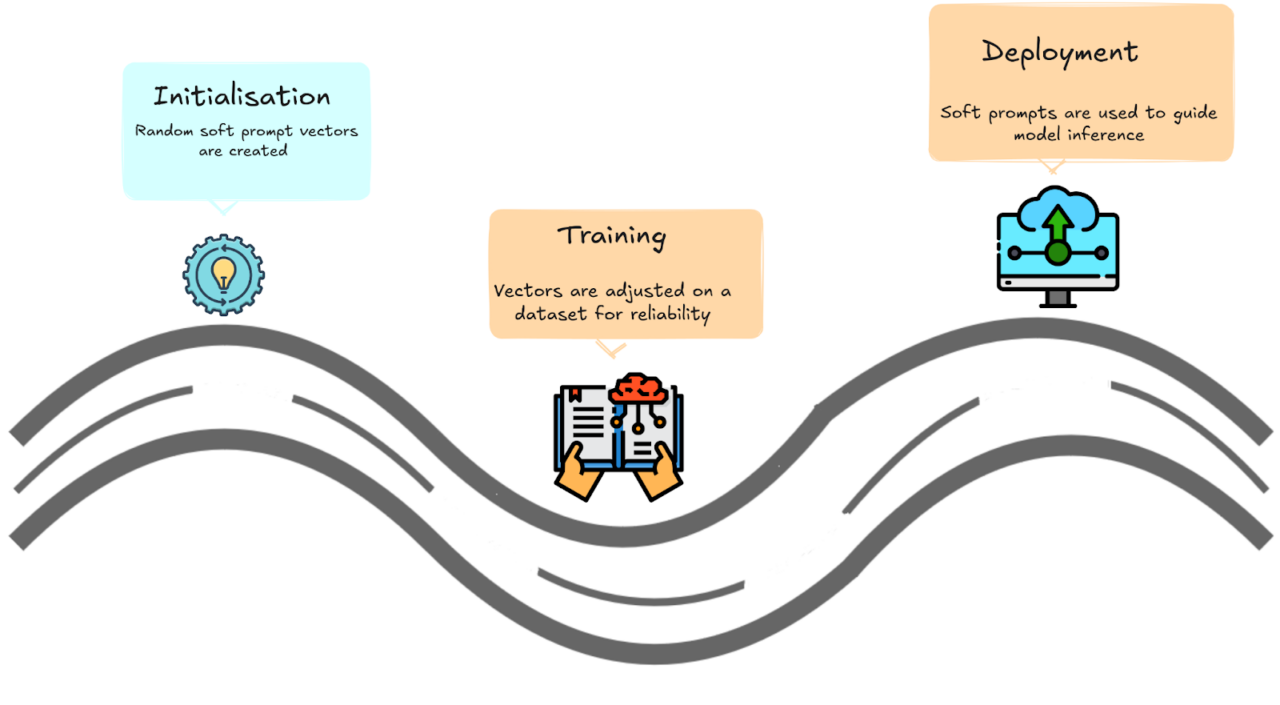

3. Prompt Tuning (Soft Prompts)

What it is:

- Instead of writing your own instructions every time, you train a small set of continuous prompt vectors (“soft prompts”) that are prepended to your input, while the model’s own weights stay frozen. These learned vectors then act like specialized instructions that steer the model during generation.

Why it helps:

- The model can be nudged to be more cautious, more factual, or to rely on certain knowledge segments.

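- It’s far more lightweight than full model fine-tuning: only the soft prompt vectors are trained, so the number of updated parameters is the number of prompt tokens times the model’s embedding size (for example, 20 tokens × 4,096 dimensions ≈ 82,000 parameters), a tiny fraction of a multi-billion-parameter model.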

Under the hood:

- Initialization: You start with random “soft prompt” vectors.

- Training: On a dataset that emphasizes factual reliability, gradient descent adjusts only these vectors; the model’s own weights stay frozen.

- Deployment: During inference, these “soft prompts” are prepended to the user input, guiding the LLM’s style and accuracy.
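To make the “only the prompt is trained” idea concrete, here is a deliberately tiny sketch in plain Python. The “model” is a made-up stand-in (fixed word embeddings, mean pooling, and a logistic read-out), not a real LLM, and its output probability is an assumed proxy for “the desired behavior,” such as a cautious, grounded answer. What carries over to real models is the mechanism: learnable vectors are prepended to the input embeddings, and gradient descent updates only those vectors while every model weight stays frozen. For actual LLMs, libraries such as Hugging Face PEFT provide prompt tuning as a built-in method.

```python
import math
import random

DIM = 3  # embedding size of the toy model

# Frozen toy "model": fixed word embeddings plus a logistic read-out.
# They stand in for an LLM's weights and are never modified below.
EMBED = {
    "is": [0.2, -0.1, 0.0],
    "this": [0.0, 0.3, -0.2],
    "drug": [-0.4, 0.1, 0.3],
    "safe": [0.1, -0.3, -0.1],
    "law": [0.3, 0.2, -0.4],
    "valid": [-0.2, -0.2, 0.1],
}
W = [1.0, -2.0, 0.5]  # frozen read-out weights
B = -1.0              # frozen bias


def model_prob(soft_prompt: list[list[float]], words: list[str]) -> float:
    """Prepend soft-prompt vectors to the input embeddings, mean-pool, and score."""
    seq = soft_prompt + [EMBED[word] for word in words]
    pooled = [sum(vec[d] for vec in seq) / len(seq) for d in range(DIM)]
    logit = sum(w * h for w, h in zip(W, pooled)) + B
    return 1.0 / (1.0 + math.exp(-logit))


def train_soft_prompt(inputs, n_tokens=2, lr=2.0, steps=200, seed=0):
    """Gradient descent on cross-entropy toward the desired behavior (target = 1).

    Only the soft-prompt vectors change; EMBED, W, and B stay frozen.
    """
    rng = random.Random(seed)
    prompt = [[rng.gauss(0.0, 0.1) for _ in range(DIM)] for _ in range(n_tokens)]
    for _ in range(steps):
        for words in inputs:
            p = model_prob(prompt, words)
            seq_len = n_tokens + len(words)
            # dLoss/dlogit = p - 1; each prompt vector enters the mean with weight 1/seq_len.
            for vec in prompt:
                for d in range(DIM):
                    vec[d] -= lr * (p - 1.0) * W[d] / seq_len
    return prompt


if __name__ == "__main__":
    questions = [["is", "this", "drug", "safe"], ["is", "this", "law", "valid"]]
    print("before:", [round(model_prob([], q), 3) for q in questions])
    prompt = train_soft_prompt(questions)
    print("after: ", [round(model_prob(prompt, q), 3) for q in questions])
    print("trainable parameters:", len(prompt) * DIM)  # 2 tokens x 3 dims = 6
```

The same count from the bullet above applies here in miniature: the trainable parameters are just prompt tokens times embedding size.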

When it’s used:

- Company or domain specialization: Tuning a small prompt to steer the LLM toward a domain’s conventions and facts (like product specs).

- Persona or style alignment: Making the AI consistent in tone and correctness.

4. Supervised Fine-Tuning & Data Quality

What it is:

- The “traditional” method: gather labeled examples (correct question–answer pairs, factual references, etc.) and directly train the model to produce the right outputs.

Why it helps:

- If the fine-tuning data is high quality and factual, the model learns stronger “paths” for truth vs. guesswork.

- Each supervised example acts like a road sign pointing to correctness, discouraging random tangents.

Under the hood:

- Collect data: You build (or buy) a dataset of prompts and verified answers.

- Train: The LLM’s weights are adjusted to minimize the loss (typically cross-entropy on the answer tokens) on these examples, usually with a smaller learning rate than in pre-training.

- Evaluate: You test the updated model on new prompts to confirm a drop in hallucinations.
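The Train step commonly restricts the loss to the answer, so the model is graded on producing the verified response rather than on echoing the prompt. Below is a framework-free sketch of that idea, assuming text has already been converted to integer token IDs; the helper names and token IDs are hypothetical, and the `-100` value mirrors the default `ignore_index` of PyTorch’s `CrossEntropyLoss`, the same convention Hugging Face training code uses to skip label positions.

```python
IGNORE_INDEX = -100  # Matches the default ignore_index of torch.nn.CrossEntropyLoss.


def build_sft_example(prompt_ids: list[int], answer_ids: list[int], eos_id: int):
    """Concatenate prompt and answer; mask the prompt so only answer tokens are scored."""
    input_ids = prompt_ids + answer_ids + [eos_id]
    labels = [IGNORE_INDEX] * len(prompt_ids) + answer_ids + [eos_id]
    return input_ids, labels


def masked_nll(token_logprobs: list[float], labels: list[int]) -> float:
    """Mean negative log-likelihood over unmasked positions.

    token_logprobs[i] is the log-probability the model assigned to token i
    given the tokens before it (the usual next-token setup).
    """
    kept = [lp for lp, label in zip(token_logprobs, labels) if label != IGNORE_INDEX]
    if not kept:
        raise ValueError("example has no answer tokens to train on")
    return -sum(kept) / len(kept)


if __name__ == "__main__":
    ids, labels = build_sft_example([101, 102, 103], [7, 8], eos_id=0)
    print(ids)     # [101, 102, 103, 7, 8, 0]
    print(labels)  # [-100, -100, -100, 7, 8, 0]
    # Made-up log-probabilities: the three prompt positions are ignored by the loss.
    print(masked_nll([-5.0, -5.0, -5.0, -0.1, -0.2, -0.3], labels))
```

Minimizing this masked loss pushes up the probability of each verified answer token, which is exactly the “road sign pointing to correctness” each supervised example is meant to provide.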

Data curation matters:

- If you let mistakes or biases slip into your dataset, the model “learns” them as truths. This is why data can’t be curated casually. It must be faithful and well-verified.
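A few curation checks can be automated before any training run. This sketch assumes each example is a dict with hypothetical `prompt`, `answer`, and `verified` fields; it drops unverified or empty answers, removes near-duplicate prompts, and holds back prompts whose “verified” answers disagree, since training on both versions would teach the model to guess.

```python
from collections import defaultdict


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so near-identical strings compare equal."""
    return " ".join(text.lower().split())


def curate(examples: list[dict]) -> tuple[list[dict], list[str]]:
    """Keep verified, non-empty, de-duplicated examples.

    Prompts with conflicting verified answers are held back and returned
    separately for human review instead of being trained on.
    """
    answers_by_prompt = defaultdict(set)
    candidates = []
    for ex in examples:
        if not ex.get("verified") or not ex["answer"].strip():
            continue  # unverified or empty answers never reach training
        key = normalize(ex["prompt"])
        answers_by_prompt[key].add(normalize(ex["answer"]))
        candidates.append(ex)

    kept, seen, conflicts = [], set(), set()
    for ex in candidates:
        key = normalize(ex["prompt"])
        if len(answers_by_prompt[key]) > 1:
            conflicts.add(key)  # same question, different "verified" answers
        elif key not in seen:
            seen.add(key)  # first copy wins; later duplicates are dropped
            kept.append(ex)
    return kept, sorted(conflicts)
```

Returning the conflicts instead of silently discarding them keeps a human in the loop for exactly the cases where the dataset itself is unsure of the truth.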

When it’s used:

- Enterprise or mission-critical contexts: Healthcare chatbots, financial advisory tools, etc.

- Domain adaptation: Fine-tuning on specialized corpora (e.g., legal documents, medical guidelines) to ensure correctness.

Ultimately, no single approach is perfect. Researchers are still mixing and matching these techniques in creative ways, because hallucination is deeply rooted in how these models predict text. Still, each solution peels back a layer of the problem and brings us closer to an AI that generates text anchored in truth without losing its flair for creativity.

Taming imagination may sound like a contradiction. Yet we seek to preserve the remarkable creativity of LLMs while grounding them in truth. We want models that can engage in open-ended conversations and solve new problems, but also know when to say “I’m not sure.” Mitigating hallucinations is about aligning AI with the human values of honesty, reliability, and intellectual humility, creating language models that expand human knowledge without compromising on reality.

May this quest guide us as we push the limits of language AI. The potential benefits are immense, but so is the need to get it right. Let our imaginations soar, yet remain tied to veracity. With diligence, ingenuity, and integrity, we can craft models that are both brilliantly creative and reliably truthful.

About the Author

Hrishikesh is an Associate Machine Learning Engineer at Founding Minds, designing and deploying advanced AI applications. He is actively building expertise in Large Language Model (LLM) solutions and SportsAI, focusing on harnessing data-driven insights to solve real-world challenges. By leveraging diverse machine learning frameworks, he transforms raw data into actionable outcomes. Beyond his professional pursuits, Hrishikesh enjoys exploring emerging tech trends, diving into books, and engaging with games—constantly seeking new ideas and inspiration.