Ever wondered how your phone predicts what you’re about to type, how Netflix seems to know your taste in movies, or how voice assistants like Siri and Alexa understand you? A big part of the answer is neural networks! These AI models have changed the game, making everything from chatbots to facial recognition smarter and more intuitive. Today, we’re diving into three of the most important types: Recurrent Neural Networks (RNNs), Convolutional Neural Networks (CNNs), and Transformers. We’ll see how they work and why they matter in our daily lives. Let’s get started!

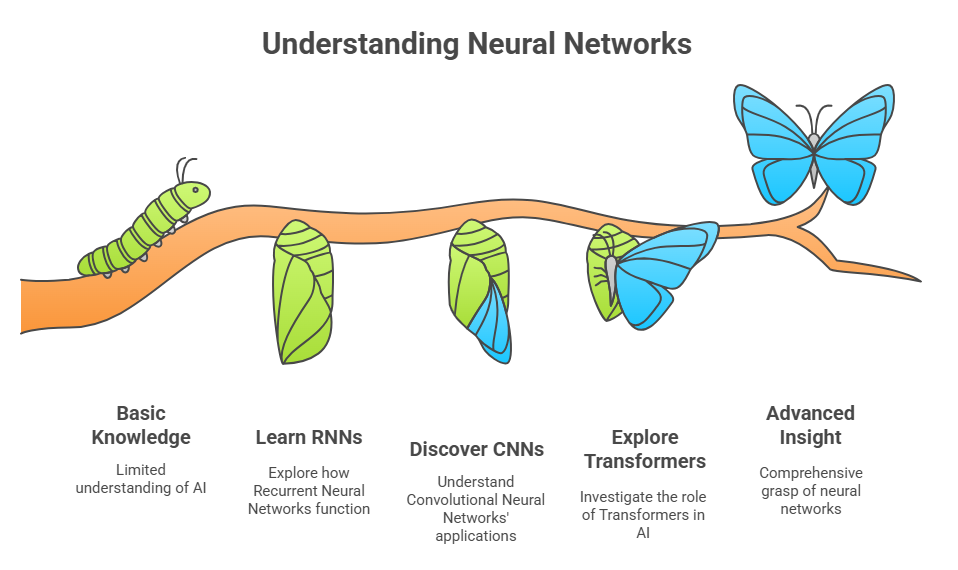

Understanding Neural Networks

At its core, a neural network is loosely inspired by the way the human brain processes information. It is built from interconnected nodes (or neurons), each of which computes a weighted sum of its inputs and passes the result through an activation function. By adjusting those weights during training, the network learns patterns and makes predictions from input data. As deep learning has evolved, different architectures have emerged to tackle different types of data and tasks. Let’s take a look at our three main players!
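To make the idea of a node concrete, here is a minimal sketch of a single artificial neuron in plain Python. It assumes a sigmoid activation (one common choice among several), and the weights and bias are hypothetical values picked by hand; in a real network they are learned from data.

```python
import math


def neuron(inputs, weights, bias):
    """A single artificial neuron: weighted sum of the inputs plus a bias,
    squashed into the range (0, 1) by a sigmoid activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical hand-picked parameters; training would adjust these.
output = neuron(inputs=[1.0, 0.5], weights=[0.4, -0.2], bias=0.1)
print(output)  # sigmoid(0.4), roughly 0.599
```

Stacking many such units into layers, with each layer’s outputs feeding the next, is what turns individual neurons into a network.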

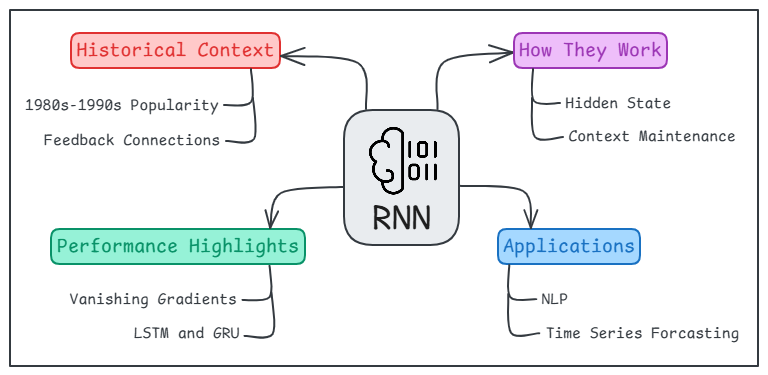

Recurrent Neural Networks (RNNs)

What Are They?

Recurrent Neural Networks, or RNNs, are designed to handle sequential data. They excel at tasks where the meaning of the current input depends on what came before it, which makes them a natural fit for time series and language.

Historical Context

RNNs gained popularity in the late 1980s and 1990s when researchers sought ways to model time-dependent and sequential data. Unlike traditional feedforward networks, RNNs introduced the concept of feedback connections, which enabled them to remember previous inputs and maintain context.

How Do They Work?

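The defining feature of RNNs is their hidden state, which carries information across time steps. At each step, the network combines the current input with the previous hidden state to produce a new hidden state, reusing the same weights at every step. This lets RNNs maintain context across a sequence, for example building up an understanding of a sentence one word at a time.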
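A vanilla (Elman-style) RNN updates its hidden state as h_t = tanh(W_xh·x_t + W_hh·h_{t-1} + b), combining the current input with the previous state. Below is a minimal sketch of that update in plain Python. It is an illustration only: the weight values are hypothetical, nothing is trained, and plain lists stand in for the tensors a deep-learning library would use.

```python
import math


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of numbers)."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def rnn_step(x_t, h_prev, W_xh, W_hh, b_h):
    """One vanilla RNN step: h_t = tanh(W_xh @ x_t + W_hh @ h_prev + b_h)."""
    pre = [a + b + c for a, b, c in zip(matvec(W_xh, x_t), matvec(W_hh, h_prev), b_h)]
    return [math.tanh(p) for p in pre]


def rnn_forward(xs, W_xh, W_hh, b_h):
    """Run the same cell (same weights) over every element of a sequence."""
    h = [0.0] * len(b_h)  # the hidden state starts out empty
    states = []
    for x_t in xs:
        h = rnn_step(x_t, h, W_xh, W_hh, b_h)
        states.append(h)
    return states


# Hypothetical weights for a 2-dimensional input and a 2-dimensional hidden state.
W_xh = [[0.5, -0.3], [0.8, 0.2]]
W_hh = [[0.1, 0.4], [-0.2, 0.3]]
b_h = [0.0, 0.0]

states = rnn_forward([[1.0, 0.0], [0.0, 0.0]], W_xh, W_hh, b_h)
# The second input is all zeros, yet states[1] is non-zero: the hidden
# state has carried information about the first input forward in time.
print(states)
```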

Applications in the Real World

- Natural Language Processing (NLP): RNNs are widely used in language modeling, machine translation, and sentiment analysis.

- Time Series Forecasting: From predicting stock prices to weather patterns, RNNs are effective in analyzing temporal trends.

Performance Highlights

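While RNNs are powerful, they struggle with long-term dependencies. During training (backpropagation through time), gradients are multiplied across many time steps, so they tend to shrink toward zero (vanishing gradients) or blow up (exploding gradients). Gated variants such as Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs) mitigate this by using gates that control what information is kept, updated, or forgotten.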
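A toy calculation shows why gradients vanish. Assume a scalar RNN with a single recurrent weight w, h_t = tanh(w·h_{t-1} + x), fed the same input x at every step (a simplifying assumption for illustration). By the chain rule, the gradient of the final state with respect to the initial one is a product of one factor w·(1 - h_t²) per time step, and when every factor is below 1 the product shrinks exponentially with sequence length.

```python
import math


def gradient_through_time(w, steps, x=0.5, h0=0.0):
    """d h_T / d h_0 for the toy scalar RNN h_t = tanh(w * h_{t-1} + x).

    Chain rule: d h_t / d h_{t-1} = w * (1 - h_t**2), because
    d tanh(z) / dz = 1 - tanh(z)**2. Multiply those factors over T steps.
    """
    h, grad = h0, 1.0
    for _ in range(steps):
        h = math.tanh(w * h + x)
        grad *= w * (1.0 - h * h)
    return grad


for T in (1, 10, 50):
    print(T, gradient_through_time(w=0.9, steps=T))
# The printed gradient shrinks rapidly as T grows: the first step barely
# influences the last one, which is the vanishing-gradient problem.
```

With w = 0.9 every factor is below 0.9, so the gradient after T steps is smaller than 0.9^T. Roughly speaking, the gating in LSTMs and GRUs gives gradients an additive path through time along which this product can stay close to 1.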

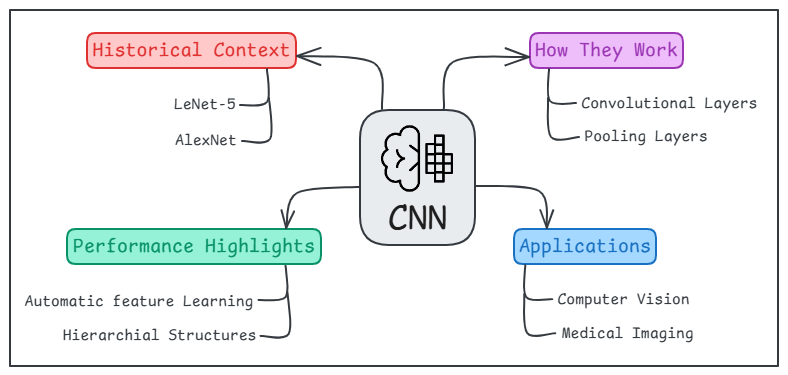

Convolutional Neural Networks (CNNs)

What Are They?

Convolutional Neural Networks, or CNNs, are a game-changer for processing spatial data, such as images. Their architecture is tailored to extract spatial hierarchies from visual data.

Historical Context

CNNs were inspired by the visual cortex in animals and gained attention in the 1990s through Yann LeCun’s pioneering work on handwritten digit recognition, culminating in LeNet-5 (1998). Their widespread adoption, however, came after AlexNet won the 2012 ImageNet Large Scale Visual Recognition Challenge (ILSVRC), outperforming traditional image classification approaches by a significant margin.

How Do They Work?

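CNNs slide small learnable filters (kernels) across the input image. Each filter responds to a particular local pattern, so early layers detect simple features like edges while deeper layers combine them into shapes and textures. Convolutional layers are typically followed by pooling layers, which downsample the resulting feature maps, shrinking their spatial size while keeping the strongest responses.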
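Both steps, filtering and pooling, can be sketched in plain Python on a toy grayscale image. This sketch assumes a single channel, stride 1, no padding, and a hand-picked 2×2 vertical-edge kernel; in a trained CNN the kernel values are learned. Like most deep-learning libraries, it computes cross-correlation (the kernel is not flipped), which is what “convolution” usually means in this context.

```python
def conv2d(image, kernel):
    """'Valid' 2D cross-correlation: stride 1, no padding, single channel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size window."""
    return [
        [
            max(feature_map[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]


# A 5x5 toy image: dark on the left, bright on the right, i.e. a vertical edge.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-picked vertical-edge detector (hypothetical; a CNN would learn its weights).
kernel = [[-1, 1],
          [-1, 1]]

features = conv2d(image, kernel)  # 4x4 map; every row is [0, 2, 0, 0]
pooled = max_pool2d(features)     # [[2, 0], [2, 0]]
print(features)
print(pooled)
```

The filter responds only where brightness jumps from left to right, and pooling halves each spatial dimension while keeping that peak response.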

Applications in the Real World

- Computer Vision: CNNs have long been the standard approach for image classification, object detection, and facial recognition.

- Medical Imaging: They are widely used to help detect diseases in X-rays and MRI scans.

Performance Highlights

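CNNs are known for strong performance on image-related tasks. Because each filter is small and its weights are shared across the whole image, they need far fewer parameters than fully connected networks, and stacking layers lets them learn hierarchical features automatically.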

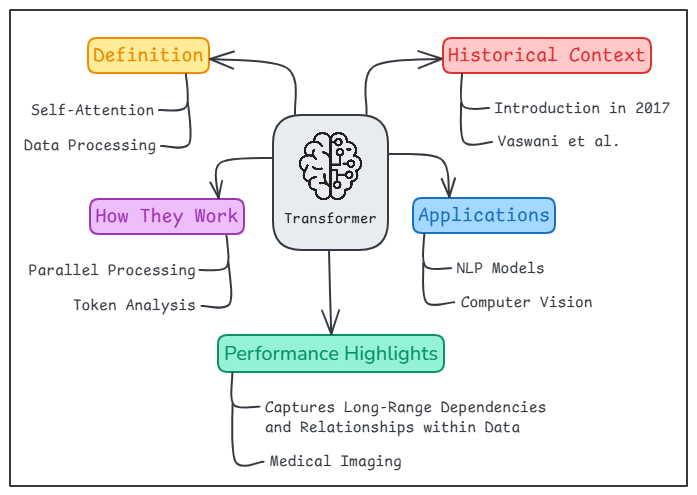

Transformers

What Are They?

Transformers have revolutionized NLP and beyond with their unique approach to processing data sequences. They rely on self-attention mechanisms, which allow them to weigh the importance of different input elements dynamically.

Historical Context

Transformers were introduced in 2017 by Vaswani et al. in their landmark paper “Attention Is All You Need”. They transformed NLP by removing recurrence, and with it the RNN bottleneck of processing tokens one at a time, which enabled parallel computation across an entire sequence.

How Do They Work?

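Instead of processing input one step at a time, transformers use self-attention to compare every token with every other token in the sequence at once, capturing relationships regardless of how far apart the tokens are. Because attention by itself ignores word order, positional encodings are added to the inputs to tell the model where each token sits. This parallelism makes training on modern hardware much faster and lets transformers scale to very large datasets and models.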
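The core computation, scaled dot-product self-attention, softmax(QKᵀ/√d_k)·V as defined in Vaswani et al., fits in a few lines of plain Python. This is a single-head sketch with no masking and no positional encodings, and identity matrices stand in for the learned query, key, and value projections W_q, W_k, and W_v (a simplifying assumption for illustration).

```python
import math


def softmax(xs):
    """Turn scores into weights that are positive and sum to 1."""
    m = max(xs)  # subtracting the max avoids overflow in exp
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention over the rows of X."""
    Q, K, V = matmul(X, W_q), matmul(X, W_k), matmul(X, W_v)
    d_k = len(K[0])
    # Every query is scored against every key independently; no score depends
    # on another token's result, which is what lets real implementations
    # compute them all in parallel.
    scores = [[sum(q * k for q, k in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in K]
              for q_row in Q]
    weights = [softmax(row) for row in scores]
    # Each output row is a weighted average of the value vectors.
    return matmul(weights, V), weights


# Three toy token embeddings of dimension 2; identity projections are a stand-in.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
I = [[1.0, 0.0], [0.0, 1.0]]
output, weights = self_attention(X, I, I, I)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` shows how strongly one token attends to every token in the sequence, including itself.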

Applications in the Real World

- Natural Language Processing: Transformers power models such as BERT (for understanding tasks like classification and question answering) and GPT (for text generation), and they are the standard architecture for translation and summarization.

- Computer Vision: Vision Transformers (ViT) split an image into fixed-size patches and treat each patch as a token, achieving results competitive with CNNs, especially when pretrained on large datasets.
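To see how an image becomes a sequence a transformer can read, here is a sketch of the patch-splitting step used by Vision Transformers. It assumes a single-channel image whose sides are multiples of the patch size (leftover pixels would simply be dropped by this sketch). ViT commonly uses 16×16 patches and then applies a learned linear projection and position embeddings to each flattened patch; both are omitted here.

```python
def image_to_patches(image, patch):
    """Split an H x W single-channel image into non-overlapping patch x patch
    tiles, flattening each tile row by row into one vector ('token').
    Tiles are emitted left to right, top to bottom."""
    height, width = len(image), len(image[0])
    tokens = []
    for i in range(0, height - patch + 1, patch):
        for j in range(0, width - patch + 1, patch):
            tokens.append([image[i + a][j + b] for a in range(patch) for b in range(patch)])
    return tokens


# A 4x4 toy image whose pixel values are just 0..15, so the output is easy to read.
image = [[row * 4 + col for col in range(4)] for row in range(4)]
tokens = image_to_patches(image, patch=2)
print(tokens)  # [[0, 1, 4, 5], [2, 3, 6, 7], [8, 9, 12, 13], [10, 11, 14, 15]]
```

The resulting list of vectors plays the same role for the transformer as a sequence of word embeddings does for text.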

Performance Highlights

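Transformers excel at capturing long-range dependencies and relationships within data and outperform both RNNs and CNNs in many applications. The trade-off is cost: self-attention compares every pair of tokens, so its compute and memory grow quadratically with sequence length, and transformers typically need large amounts of training data.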

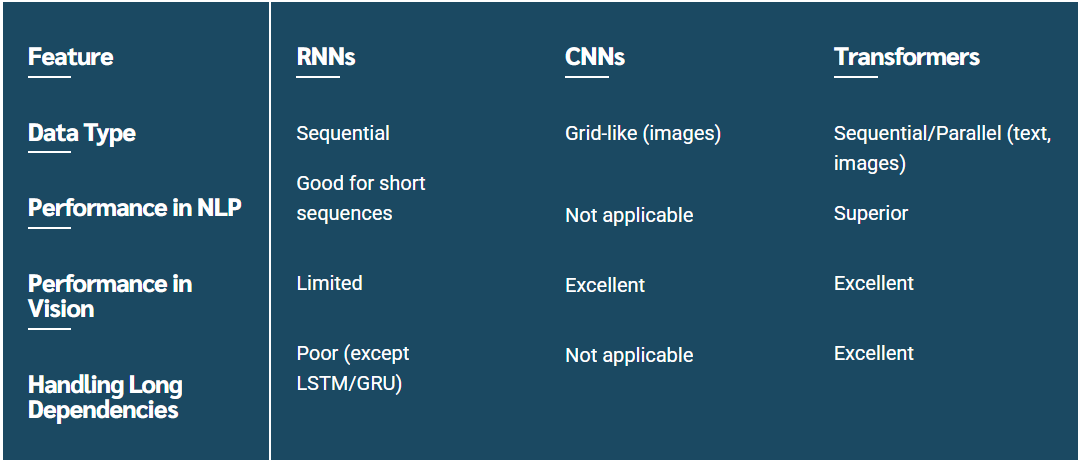

| Model | Data Type | Performance in NLP | Performance in Vision | Handling Long-Range Dependencies |
|---|---|---|---|---|
| RNNs | Sequential | Good for short sequences | Limited | Poor (improved by LSTM/GRU) |
| CNNs | Grid-like (images) | Limited (e.g., text classification) | Excellent | Limited (local receptive fields) |
| Transformers | Token sequences processed in parallel (text, image patches) | Superior | Excellent | Excellent |

Conclusion: The Path Ahead

Neural networks have revolutionized AI. General-purpose deep neural networks (DNNs) form the foundation, and specialized architectures like RNNs, CNNs, and Transformers have advanced what is possible with sequential data, vision, and contextual learning. RNNs brought memory to neural networks but struggled with long-term dependencies, CNNs transformed image processing, and Transformers redefined NLP and beyond with highly parallel training and strong long-range modeling.

As AI evolves, these technologies are unlocking new possibilities—from real-time translation to autonomous systems. What new breakthroughs will emerge next? How will these models shape the future? The answers may redefine how we interact with technology in the years to come!

About the Author

Nandagopal J is an Associate Software Engineer at Founding Minds. He is a graduate of the College of Engineering, Chengannur, where he completed his B.Tech in Computer Science and Engineering. Passionate about technology and software development, Nandagopal enjoys solving problems and contributing to innovative solutions. With a strong academic foundation and practical experience, he is always eager to learn and grow in the tech field.