Introduction

In today’s data-driven world, businesses often struggle to make sense of their data because of its sheer volume, complexity, and lack of structure, so it can feel frozen rather than useful. This challenge is particularly pronounced with unstructured data, which can seem impenetrable without the right tools to process and analyze it. Advanced analytics and Machine Learning methods can unlock valuable insights from unstructured data, turning it into a dynamic, actionable resource.

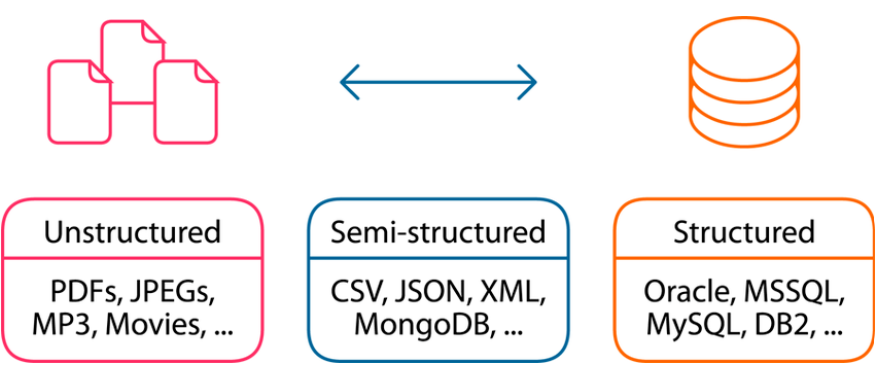

Much of the data that humans and machines generate is unstructured, so businesses need to use it to fully understand trends and behaviors. Combined with advanced analytics and machine learning, unstructured data can reveal insights that structured data alone might miss. Unlike structured data, unstructured and semi-structured data can’t easily be loaded into a spreadsheet or another predefined data model; extracting useful information from it requires intelligent processing.

Different data types: structured, semi‐structured, and unstructured. Data source: "Steam computing paradigm: Cross‐layer solutions over cloud, fog, and edge computing" (researchgate.net)

Intelligent Document Categorization

Effectively managing unstructured data remains a significant challenge. With Machine Learning (ML) and Artificial Intelligence (AI), however, we can automate the organization and classification of documents into specific categories. This process, known as Intelligent Document Categorization, goes beyond basic keyword matching: its algorithms model a document’s content, context, and nuances, which leads to more accurate and efficient categorization.

In Intelligent Document Categorization, feature extraction bridges the gap between raw text and machine learning models. It transforms unstructured PDF documents into structured feature vectors that capture key elements, such as each document’s five most important keywords, so the information becomes usable for further analysis. With these feature vectors, machine learning models can be trained to classify documents into categories. Amazon Comprehend handles natural language processing and keyword extraction, and AWS Athena handles data processing, which makes feature extraction more accurate and efficient. You can also use Amazon QuickSight, a serverless business intelligence tool, to build visualizations that present the resulting insights.

Process Flow of Intelligent Document Categorization

In this article, we will explore how two powerful AWS tools—Amazon Comprehend and AWS Athena—can be used to extract and analyze information from documents. We’ll delve into how these tools work together to enhance document processing capabilities, offering a comprehensive solution for categorizing and understanding unstructured data.

Amazon Comprehend

Amazon Comprehend is a Natural Language Processing (NLP) service from AWS (Amazon Web Services). It uses machine learning models to analyze text, extract meaningful information, and uncover relationships within the content, performing tasks such as entity recognition, syntax analysis, and key phrase extraction. By using Amazon Comprehend, businesses can turn complex unstructured text into actionable insights that support informed decision-making and strategic growth.

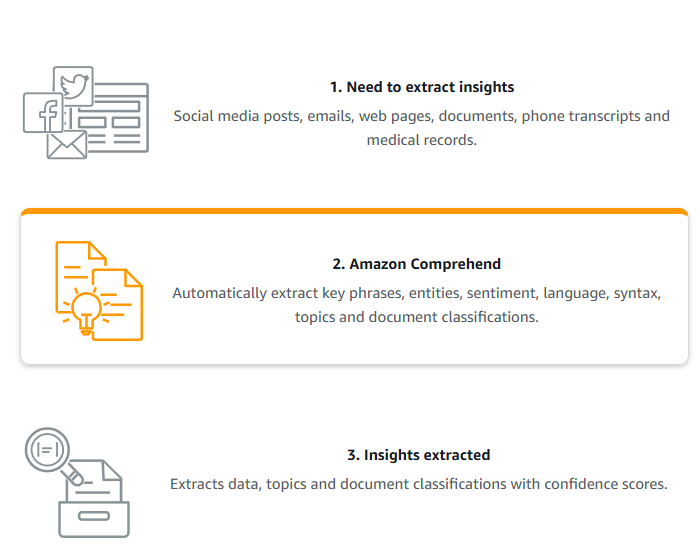

When working with Amazon Comprehend, the process begins by extracting the text from each PDF file and sending it to the service. The responses are then saved as three CSV files per PDF, containing key phrase data, syntax data, and entity data respectively, which together give a comprehensive analysis of the document’s content.

Overview of steps in Amazon Comprehend. Data source: Amazon Comprehend (ap-southeast-2)

1. Entity Recognition

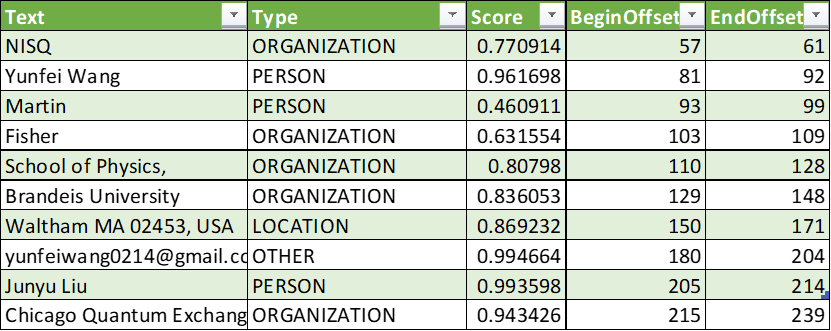

Entity recognition using Amazon Comprehend involves identifying and classifying named entities in text into predefined categories such as people, organizations, locations, dates, and more. Amazon Comprehend provides this capability through its detect_entities API, which uses natural language processing (NLP) to extract these entities and categorize them.
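As a minimal sketch, the helper below calls detect_entities on one page of text and keeps only confident entities of selected types. It assumes a boto3 Comprehend client is passed in; the function name, the default entity types, and the 0.8 score threshold are illustrative choices, not part of the article's pipeline.

```python
from typing import Any, Iterable


def detect_confident_entities(
    client: Any,
    text: str,
    types: Iterable[str] = ("ORGANIZATION", "PERSON", "LOCATION"),
    min_score: float = 0.8,
) -> list[dict]:
    """Return entities of the requested types whose Score is at least min_score.

    Hypothetical helper; `client` is assumed to be a boto3 Comprehend client,
    and the default types and threshold are illustrative assumptions.
    """
    wanted = set(types)
    response = client.detect_entities(Text=text, LanguageCode="en")
    return [
        {"Text": e["Text"], "Type": e["Type"], "Score": e["Score"]}
        for e in response["Entities"]
        if e["Type"] in wanted and e["Score"] >= min_score
    ]
```

With AWS credentials configured, a call such as detect_confident_entities(boto3.client("comprehend"), page_text) returns a list of dictionaries. Passing the client in as a parameter keeps the function easy to test with a stub instead of a live AWS connection.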

2. Syntax Analysis

Syntax analysis using Amazon Comprehend examines the grammatical structure of text to understand how words and phrases are organized. The detect_syntax operation splits the text into tokens and labels each one with its part of speech, such as noun, verb, or adjective, along with a confidence score.

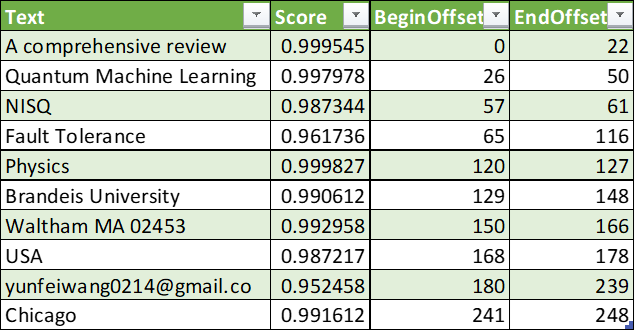
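Each token returned by detect_syntax includes a PartOfSpeech object with a Tag (such as NOUN, PROPN, or VERB) and a Score. The sketch below, which assumes a boto3 Comprehend client is passed in, keeps the nouns and proper nouns from a page of text, the same parts of speech the Athena step later filters on. The function name and the optional score threshold are hypothetical.

```python
from typing import Any

NOUN_TAGS = {"NOUN", "PROPN"}  # common noun and proper noun tags


def extract_noun_tokens(client: Any, text: str, min_score: float = 0.0) -> list[str]:
    """Return tokens tagged as nouns or proper nouns, in document order.

    Hypothetical helper; `client` is assumed to be a boto3 Comprehend client.
    """
    response = client.detect_syntax(Text=text, LanguageCode="en")
    return [
        token["Text"]
        for token in response["SyntaxTokens"]
        if token["PartOfSpeech"]["Tag"] in NOUN_TAGS
        and token["PartOfSpeech"]["Score"] >= min_score
    ]
```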

3. Key Phrase Extraction

Key phrase extraction using Amazon Comprehend identifies the phrases or terms in text that capture its essential topics and themes. The detect_key_phrases API call processes the provided text and returns the key phrases along with a confidence score for each one. These phrases can then be used for a variety of analytical and operational purposes.
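A minimal sketch of picking the highest-scoring key phrases from one page, assuming a boto3 Comprehend client is passed in. Comprehend's Score expresses confidence in the detection rather than importance, so ranking by it is only a heuristic; the article itself selects its top five keywords by frequency in Athena. The function name and the case-insensitive de-duplication are assumptions.

```python
from typing import Any


def top_key_phrases(client: Any, text: str, n: int = 5) -> list[str]:
    """Return up to n key phrases, highest Score first, ignoring case-only duplicates.

    Hypothetical helper; `client` is assumed to be a boto3 Comprehend client.
    """
    response = client.detect_key_phrases(Text=text, LanguageCode="en")
    ranked = sorted(response["KeyPhrases"], key=lambda kp: kp["Score"], reverse=True)
    seen = set()
    result = []
    for phrase in ranked:
        if len(result) >= n:
            break
        key = phrase["Text"].lower()
        if key not in seen:  # keep only the first (highest-scoring) spelling
            seen.add(key)
            result.append(phrase["Text"])
    return result
```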

Amazon Comprehend with Python

1. Create a client object for interacting with the Amazon Comprehend service

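```python
import boto3

# Client for the Amazon Comprehend service (uses your configured AWS credentials and region)
comprehend = boto3.client('comprehend')
```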

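2. We use PyMuPDF, imported as fitz, to open PDF files and extract their text page by page. The library can also extract images and metadata, or modify documents.

```python
import os
import fitz  # PyMuPDF

# file_path is the path to the input PDF
doc = fitz.open(file_path)
file_prefix = os.path.basename(file_path)[:6]  # first six characters of the file name, used to name output CSVs
for page_num, page in enumerate(doc):
    detect_text = page.get_text()  # plain text of the current page
```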

3. For each page in the PDF, extract entities, key phrases, and syntax using Amazon Comprehend. In this example, the entity information is saved to a pandas DataFrame and appended to one CSV file per document; the syntax tokens and key phrases can be saved the same way.

```python
import os
import pandas as pd

# output_path is the folder where the per-document CSV files are written
for page_num, page in enumerate(doc):
    detect_text = page.get_text()
    if not detect_text.strip():
        continue  # skip blank pages: Comprehend requires non-empty text

    # Run the three Comprehend analyses on the page text
    text_analysis = {
        "Entities": comprehend.detect_entities(Text=detect_text, LanguageCode='en')['Entities'],
        "KeyPhrases": comprehend.detect_key_phrases(Text=detect_text, LanguageCode='en')['KeyPhrases'],
        "SyntaxTokens": comprehend.detect_syntax(Text=detect_text, LanguageCode='en')['SyntaxTokens'],
    }

    # Save entities: one row per detected entity
    entities_df = pd.DataFrame([{
        'Text': entity['Text'],
        'Type': entity['Type'],
        'Score': entity['Score'],
        'BeginOffset': entity['BeginOffset'],
        'EndOffset': entity['EndOffset'],
    } for entity in text_analysis["Entities"]])

    # Append to a single CSV per document, writing the header only once
    entity_csv = os.path.join(output_path, f"entity{file_prefix}.csv")
    if not entities_df.empty:
        entities_df.to_csv(entity_csv, mode='a', index=False,
                           header=not os.path.exists(entity_csv))
```
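The loop above saves only the entities. One way to save the syntax tokens and key phrases is sketched below with Python's standard csv module instead of pandas. The column names, and file names such as syntax{file_prefix}.csv and keyphrase{file_prefix}.csv, are assumptions modeled on the entity file; the helpers are hypothetical.

```python
import csv
import os
from typing import Iterable

# Hypothetical column layouts, modeled on the entity CSV
SYNTAX_FIELDS = ["Text", "Tag", "TagScore", "BeginOffset", "EndOffset"]
KEY_PHRASE_FIELDS = ["Text", "Score", "BeginOffset", "EndOffset"]


def syntax_rows(tokens: Iterable[dict]) -> list[dict]:
    """Flatten SyntaxTokens, lifting the nested PartOfSpeech fields into columns."""
    return [
        {
            "Text": t["Text"],
            "Tag": t["PartOfSpeech"]["Tag"],
            "TagScore": t["PartOfSpeech"]["Score"],
            "BeginOffset": t["BeginOffset"],
            "EndOffset": t["EndOffset"],
        }
        for t in tokens
    ]


def key_phrase_rows(phrases: Iterable[dict]) -> list[dict]:
    """Keep the KeyPhrases fields that map directly onto CSV columns."""
    return [{field: p[field] for field in KEY_PHRASE_FIELDS} for p in phrases]


def append_rows(path: str, rows: list[dict], fieldnames: list[str]) -> None:
    """Append rows to a CSV file, writing the header only when the file is new."""
    if not rows:
        return  # nothing to write; avoids creating a header-less file
    write_header = not os.path.exists(path)
    with open(path, "a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames)
        if write_header:
            writer.writeheader()
        writer.writerows(rows)
```

Inside the page loop, a call such as append_rows(os.path.join(output_path, f"syntax{file_prefix}.csv"), syntax_rows(text_analysis["SyntaxTokens"]), SYNTAX_FIELDS) appends one row per token. Lifting Tag and Score out of the nested PartOfSpeech object keeps the CSV flat, so Athena can filter on the tag column directly.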

Feature Extraction using AWS Athena

AWS Athena is an interactive query service from AWS (Amazon Web Services) that lets you analyze data stored in Amazon S3 using standard SQL, which makes it a practical tool for preprocessing large datasets. As a serverless service, Athena requires no infrastructure management, and you pay for the amount of data each query scans. You can use Athena to create a database from the CSV files generated with Amazon Comprehend. Once the database is set up, you can write SQL queries to extract keywords that are nouns or proper nouns and belong to a specific entity category.

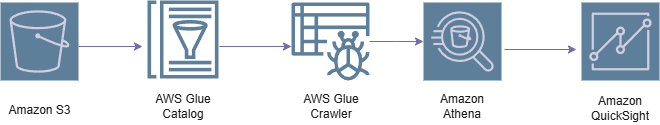

Flow Diagram of Intelligent Document Categorization

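1. Set Up AWS Athena: Ensure you have an AWS account and the permissions needed to use Athena and S3, then open the Athena console in the AWS Management Console. Athena writes query results to S3, so configure a query result location (in the console settings or your workgroup) if one is not already set.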

2. Create a Database: In Athena, a database is a logical container for table definitions, which are stored in the AWS Glue Data Catalog, while the CSV files themselves stay in Amazon S3. You can upload the files to S3 directly or move them with services such as AWS Glue or AWS Data Pipeline, and then create the database with a CREATE DATABASE statement in the Athena query editor or in the AWS Glue console. Amazon S3 is the AWS object storage service: it stores and retrieves any amount of data from anywhere on the web and is designed for scalability, durability, and security.

3. Define Tables: Create tables by defining the schema of the data stored in S3. First, create an S3 bucket and upload the CSV files, keeping each table’s files under its own prefix (folder) with a consistent column layout. Then configure an AWS Glue crawler to scan the bucket and record the table definitions in the AWS Glue Data Catalog. Alternatively, you can run a CREATE EXTERNAL TABLE statement that specifies the schema and the S3 location of the data.
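As a sketch of the CREATE EXTERNAL TABLE route, the code below defines a table over the entity CSV files and runs the statement through the boto3 Athena client's start_query_execution and get_query_execution calls, polling until the query finishes. The bucket path, table name, and helper name are hypothetical. Columns are declared as string to keep the sketch simple with OpenCSVSerde, which handles the quoted fields pandas writes; numeric columns can be cast in queries, for example CAST(score AS double).

```python
import time
from typing import Any

# Hypothetical S3 prefix holding only the entity*.csv files (one prefix per table).
CREATE_ENTITIES_TABLE = """
CREATE EXTERNAL TABLE IF NOT EXISTS entities (
  text string,
  type string,
  score string,
  beginoffset string,
  endoffset string
)
ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.OpenCSVSerde'
LOCATION 's3://your-bucket/comprehend-output/entities/'
TBLPROPERTIES ('skip.header.line.count' = '1')
"""


def run_athena_query(client: Any, sql: str, database: str, output_location: str,
                     poll_seconds: float = 1.0) -> str:
    """Start an Athena query, wait until it finishes, and return its execution ID.

    Hypothetical helper; `client` is assumed to be a boto3 Athena client.
    """
    execution_id = client.start_query_execution(
        QueryString=sql,
        QueryExecutionContext={"Database": database},
        ResultConfiguration={"OutputLocation": output_location},
    )["QueryExecutionId"]
    while True:
        status = client.get_query_execution(QueryExecutionId=execution_id)["QueryExecution"]["Status"]
        state = status["State"]
        if state == "SUCCEEDED":
            return execution_id
        if state in ("FAILED", "CANCELLED"):
            raise RuntimeError(f"Athena query {state}: {status.get('StateChangeReason', '')}")
        time.sleep(poll_seconds)  # still QUEUED or RUNNING
```

The database must exist first, so a typical sequence is run_athena_query(athena, "CREATE DATABASE IF NOT EXISTS comprehend_db", "default", results_uri) followed by run_athena_query(athena, CREATE_ENTITIES_TABLE, "comprehend_db", results_uri), where athena = boto3.client("athena"), and comprehend_db and results_uri (an S3 path you own) are placeholders.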

Data Preprocessing with AWS Athena

1. Data Cleaning: Use SQL queries to clean data, such as removing duplicates, filtering out invalid entries, and handling missing values.

2. Data Transformation: Transform data using SQL functions. You can create new columns, format data, and perform aggregations.

3. Joining Data: Join multiple tables to enrich data or combine datasets.

4. Aggregations and Analysis: Perform aggregations to summarize data and generate insights. Using the GROUP BY, ORDER BY, and LIMIT clauses together with the IN and EXISTS predicates, we can identify the five most frequent keywords that fall into categories such as noun, proper noun, title, or miscellaneous. Next, the selected top five keywords are label encoded, which maps each one to a unique integer, because ML algorithms require numeric input. These encoded keywords form a feature vector, a numeric representation of the document for machine learning models. Finally, the feature vectors are used to train a machine learning model, which learns patterns and relationships from them. Once trained, the model can predict categories for new, unlabeled documents.
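The label-encoding step can be sketched in plain Python, assuming the Athena query has already returned each document's top five keywords as a list. The helper names and the PAD value of -1 for missing or unseen keywords are hypothetical. Because label codes are arbitrary integers, models such as Logistic Regression or SVM may read a meaningless order into them, so a multi-hot vector (one column per keyword) is shown as a common alternative, not as the article's method.

```python
PAD = -1  # hypothetical code for missing or unseen keywords


def build_label_encoder(doc_keywords: dict[str, list[str]]) -> dict[str, int]:
    """Map each distinct keyword to a unique integer (sorted for reproducibility)."""
    vocabulary = sorted({kw for kws in doc_keywords.values() for kw in kws})
    return {kw: code for code, kw in enumerate(vocabulary)}


def label_encoded_vector(keywords: list[str], encoder: dict[str, int], k: int = 5) -> list[int]:
    """Encode up to k keywords as integers; pad short lists and unseen keywords with PAD."""
    codes = [encoder.get(kw, PAD) for kw in keywords[:k]]
    return codes + [PAD] * (k - len(codes))


def multi_hot_vector(keywords: list[str], encoder: dict[str, int]) -> list[int]:
    """Alternative encoding: one column per known keyword, 1 if the document contains it."""
    vector = [0] * len(encoder)
    for kw in keywords:
        if kw in encoder:
            vector[encoder[kw]] = 1
    return vector
```

For example, with documents {"a.pdf": ["invoice", "amount", "vendor"], "b.pdf": ["patient", "invoice"]}, the encoder assigns amount=0, invoice=1, patient=2, vendor=3, and a.pdf becomes [1, 0, 3, -1, -1]. The resulting vectors, paired with each document's category label, are the training input for the classifiers discussed next.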

Overall, combining Amazon Comprehend and AWS Athena with machine learning models creates a robust framework for feature extraction and classification. Key models for Intelligent Document Categorization include Logistic Regression, which estimates the probability that a document belongs to each category; Support Vector Machines (SVM), which find the maximum-margin hyperplane separating document categories in a high-dimensional feature space; and Decision Trees, which are easy to interpret because they classify documents through a tree of decisions based on feature values.

In summary, Amazon Comprehend’s natural language processing capabilities, together with Athena’s querying functions, provide a comprehensive solution for extracting and analyzing unstructured data. This integration improves the accuracy and efficiency of document categorization and reveals actionable insights for informed decision-making. As organizations navigate the complexities of unstructured data, these AWS tools can streamline processes and open new opportunities for growth and innovation.

Future Work

Future work could pair Amazon Comprehend with Amazon Kinesis to process continuous data streams, adding real-time sentiment analysis and custom entity recognition for immediate insights. Domain-specific entity recognition models, for example for healthcare or finance, would make the extracted information more relevant, and Amazon QuickSight could be used with Athena for more intuitive data exploration. Predictive analytics with Amazon SageMaker could use sentiment and entity data to forecast trends, and multilingual analysis could extend the approach to documents in other languages.

About the Author

Aswathi P V is a certified Data Scientist, holding prestigious credentials from IBM and ICT along with a Master’s degree in Machine Learning. She has contributed to the field through internationally recognized publications and brings over six years of teaching experience. In addition, her two years as a Data Engineer at Founding Minds have equipped her with hands-on expertise in both academic and professional environments. Beyond her technical prowess, she finds joy in creative activities like dancing and stitching and stays grounded through yoga and meditation.