The Test Data Dilemma

Perfect test data is the holy grail of QA engineering. We need data that’s realistic, varied, and thorough enough to catch edge cases – but our current options all fall short. Production data masking gives us realism but raises privacy flags. Random generators pump out quantity without quality relationships. And creating test data manually? That’s like painting the Golden Gate Bridge with a watercolor brush.

While these methods have served us historically, today’s rapid development cycles demand something better. The hunt for perfect test data continues.

What exactly is Synthetic Data?

In the fast-moving world of AI, a new player has emerged that promises to change how we train and test our models: synthetic data, meaning artificial yet realistic datasets created from scratch to mirror the key features of real data. It’s like having a genie that can grant you endless wishes for data, but as with all wishes, we need to be careful what we ask for.

But here’s the million-dollar question: Can something artificially created truly capture the nuances, complexities, and unpredictability of real-world data? As we dive deeper into this topic, we’ll explore whether synthetic data is the silver bullet it appears to be, or if we’re at risk of creating a testing echo chamber that fails to prepare our models for the messy realities of the real world.

Enter LLMs: A Game-Changing Approach

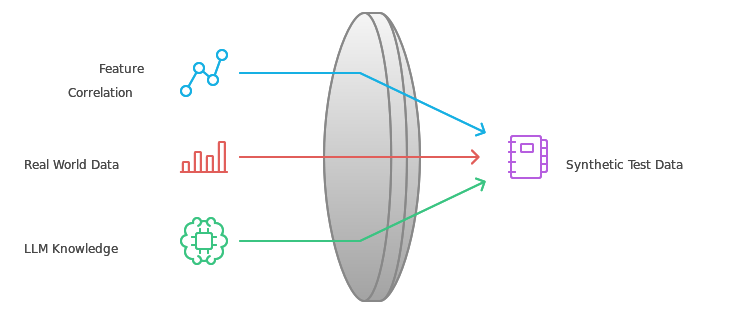

Large Language Models (LLMs) are the breakthrough that’s reshaping how we approach test data generation. Unlike conventional generators, LLMs bring a level of intelligence to data creation that feels almost intuitive. These models don’t just generate data; they take context into account, follow business rules described in the prompt, and craft meaningful data points that maintain logical relationships. It’s like having a sophisticated data architect working at the speed of automation.

Assessing LLM Capabilities: Key Dimensions

LLMs excel in three key areas for test data generation. The first is data realism. Unlike traditional random generators, these models create lifelike customer profiles where everything connects naturally: age influences buying patterns, location affects shipping, and income shapes product choices. The difference is in these subtle, realistic relationships.

The second strength lies in schema adherence. Modern LLMs don’t just generate data; they respect the rules. They maintain data type constraints, handle complex business logic, and ensure all generated values stay within specified ranges. Think of it as having a meticulous database administrator built into your data generation tool.
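
Schema adherence is easy to check mechanically rather than take on trust. The sketch below is a minimal illustration, not part of the original workflow: the `SCHEMA` dictionary, its ranges, and the `validate_record` helper are all hypothetical, with field names borrowed from the loan dataset used later in this article.

```python
from typing import Any

# Hypothetical schema: field -> (expected type, allowed set OR (min, max) range).
# The bounds are assumptions chosen for illustration only.
SCHEMA = {
    "Gender": (str, {"Male", "Female"}),
    "ApplicantIncome": (int, (0, 1_000_000)),
    "Credit_History": (float, {0.0, 1.0}),
}


def validate_record(record: dict[str, Any]) -> list[str]:
    """Return a list of human-readable schema violations for one generated row."""
    errors = []
    for field, (expected_type, rule) in SCHEMA.items():
        if field not in record:
            errors.append(f"{field}: missing")
            continue
        value = record[field]
        if not isinstance(value, expected_type):
            errors.append(f"{field}: expected {expected_type.__name__}, got {type(value).__name__}")
            continue
        if isinstance(rule, set):
            # Categorical rule: value must be one of the allowed options.
            if value not in rule:
                errors.append(f"{field}: {value!r} not in {sorted(rule)}")
        else:
            # Numeric rule: value must fall inside the inclusive range.
            low, high = rule
            if not low <= value <= high:
                errors.append(f"{field}: {value} outside [{low}, {high}]")
    return errors


good = {"Gender": "Male", "ApplicantIncome": 5849, "Credit_History": 1.0}
bad = {"Gender": "married", "ApplicantIncome": -5, "Credit_History": 1.0}
print(validate_record(good))
print(validate_record(bad))
```

Running every generated row through a check like this separates rows that can go straight into a test suite from rows that need regeneration or manual review.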

Finally, LLMs excel at edge case coverage – perhaps their most valuable feature for testing. They automatically identify boundary conditions and create unusual yet valid data combinations that human testers might never think to try. They’re particularly adept at generating negative test scenarios, helping catch potential issues before they reach production.

Harnessing LLMs for Domain-Specific Challenges

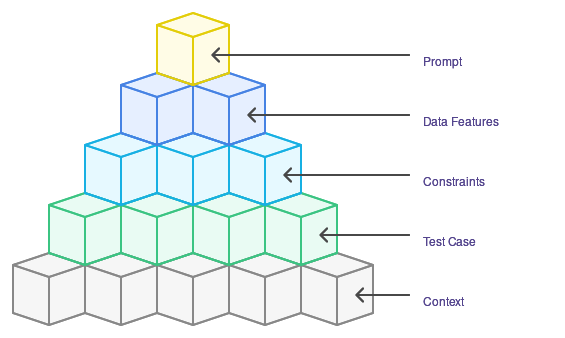

Unlocking the true potential of Large Language Models (LLMs) in generating synthetic test data requires a nuanced approach tailored to specific use cases. The effectiveness of an LLM can vary significantly between Natural Language Processing (NLP) tasks, classification problems, and regression scenarios. To address this variability, we’ve found that developing a flexible prompting template is key. This template serves as a customizable framework, guiding the LLM to produce synthetic data that’s both accurate and relevant to the task at hand.
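
As a rough sketch of what such a template can look like in code, the helper below assembles a prompt from the same ingredients used in the case studies that follow: a test case, a use case, an optional constraint, and optional additional information. The function name `build_prompt` and the exact wording of each section are assumptions made for illustration.

```python
def build_prompt(test_case, use_case, constraint="", additional_info="", output_format=""):
    """Assemble a test-data prompt from named sections; empty sections are skipped."""
    parts = [
        f"Generate synthetic test data to meet this test case: {test_case}",
        f"Use case: {use_case}",
    ]
    if constraint:
        parts.append(f"Constraint: {constraint}")
    if additional_info:
        parts.append(f"Additional information to include: {additional_info}")
    if output_format:
        parts.append(f"Return the result in this format: {output_format}")
    # Ask for data rather than a script that would generate data.
    parts.append("Provide the generated test data itself, not code.")
    return "\n".join(parts)


prompt = build_prompt(
    test_case="Verify that varied phrasings of 'Rupees in Words' are recognized.",
    use_case="Cheque document processing for a bank cheque book.",
    constraint="Keep the amount fixed at 123,000.",
)
print(prompt)
```

Keeping the sections as named parameters makes it straightforward to reuse one template across NLP, classification, and regression scenarios by changing only the arguments.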

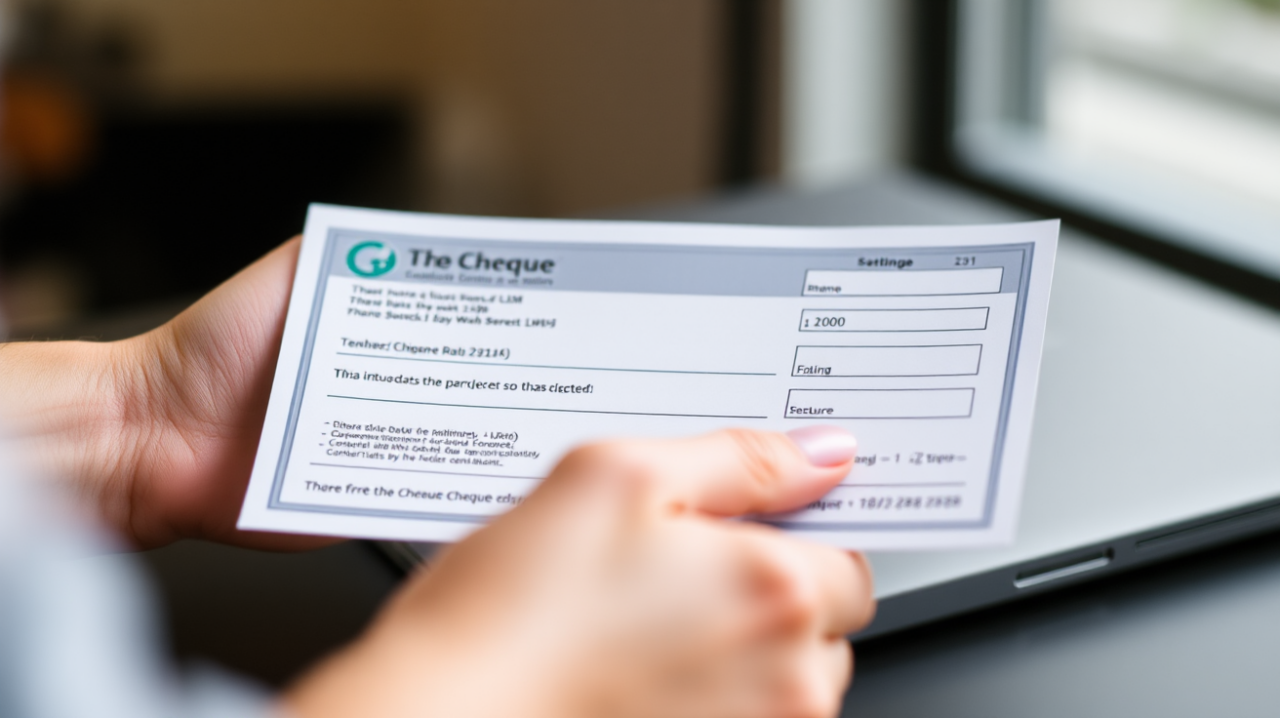

Tailoring LLM-Generated Test Data for NLP Tasks: A Case Study in Cheque Digitalization

When it comes to NLP-based use cases, the ability to generate bespoke test data on demand is particularly valuable. By carefully crafting a template that incorporates the right context, test cases, and necessary constraints, we can shape the output to meet specific requirements. A prime example of this approach is in cheque digitalization systems. In our experiments with the Llama-3.1-70B model, we’ve successfully generated synthetic data that closely mimics real-world cheque information.

Code:

```python
from groq import Groq

client = Groq(api_key="your-api-key")

# Template inputs: the test case to cover and the use case with sample data.
testcase_1 = "To verify if the cheque document processing system can accurately recognize various phrasings of 'Rupees in Words'."
use_case = (
    "This is a Union Bank cheque book. I need to create synthetic test data to "
    "evaluate the cheque document processing system. Here’s the sample data: "
    "Bank: Union Bank, Branch: Thrissur, Name: Gokul, Amount: 123,000, "
    "Date: 23-08-2024, Rupees in Words: One Lakh Twenty Three Thousand, "
    "Account Number: 1234567890."
)
prompt_1 = (
    "generate synthetic test data to meet the:" + testcase_1
    + " for the given use case: " + use_case
    + ", you must provide me the test data generated instead of code"
)


def generate_test_data(prompt):
    # stream=True returns an iterator of chunks instead of one full response.
    completion = client.chat.completions.create(
        model="llama-3.1-70b-versatile",
        messages=[{"role": "user", "content": prompt}],
        temperature=1,
        max_tokens=900,
        top_p=1,
        stream=True,
        stop=None,
    )
    return completion


output_response = generate_test_data(prompt_1)

# Print the streamed response piece by piece as it arrives.
for chunk in output_response:
    print(chunk.choices[0].delta.content or "", end="")
```

Output:

```text
Here's some synthetic test data to check the cheque document processing system's ability to identify different paraphrasing of "Rupees in Words" for the given use case:

1. Original: One Lakh Twenty Three Thousand
   - Test Data 1: One Lakh Twenty-Three Thousand
   - Test Data 2: One Lakh and Twenty-Three Thousand
   - Test Data 3: One Lakh Twenty Three Thousand Only
   - Test Data 4: Rupees One Lakh Twenty Three Thousand
   - Test Data 5: One Lakh Twenty Three Thousand Rupees

2. Different wordings:
   - Test Data 6: One Hundred Twenty Three Thousand
   - Test Data 7: One Lakh and Twenty Three Thousand
   - Test Data 8: Twenty Three Thousand One Hundred
   - Test Data 9: One Lakh Twenty Three Thousand and Zero Paise
   - Test Data 10: One Lakh Twenty Three Thousand and Zero Paisa

3. Abbreviations and short forms:
   - Test Data 11: One Lakh Twenty Three K
   - Test Data 12: 1 Lakh Twenty Three Thousand
   - Test Data 13: One L Twenty Three Thousand
   - Test Data 14: 1 Lakh 23 Thousand
   - Test Data 15: One Lakh 23 K

4. Misspelled words:
   - Test Data 16: One Lak Twenty Three Thousand
   - Test Data 17: One Lakh Twent Three Thousand
   - Test Data 18: One Lakh Twenty Three Thousnad
   - Test Data 19: One Lakh Twenty Three Thousend
   - Test Data 20: One Lakh Twenty Three Thousan
```

In the above approach, the LLM develops a richer understanding of the test data requirements by following a structured input template, proving far more effective than simply issuing a generic prompt for a dataset. This template provides essential context, constraints, and details that guide the model, yielding data precisely tailored to testing needs. The output reveals the nuanced variations the LLM can introduce—subtle distinctions that a tester might overlook.
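
Not every generated variant necessarily represents the same amount, so it can help to sort the variants before using them as positive or negative test inputs. The sketch below is one possible way to do that, assuming a small hand-written vocabulary; `words_to_number` and `classify_variant` are hypothetical helpers, and anything outside the vocabulary (digits, abbreviations, misspellings, "Paise") is deliberately reported as unparsed for human review.

```python
# Small illustrative vocabulary; not an exhaustive number parser.
SMALL = {
    "zero": 0, "one": 1, "two": 2, "three": 3, "four": 4, "five": 5,
    "six": 6, "seven": 7, "eight": 8, "nine": 9, "ten": 10,
    "eleven": 11, "twelve": 12, "thirteen": 13, "fourteen": 14,
    "fifteen": 15, "sixteen": 16, "seventeen": 17, "eighteen": 18,
    "nineteen": 19, "twenty": 20, "thirty": 30, "forty": 40,
    "fifty": 50, "sixty": 60, "seventy": 70, "eighty": 80, "ninety": 90,
}
SCALES = {"thousand": 1_000, "lakh": 100_000, "crore": 10_000_000}
FILLER = {"and", "only", "rupees"}  # words that do not change the amount


def words_to_number(text):
    """Convert an amount in words to an int, or None if any word is unknown."""
    total, current = 0, 0
    for token in text.lower().replace("-", " ").split():
        if token in FILLER:
            continue
        if token in SMALL:
            current += SMALL[token]
        elif token == "hundred":
            current = max(current, 1) * 100
        elif token in SCALES:
            total += max(current, 1) * SCALES[token]
            current = 0
        else:
            return None  # unknown word: leave the decision to a reviewer
    return total + current


def classify_variant(text, expected):
    """Label a variant as equivalent, a different amount, or unparsed."""
    value = words_to_number(text)
    if value is None:
        return "unparsed"
    return "equivalent" if value == expected else "different amount"


for variant in [
    "One Lakh Twenty-Three Thousand Only",
    "Twenty Three Thousand One Hundred",
    "One Lak Twenty Three Thousand",
]:
    print(variant, "->", classify_variant(variant, 123_000))
```

Variants labelled as a different amount or unparsed are still useful, but as negative or robustness cases rather than as alternative spellings of the same value.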

Synthetic Data Generation for Classification: A Credit Processing Case Study

When generating synthetic test data for classification tasks, such as a credit processing system, the complexity increases significantly. Consider a dataset with attributes like Loan ID, Name, Gender, Marital Status, Dependents, Education, Employment, Income, Loan Amount, Loan Term, Credit History, and the crucial “Issue Status” (the Loan_Status column in the code below). While LLMs can generate data that appears accurate at first glance, the real challenge lies in validating the “Issue Status” column.

The key questions we face are:

- How can we ensure the LLM’s classification for each synthetic data point is accurate?

- As testers, how do we verify that the classifications in the generated data align with the expected output of the actual system?

To enhance the effectiveness of LLMs in this scenario, we’ve found that refining our prompting template is crucial. Our approach involves guiding the LLM to consider the intricate correlations between various dataset features and the target classification. By leveraging the LLM’s vast embedded knowledge, we can improve its ability to classify data based on recognized patterns within the dataset.

We’ve also introduced an innovative feature to support classification accuracy: an “additional_info” field in our template. This field provides a rationale for each classification, offering testers transparent insights into why the LLM assigned a specific “Issue Status” to each generated data point. This addition not only aids in verification but also enhances the interpretability of the synthetic data.

Code:

````python
from groq import Groq
import pandas as pd
import json
import csv
import re

client = Groq(api_key="your-api-key")

# Load the reference loan data and flatten each row into one line of text.
df = pd.read_csv("path-to-the-csv")
columns = [
    "Loan_ID", "Gender", "Married", "Dependents", "Education", "Self_Employed",
    "ApplicantIncome", "CoapplicantIncome", "LoanAmount", "Loan_Amount_Term",
    "Credit_History", "Property_Area", "Loan_Status",
]
training_data_str = ""
for index, row in df.iterrows():
    training_data_str += ", ".join(f"{col}: {row[col]}" for col in columns) + "\n"

# Template inputs: test case, use case, constraint, and additional information.
testcase_1 = "To verify if the credit processing system can make the correct decision when the applicant's income is lower than the loan amount."
use_case = (
    "This is a credit processing system of a bank, I want to generate synthetic "
    "test data to test the credit processing system, here is the data:"
    + training_data_str
    + "study this data provided in the df in detail and understand the "
    "relationship between the columns and how it is affecting the decision "
    "(Loan_Status) of the credit processing system"
)
constraint = "The test data generated should be in the same format as the input data provided in the df"
additional_info = "Provide the reason for deciding the Loan_Status for each test data generated"

# Example of the expected output structure, including the "Reason" field.
json_format = {
    "Synthetic_test_data": [
        {
            "Sl_No": 1, "Loan_ID": "LP001002", "Gender": "Male", "Married": "Yes",
            "Dependents": 0, "Education": "Graduate", "Self_Employed": "No",
            "ApplicantIncome": 5849, "CoapplicantIncome": 0, "LoanAmount": 146,
            "Loan_Amount_Term": 360.0, "Credit_History": 1.0,
            "Property_Area": "Urban", "Loan_Status": "Y",
            "Reason": "The applicant is married and has a good credit history, so the loan is approved",
        },
        {
            "Sl_No": 2, "Loan_ID": "LP001003", "Gender": "Male", "Married": "Yes",
            "Dependents": 0, "Education": "Graduate", "Self_Employed": "No",
            "ApplicantIncome": 849, "CoapplicantIncome": 0, "LoanAmount": 16,
            "Loan_Amount_Term": 36.0, "Credit_History": 1.0,
            "Property_Area": "Urban", "Loan_Status": "Y",
            "Reason": "The applicant is married and has a good credit history, so the loan is approved",
        },
    ]
}

# json.dumps keeps the example as valid JSON (double quotes) inside the prompt.
prompt_1 = (
    "try to generate synthetic test data to meet the:" + testcase_1
    + " for the given use case: " + use_case
    + ", considering the constraint: " + constraint
    + ", and provide the additional information: " + additional_info
    + " in the following JSON format: " + json.dumps(json_format)
)


def generate_test_data(prompt):
    completion = client.chat.completions.create(
        model="llama-3.1-70b-versatile",
        messages=[
            {"role": "system", "content": "'JSON'"},
            {"role": "user", "content": prompt},
        ],
        temperature=1,
        max_tokens=1024,
        top_p=1,
        stream=False,
        stop=None,
    )
    return completion


output_response = generate_test_data(prompt_1).choices[0].message

# Extract the JSON payload from the fenced json block in the response.
json_data_match = re.search(r'```json\n(.*?)\n```', output_response.content, re.DOTALL)
if json_data_match:
    json_data_str = json_data_match.group(1)
else:
    raise ValueError("Could not find JSON data in the input string.")

data = json.loads(json_data_str)
synthetic_test_data = data.get("Synthetic_test_data", [])
if not synthetic_test_data:
    raise ValueError("Could not find 'Synthetic_test_data' in the parsed JSON.")

# Write the generated rows to a CSV file, using the first row's keys as headers.
csv_file = "synthetic_test_data.csv"
headers = synthetic_test_data[0].keys()
with open(csv_file, mode='w', newline='') as file:
    writer = csv.DictWriter(file, fieldnames=headers)
    writer.writeheader()
    writer.writerows(synthetic_test_data)
````

Output:

```text
Based on the data provided in the df, I've identified the following relationships between the columns and how they affect the decision (Loan_Status) of the credit processing system:

1. **ApplicantIncome** and **LoanAmount**: There is a positive correlation between these two columns. Applicants with higher incomes are more likely to be approved for larger loan amounts.
2. **Credit_History**: A good credit history (1.0) is a strong indicator of a loan being approved, while a poor credit history (0.0) is a strong indicator of a loan being rejected.
3. **Loan_Amount_Term**: Loans with shorter terms (e.g., 360 months) are more likely to be approved than loans with longer terms.
4. **Education**: Applicants with a graduate education are more likely to be approved for a loan than those with a non-graduate education.
5. **Property_Area**: The location of the property (Urban, Semiurban, or Rural) does not have a significant impact on the loan approval decision.
6. **Gender** and **Married**: These columns do not have a significant impact on the loan approval decision.

Considering the constraint of generating test data in the same format as the input data, I've created the following test data:

**Test Data 1: High-Income Applicant with Good Credit History**
* Loan_ID: LP002991
* Gender: Male
* Married: Yes
* Dependents: 0
* Education: Graduate
* Self_Employed: No
* ApplicantIncome: 800000
* CoapplicantIncome: 0.0
* LoanAmount: 50000.0
* Loan_Amount_Term: 84.0
* Credit_History: 1.0
* Property_Area: Urban
Loan_Status: Y (Approved)
Reason: High income, good credit history, and a short loan term make this applicant a low-risk borrower.

**Test Data 2: Low-Income Applicant with Poor Credit History**
* Loan_ID: LP002992
* Gender: Female
* Married: No
* Dependents: 3+
* Education: Not Graduate
* Self_Employed: Yes
* ApplicantIncome: 15000
* CoapplicantIncome: 0.0
* LoanAmount: 10000.0
* Loan_Amount_Term: 360.0
* Credit_History: 0.0
* Property_Area: Rural
Loan_Status: N (Rejected)
Reason: Low income, poor credit history, and a long loan term make this applicant a high-risk borrower.

**Test Data 3: Moderate-Income Applicant with Good Credit History**
* Loan_ID: LP002993
* Gender: Male
* Married: Yes
* Dependents: 2
* Education: Graduate
* Self_Employed: No
* ApplicantIncome: 45000
* CoapplicantIncome: 20000.0
* LoanAmount: 20000.0
* Loan_Amount_Term: 180.0
* Credit_History: 1.0
* Property_Area: Semiurban
Loan_Status: Y (Approved)
Reason: Moderate income, good credit history, and a short loan term make this applicant a moderate-risk borrower. The large coapplicant income also reduces the risk.

**Test Data 4: High-Income Applicant with Poor Credit History**
* Loan_ID: LP002994
* Gender: Female
* Married: No
* Dependents: 0
* Education: Graduate
* Self_Employed: Yes
* ApplicantIncome: 70000
* CoapplicantIncome: 0.0
* LoanAmount: 40000.0
* Loan_Amount_Term: 360.0
* Credit_History: 0.0
* Property_Area: Urban
Loan_Status: N (Rejected)
Reason: Poor credit history, despite high income, makes this applicant a high-risk borrower.

These test data examples cover different scenarios and demonstrate how the relationships between columns affect the loan approval decision.
```
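
One way to approach the two verification questions raised earlier is to treat the LLM’s label as a hypothesis and compare it with what the system under test actually returns. The sketch below assumes the generated rows are available as dictionaries; `find_label_mismatches` is a hypothetical helper, and `toy_system` is only a stand-in rule (approve when Credit_History is 1.0) used to make the example runnable, not the real credit processing system.

```python
def find_label_mismatches(rows, predict):
    """Compare the LLM-assigned Loan_Status with the system's own decision."""
    mismatches = []
    for row in rows:
        # Hide the label and rationale so the system only sees input features.
        features = {k: v for k, v in row.items() if k not in ("Loan_Status", "Reason")}
        system_label = predict(features)
        if system_label != row["Loan_Status"]:
            mismatches.append({
                "Loan_ID": row.get("Loan_ID"),
                "llm_label": row["Loan_Status"],
                "system_label": system_label,
                "reason": row.get("Reason", ""),
            })
    return mismatches


def toy_system(features):
    # Stand-in for the real system under test (assumption for illustration).
    return "Y" if features.get("Credit_History") == 1.0 else "N"


rows = [
    {"Loan_ID": "LP002991", "Credit_History": 1.0, "Loan_Status": "Y", "Reason": "Good credit history"},
    {"Loan_ID": "LP002994", "Credit_History": 0.0, "Loan_Status": "N", "Reason": "Poor credit history"},
    {"Loan_ID": "LP009999", "Credit_History": 1.0, "Loan_Status": "N", "Reason": "Income below loan amount"},
]
for item in find_label_mismatches(rows, toy_system):
    print(item)
```

A mismatch does not say which side is wrong. It marks the rows a tester should inspect, and the recorded reason gives a starting point for that review.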

Validating Synthetic Test Data for Regression Models: A Comprehensive Approach

When generating synthetic test data for regression use cases, it’s crucial to ensure that the artificial data closely mirrors the characteristics of real-world datasets. This validation process involves several key steps:

1. Feature Distribution Analysis

Begin by comparing the statistical attributes of synthetic data against real data. This includes examining the mean, standard deviation, skewness, and correlations of features. Aligning these properties helps maintain the natural variability and diversity found in real-world datasets, which is essential for realistic testing scenarios.
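
A minimal sketch of this comparison for a single numeric feature, using only the standard library, might look like the following. The helper names `summarize` and `compare_feature` are illustrative, the sample values are made up, and what counts as an acceptable gap is left to the reader.

```python
import statistics


def summarize(values):
    """Mean, population standard deviation, and skewness of a numeric feature."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    # Skewness as the average cubed z-score; defined as 0 for constant data.
    skew = 0.0 if std == 0 else sum(((v - mean) / std) ** 3 for v in values) / len(values)
    return {"mean": mean, "std": std, "skew": skew}


def compare_feature(real, synthetic):
    """Place real and synthetic statistics side by side with their absolute gap."""
    r, s = summarize(real), summarize(synthetic)
    return {
        stat: {"real": r[stat], "synthetic": s[stat], "gap": abs(r[stat] - s[stat])}
        for stat in r
    }


real_income = [5849, 4583, 3000, 2583, 6000, 5417]        # made-up sample values
synthetic_income = [800000, 15000, 45000, 70000]          # made-up sample values
for stat, row in compare_feature(real_income, synthetic_income).items():
    print(stat, row)
```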

2. Range and Outlier Verification

Carefully review the range of each feature to confirm that synthetic data values fall within expected bounds. Identify and compare any unusual outliers with those in the real data. Discrepancies in outlier representation could introduce bias, potentially making the model either overly sensitive or insensitive to rare but realistic cases.
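
The check described above can be sketched with the common 1.5 × IQR rule, where the fences are derived from the real data and then applied to both datasets. This rule is one assumption among several reasonable outlier definitions, and `range_and_outlier_report` is a hypothetical helper name.

```python
import statistics


def range_and_outlier_report(real, synthetic):
    """Compare synthetic values against the real range and real-data IQR fences."""
    q1, _, q3 = statistics.quantiles(real, n=4)
    iqr = q3 - q1
    low_fence, high_fence = q1 - 1.5 * iqr, q3 + 1.5 * iqr

    def outlier_share(values):
        outside = [v for v in values if v < low_fence or v > high_fence]
        return len(outside) / len(values)

    return {
        # Synthetic values that fall outside anything seen in the real data.
        "out_of_range": [v for v in synthetic if v < min(real) or v > max(real)],
        "real_outlier_share": outlier_share(real),
        "synthetic_outlier_share": outlier_share(synthetic),
    }


real = [10, 12, 11, 13, 12, 11, 10, 14, 12, 50]       # made-up values, one outlier
synthetic = [11, 12, 13, 12, 11, 10, 12, 13, 11, 12]  # made-up values, no outliers
print(range_and_outlier_report(real, synthetic))
```

In this made-up example the synthetic set stays in range but contains no outliers at all, which is the kind of gap that can leave rare but realistic cases untested.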

3. Class Balance Assessment

Even in regression tasks, categorical variables play a crucial role. Ensure that the distribution across classes in the synthetic data aligns with the real dataset. Imbalances in class representation can skew predictions, particularly if certain categories are tied to specific trends in the data.
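
For a categorical column, this comparison reduces to comparing category shares. The sketch below uses made-up Property_Area values; `category_shares` and `share_gaps` are hypothetical helper names.

```python
from collections import Counter


def category_shares(values):
    """Fraction of rows belonging to each category."""
    counts = Counter(values)
    return {category: count / len(values) for category, count in counts.items()}


def share_gaps(real, synthetic):
    """Synthetic share minus real share per category (positive = over-represented)."""
    real_shares, synthetic_shares = category_shares(real), category_shares(synthetic)
    categories = sorted(set(real_shares) | set(synthetic_shares))
    return {c: synthetic_shares.get(c, 0.0) - real_shares.get(c, 0.0) for c in categories}


real_area = ["Urban"] * 5 + ["Rural"] * 5        # made-up values
synthetic_area = ["Urban"] * 8 + ["Rural"] * 2   # made-up values
print(share_gaps(real_area, synthetic_area))
```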

4. Correlation and Relationship Examination

Assess both linear and non-linear correlations between features and the target variable, as well as between features themselves. Synthetic data that accurately mirrors these relationships can produce more reliable model outcomes, as it represents the true dependencies within real-world data.
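
As a rough illustration, Pearson correlation captures linear relationships while Spearman rank correlation also captures monotonic non-linear ones, so computing both for real and synthetic data gives a quick first comparison. The sketch below implements both with the standard library; the function names and sample values are assumptions for illustration.

```python
import math


def pearson(x, y):
    """Pearson correlation; returns 0.0 when either series is constant."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return 0.0 if vx == 0 or vy == 0 else cov / math.sqrt(vx * vy)


def ranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def spearman(x, y):
    """Spearman correlation: Pearson correlation computed on ranks."""
    return pearson(ranks(x), ranks(y))


def correlation_gap(real_x, real_y, syn_x, syn_y):
    """Absolute difference between real and synthetic correlations."""
    return {
        "pearson_gap": abs(pearson(real_x, real_y) - pearson(syn_x, syn_y)),
        "spearman_gap": abs(spearman(real_x, real_y) - spearman(syn_x, syn_y)),
    }


feature = [1, 2, 3, 4, 5]
target = [1, 4, 9, 16, 25]  # monotonic but non-linear in the feature
print(pearson(feature, target), spearman(feature, target))
```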

5. Feature Interactions and Multicollinearity Replication

For features that interact, evaluate whether the synthetic data captures these relationships as accurately as the real data. Additionally, multicollinearity—when two or more variables are highly correlated—should be replicated in the synthetic data. This is crucial as it influences the model’s interpretation of feature importance and interaction.
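
A simple first check for multicollinearity is to find feature pairs that are strongly correlated in the real data and see whether the synthetic data preserves that correlation. The sketch below assumes each dataset is a dictionary of equally long numeric columns; the helper name `lost_collinear_pairs`, the thresholds, and the sample values are all illustrative, and pairwise correlation is a simpler stand-in for fuller diagnostics such as variance inflation factors.

```python
import math
from itertools import combinations


def pearson(x, y):
    """Pearson correlation; returns 0.0 when either series is constant."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return 0.0 if vx == 0 or vy == 0 else cov / math.sqrt(vx * vy)


def lost_collinear_pairs(real, synthetic, threshold=0.9, tolerance=0.2):
    """List pairs that are highly correlated in real data but not in synthetic data."""
    flagged = []
    for a, b in combinations(real, 2):
        r_real = pearson(real[a], real[b])
        if abs(r_real) < threshold:
            continue  # not a collinear pair in the real data
        r_syn = pearson(synthetic[a], synthetic[b])
        if abs(r_real - r_syn) > tolerance:
            flagged.append((a, b, r_real, r_syn))
    return flagged


# Made-up columns: loan tracks income exactly in the real data only.
real = {"income": [1, 2, 3, 4, 5], "loan": [2, 4, 6, 8, 10], "term": [5, 3, 4, 1, 2]}
synthetic = {"income": [1, 2, 3, 4, 5], "loan": [10, 2, 8, 4, 6], "term": [5, 3, 4, 1, 2]}
for pair in lost_collinear_pairs(real, synthetic):
    print(pair)
```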

By meticulously following these validation steps, we can ensure that our LLM-generated synthetic test data for regression models closely resembles real-world data. This approach enhances the reliability and effectiveness of our testing processes, ultimately leading to more robust and accurate regression models.

Final Thoughts

As we conclude our exploration of LLM-generated synthetic data, it’s clear we’re on the cusp of a data revolution. This technology has the potential to transform AI development, testing, and deployment. However, we must proceed with caution.

The path from synthetic data to real-world AI solutions is complex. We must remain vigilant about data quality and relevance, ensuring it truly reflects real-world scenarios. The challenge lies in balancing the power of LLMs to fill data gaps with the need to prepare AI models for real-world complexity.

As we move forward, we’re walking a tightrope between synthetic idealism and real-world messiness. But it’s a journey worth taking. The future of AI, powered by realistic synthetic data, is both promising and exciting. Are you ready to dive in?

About the Author

Krishnadas, a Post-Graduate Engineer, is currently applying his skills as part of the dynamic Machine Learning (ML) team at Founding Minds. With a strong foundation in Data Science and Artificial Intelligence, Krishnadas transitioned into this field after pursuing a course in AI to align his career with emerging technologies. He specializes in the end-to-end testing of ML systems, particularly within agile environments. Fueled by a passion for exploration, Krishnadas enjoys a variety of hobbies, from taking road trips and singing to experimenting in the kitchen.

Vishnu Prakash is a Quality Assurance professional at Founding Minds with over 7 years of experience in the meticulous testing of web and mobile applications. With a strong foundation in ensuring software excellence, he has developed a keen eye for identifying bugs, improving user experience, and enhancing product quality across diverse platforms. Beyond traditional testing, he is passionate about the integration of Artificial Intelligence (AI) and Machine Learning (ML) into the realm of software testing and development. Vishnu’s personal interests rev up around motorcycles and the excitement of discovering new destinations through travel.