In the fast-evolving field of artificial intelligence, optimizing large language models (LLMs) has become a key challenge. Fine-tuning has emerged as a practical solution to adapt these models for specific tasks, enhancing their performance without the need for complete re-training. However, traditional fine-tuning requires substantial resources, including powerful GPUs, large datasets, and expertise, making it expensive and time-consuming.

To overcome these challenges, Parameter-Efficient Fine-Tuning (PEFT) methods such as Low-Rank Adaptation (LoRA) and Quantized LoRA (QLoRA) offer a more accessible alternative. Instead of updating every weight, these methods train only a small number of parameters (for LoRA, small added adapter matrices) while keeping the pre-trained model’s weights frozen, which significantly reduces memory and computational costs.

This blog explores how to fine-tune GPT-2 efficiently using PEFT techniques and the Stanford Question Answering Dataset (SQuAD). We walk through the fine-tuning process step by step, using Hugging Face’s Transformers, Datasets, and PEFT libraries to create a specialized instruct model. Whether you’re developing domain-specific chatbots or building models for education, healthcare, or customer support, PEFT provides a way to achieve high performance with fewer resources.

What is LLM Fine-Tuning?

Fine-tuning a Large Language Model (LLM) such as GPT involves adapting a pre-trained model to a specific domain or task using a smaller, targeted dataset. This approach is valuable because it leverages the model’s existing knowledge (acquired from massive datasets) to achieve high performance on specific tasks with minimal additional data and computational resources.

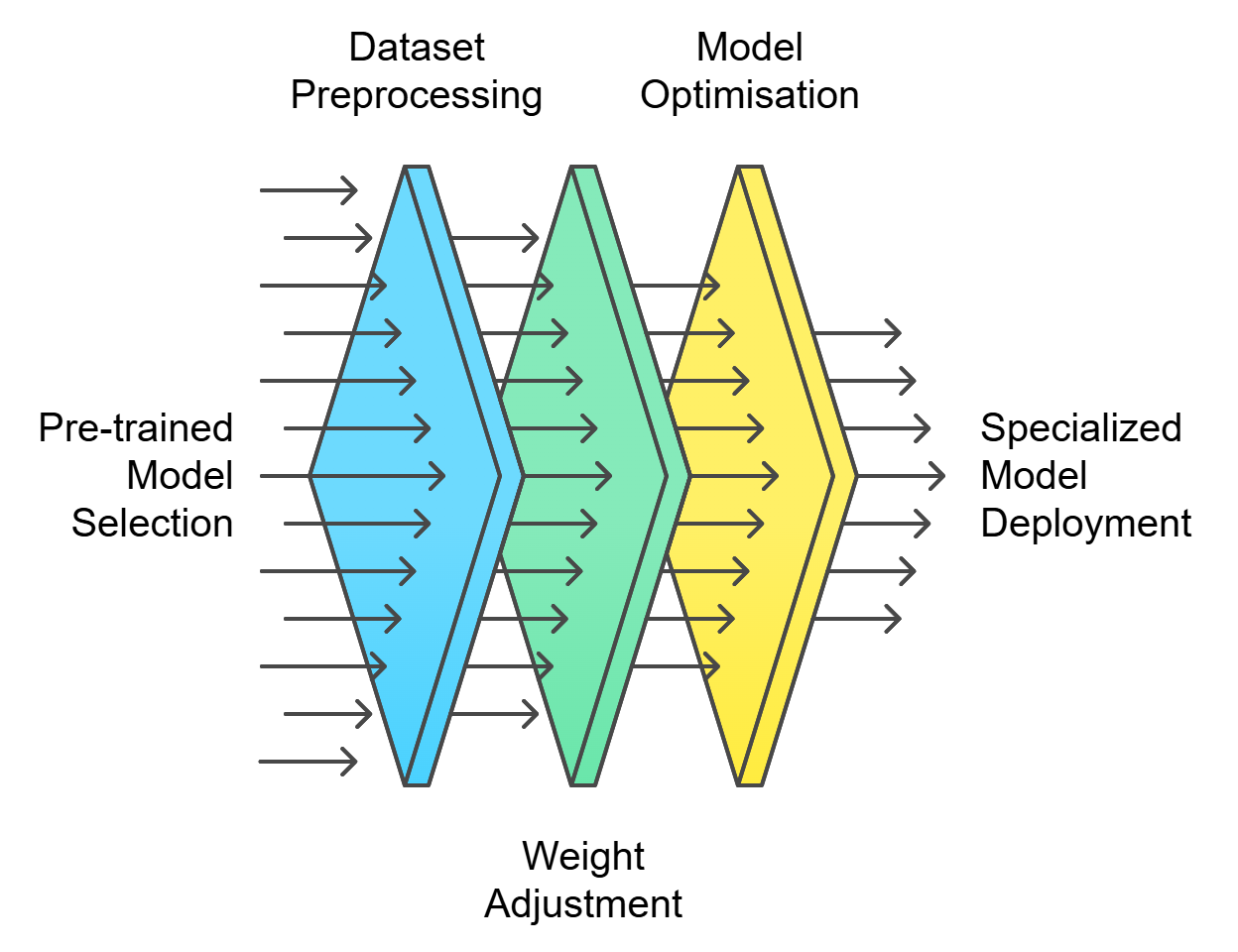

Fine-tuning efficiently creates specialized models from pre-trained models such as GPT-2. The process involves selecting a pre-trained model, preprocessing a task-specific dataset, and adjusting the model’s weights on GPU hardware or cloud services. Techniques such as quantization and QLoRA reduce model size and computational demands. After fine-tuning, the model is evaluated and its hyperparameters are tuned before deployment. This approach cuts training cost and time while producing highly specialized models for a range of applications.

Fine-Tuning LLM

Parameter Efficient Fine-Tuning (PEFT)

As models grow larger, full fine-tuning becomes infeasible on consumer hardware. In addition, storing and deploying a fully fine-tuned model for each task is costly, because every copy is the same size as the original pre-trained model. Parameter-Efficient Fine-Tuning (PEFT) addresses both issues: it fine-tunes only a small number of (often newly added) parameters while freezing most of the pre-trained LLM’s parameters, significantly reducing computational and storage costs. Because the original weights are left untouched, PEFT also helps mitigate catastrophic forgetting, which can occur during full fine-tuning of LLMs. The storage savings come from saving only the fine-tuned parameters, not from quantization. There are various PEFT methods; Low-Rank Adaptation (LoRA) and QLoRA are among the most widely used and effective.

LoRA modifies pre-trained models by learning low-rank updates to the model’s weight matrices, producing small adapters that can be combined with the original model during inference. QLoRA goes further by loading the frozen base-model weights in lower precision, typically 4-bit, to reduce memory requirements, while the LoRA adapters are trained in higher precision.

These techniques enable efficient adaptation of large language models to specific tasks, making fine-tuning more accessible and cost-effective.
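To put rough numbers on the memory savings QLoRA targets, here is a back-of-the-envelope sketch of how much space GPT-2 small’s weights take at different precisions. It assumes GPT-2 small’s parameter count of 124,439,808 (with the output layer tied to the input embeddings) and that every weight is stored at the same precision; in practice bitsandbytes keeps some layers, such as the embeddings, in higher precision and adds per-block quantization constants, so real figures differ.

```python
# Rough weight-memory estimate for GPT-2 small at different precisions.
# Assumption: every parameter is stored at the same precision (real quantized
# models keep some layers in higher precision and store extra scale constants).

GPT2_SMALL_PARAMS = 124_439_808  # openai-community/gpt2, LM head tied to token embeddings

BYTES_PER_PARAM = {
    "float32": 4.0,
    "float16": 2.0,
    "int8": 1.0,
    "4-bit": 0.5,
}

def weight_memory_mb(num_params: int, precision: str) -> float:
    """Approximate weight storage in megabytes (10**6 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e6

for precision in BYTES_PER_PARAM:
    print(f"{precision:>8}: {weight_memory_mb(GPT2_SMALL_PARAMS, precision):8.1f} MB")
```

For a 124M-parameter model the absolute numbers are modest; the same 8x ratio between float32 and 4-bit matters far more for multi-billion-parameter models.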

How to Fine-Tune GPT-2 to Build an Instruct Model

Fine-tuning GPT-2 to create an instruct model involves a series of precise steps that use the Stanford Question Answering Dataset (SQuAD) for task-specific fine-tuning and a PEFT technique to keep the number of trained parameters small. SQuAD is a reading comprehension dataset of 107,785 question-answer pairs drawn from 536 Wikipedia articles; the Hugging Face rajpurkar/squad release (SQuAD v1.1) provides 87,599 training and 10,570 validation examples. Every answer is a span of text from the corresponding passage. (Unanswerable questions were introduced later, in SQuAD 2.0.)
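Because every SQuAD v1.1 answer is a span of its passage, each record stores the answer text together with its character offset (answer_start). The sketch below checks that relationship; the toy example is made up for illustration and only mirrors the field layout of a SQuAD record.

```python
def answer_span(context: str, answer_text: str, answer_start: int) -> str:
    """Return the slice of the context that a SQuAD answer points to."""
    return context[answer_start : answer_start + len(answer_text)]

def is_valid_squad_answer(example: dict) -> bool:
    """Check that every reference answer is an exact character span of the context."""
    answers = example["answers"]
    return all(
        answer_span(example["context"], text, start) == text
        for text, start in zip(answers["text"], answers["answer_start"])
    )

# Hypothetical toy record using the SQuAD field layout
example = {
    "context": "The Grotto is a replica of the grotto at Lourdes, France.",
    "question": "What is the Grotto a replica of?",
    "answers": {"text": ["the grotto at Lourdes, France"], "answer_start": [27]},
}
print(is_valid_squad_answer(example))
```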

The data format is crucial when training a language model like GPT-2. GPT-2 is trained to predict the next token from the preceding ones, so turning it into an instruct model means changing what its training sequences look like. Marker tokens such as <|system|>, <|user|>, <|assistant|>, and <|end|> are inserted into each example to label the role of every segment of text. (In the code below they are plain text rather than registered special tokens, so GPT-2’s tokenizer splits each marker into a few sub-word tokens.) These markers help GPT-2 produce more structured, targeted responses in the desired format and style.

For example,

```text
<|system|> You are a question answer assistant chatbot named "QA Bot". Your expertise is exclusively in providing information and advice related only to the given content. This includes content related question answer queries. You do not provide information outside of this scope. If a question is not about the content, respond with, "I specialize only in content related queries". <|end|>
<|assistant|> QA Bot here! Ask me anything related to the content you provide. Content only, though! <|end|>
<|user|> Next to the Main Building is the Basilica of the Sacred Heart. Immediately behind the basilica is the Grotto, a Marian place of prayer and reflection. It is a replica of the grotto at Lourdes, France where the Virgin Mary reputedly appeared to Saint Bernadette Soubirous in 1858. At the end of the main drive (and in a direct line that connects through 3 statues and the Gold Dome), is a simple, modern stone statue of Mary. <|end|>
<|assistant|> Ask questions regarding this content. <|end|>
<|user|> To whom did the Virgin Mary allegedly appear in 1858 in Lourdes, France? <|end|>
<|assistant|> Saint Bernadette Soubirous <|end|>
```
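The conversation above follows a simple pattern: each turn is a role marker, the turn’s text, and <|end|>. Here is a minimal sketch of a helper that renders a list of (role, text) turns in that pattern; render_chat and render_prompt are hypothetical names, and the spacing simply mirrors the example. For generation, the prompt would end with an open <|assistant|> marker so that the model writes the answer next.

```python
# Hypothetical helpers that render (role, text) turns in the marker format shown above.
ROLES = {"system", "user", "assistant"}

def render_chat(turns):
    """Join turns as '<|role|> text <|end|>' separated by single spaces."""
    parts = []
    for role, text in turns:
        if role not in ROLES:
            raise ValueError(f"unknown role: {role}")
        parts.append(f"<|{role}|> {text} <|end|>")
    return " ".join(parts)

def render_prompt(turns):
    """Prompt for generation: the conversation so far plus an open assistant marker."""
    return render_chat(turns) + " <|assistant|>"

print(render_prompt([
    ("user", "Next to the Main Building is the Basilica of the Sacred Heart."),
    ("assistant", "Ask questions regarding this content."),
    ("user", "What is next to the Main Building?"),
]))
```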

On top of this data format, Parameter-Efficient Fine-Tuning (PEFT), specifically Low-Rank Adaptation (LoRA) and its quantized variant QLoRA, keeps fine-tuning efficient in memory and compute.

Here’s how to fine-tune GPT-2 into an instruct model using the Stanford Question Answering Dataset (SQuAD) and Hugging Face’s Transformers library. The process improves GPT-2’s ability to understand questions and generate precise, contextually appropriate responses tailored to instruction-based tasks. Let’s break down the key steps:

- Environment Setup: Prepare the necessary tools and libraries, including Python, PyTorch, and Hugging Face’s Transformers, Datasets, PEFT, and Evaluate libraries, along with bitsandbytes and Accelerate, to create a suitable development environment for fine-tuning GPT-2.

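```python
# Notebook cell (drop the leading "!" in a terminal); PyTorch is assumed to be installed.
# scikit-learn is required by the "f1" metric of the evaluate library.
!pip install bitsandbytes accelerate datasets peft protobuf evaluate scikit-learn transformers
```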

- Loading the SQuAD Dataset: Download and process the Stanford Question Answering Dataset (SQuAD).

```python
from datasets import load_dataset

# Load the SQuAD dataset from the Hugging Face Hub
dataset_name = "rajpurkar/squad"
dataset = load_dataset(dataset_name)
print(dataset["train"][0])

# Output format:
# {'id': '5733be284776f41900661182',
#  'title': 'University_of_Notre_Dame',
#  'context': 'Architecturally, the school has a Catholic character. Atop the Main Building\'s gold dome is a golden statue of the Virgin Mary. Immediately in front of the Main Building and facing it, is a copper statue of Christ with arms upraised with the legend "Venite Ad Me Omnes". Next to the Main Building is the Basilica of the Sacred Heart. Immediately behind the basilica is the Grotto, a Marian place of prayer and reflection. It is a replica of the grotto at Lourdes, France where the Virgin Mary reputedly appeared to Saint Bernadette Soubirous in 1858. At the end of the main drive (and in a direct line that connects through 3 statues and the Gold Dome), is a simple, modern stone statue of Mary.',
#  'question': 'To whom did the Virgin Mary allegedly appear in 1858 in Lourdes France?',
#  'answers': {'text': ['Saint Bernadette Soubirous'], 'answer_start': [515]}}
```

- Apply bitsandbytes Quantization: Use the bitsandbytes library to load the model weights in 8-bit or 4-bit precision. This substantially reduces memory usage, though it may slightly reduce accuracy. Loading the frozen base model in 4-bit is the core of QLoRA.

```python
import torch
from transformers import BitsAndBytesConfig

# Compute dtype: float16 (half precision) reduces memory usage and can speed up computation
compute_dtype = torch.float16

# Configure bitsandbytes quantization for the model
bnb_config = BitsAndBytesConfig(
    load_in_4bit=True,                     # Load the weights in 4-bit precision to reduce memory requirements
    bnb_4bit_quant_type="nf4",             # 4-bit NormalFloat (NF4), designed for normally distributed weights
    bnb_4bit_compute_dtype=compute_dtype,  # Dtype used for the matrix multiplications during computation
    bnb_4bit_use_double_quant=False,       # Skip double quantization (quantizing the quantization constants to save a little more memory)
)
```
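To see what 4-bit quantization does to the numbers, here is a deliberately simplified sketch of blockwise absmax quantization: each block of weights is scaled by its largest absolute value and rounded to one of 15 integer levels in [-7, 7]. This is not bitsandbytes’ NF4 implementation, which maps values onto 16 non-uniform levels chosen for normally distributed weights; the block size and sample weights here are arbitrary.

```python
def quantize_block(values, bits=4):
    """Absmax-quantize one block to signed integers in [-(2**(bits-1) - 1), 2**(bits-1) - 1]."""
    qmax = 2 ** (bits - 1) - 1  # 7 for 4-bit
    absmax = max(abs(v) for v in values)
    scale = absmax / qmax if absmax > 0 else 1.0  # one float scale stored per block
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return codes, scale

def dequantize_block(codes, scale):
    """Map integer codes back to approximate float values."""
    return [c * scale for c in codes]

def quantize_blockwise(values, block_size=64, bits=4):
    """Split a flat list of weights into blocks and quantize each block independently."""
    return [quantize_block(values[i:i + block_size], bits) for i in range(0, len(values), block_size)]

def dequantize_blockwise(blocks):
    """Concatenate the dequantized blocks back into one flat list."""
    restored = []
    for codes, scale in blocks:
        restored.extend(dequantize_block(codes, scale))
    return restored

# Made-up weights, quantized in blocks of 4
weights = [0.12, -0.5, 0.33, 0.02, -0.07, 0.49, -0.21, 0.0]
blocks = quantize_blockwise(weights, block_size=4)
restored = dequantize_blockwise(blocks)
for original, approx in zip(weights, restored):
    print(f"{original:+.3f} -> {approx:+.3f}")
```

Each weight now needs 4 bits plus a share of one scale per block, and the rounding error per weight is at most half of its block’s scale.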

- Loading and Preparing the Pretrained Model: Initialize the pre-trained GPT-2 (small) model and its associated tokenizer from the Hugging Face model hub, setting it up for fine-tuning on the SQuAD dataset.

```python
from transformers import GPT2TokenizerFast, GPT2LMHeadModel, BitsAndBytesConfig

# LLM model: GPT-2 (small)
llm_model_name = "openai-community/gpt2"
tokenizer = GPT2TokenizerFast.from_pretrained(llm_model_name)
# GPT-2 has no padding token, so reuse the end-of-sequence token for padding
tokenizer.pad_token = tokenizer.eos_token

# Load the model in 4-bit using the BitsAndBytesConfig from the previous step
gpt2_model = GPT2LMHeadModel.from_pretrained(llm_model_name, quantization_config=bnb_config)
```

- Preprocessing the Dataset: Transform the SQuAD data into a format suitable for GPT-2 fine-tuning, which may include tokenization, adding special tokens to delineate questions and answers, and creating input-output pairs that match the model’s expected format.

```python
def create_chat_format(data):
    # System prompt and greeting that open every conversation
    model_chat_template = (
        '<|system|> You are a question answer assistant chatbot named "QA Bot". '
        "Your expertise is exclusively in providing information and advice related only to the given content. "
        "This includes content related question answer queries. "
        "You do not provide information outside of this scope. "
        'If a question is not about the content, respond with, "I specialize only in content related queries". <|end|> '
        "<|assistant|> QA Bot here! Ask me anything related to the content you provide. Content only, though! <|end|> "
        "<|user|> "
    )
    # Assistant turn that follows the user-supplied content
    template = " <|end|> <|assistant|> Ask questions regarding this content. <|end|> <|user|> "

    context = data["context"]
    question = data["question"]
    answer = data["answers"]["text"][0]  # first reference answer

    # Full conversation, ending with the reference answer after the last <|assistant|> marker.
    # DataCollatorForLanguageModeling(mlm=False) derives the labels from input_ids,
    # so the answer has to be part of input_ids for the model to learn to produce it.
    text = (
        model_chat_template + context + template + question
        + " <|end|> <|assistant|> " + answer + " <|end|>"
    )

    # Tokenize and pad to a fixed length; very long contexts are truncated from the right,
    # which can cut off the question and answer
    encodings = tokenizer(text, max_length=512, truncation=True, padding="max_length")
    return {"input_ids": encodings["input_ids"], "attention_mask": encodings["attention_mask"]}


train_dataset = dataset["train"]
test_dataset = dataset["validation"]

print("Tokenizing train dataset...")
tokenized_train_dataset = train_dataset.map(create_chat_format, batched=False, remove_columns=train_dataset.column_names)
print("Tokenizing test dataset...")
tokenized_test_dataset = test_dataset.map(create_chat_format, batched=False, remove_columns=test_dataset.column_names)
```
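With DataCollatorForLanguageModeling(mlm=False), the labels are a copy of input_ids with padding masked, so the loss covers the prompt as well as the answer. A common alternative, not used in this walkthrough, is to compute the loss only on the answer tokens by setting the prompt positions to -100, the index that PyTorch’s cross-entropy loss ignores by default. The sketch below shows only the masking logic on made-up token ids; build_answer_only_labels is a hypothetical helper, and prompt_length is assumed to come from tokenizing the prompt on its own. Hugging Face causal LMs shift labels internally, so the labels stay aligned with input_ids. Such labels would then need a collator that leaves them untouched, for example transformers.default_data_collator when every example is already padded to the same length.

```python
IGNORE_INDEX = -100  # default ignore_index of PyTorch's CrossEntropyLoss

def build_answer_only_labels(input_ids, attention_mask, prompt_length):
    """Hypothetical helper: copy input_ids into labels, masking the prompt and the padding."""
    labels = []
    for position, (token_id, mask) in enumerate(zip(input_ids, attention_mask)):
        if position < prompt_length or mask == 0:
            labels.append(IGNORE_INDEX)  # no loss on prompt or padding tokens
        else:
            labels.append(token_id)      # loss on answer tokens
    return labels

# Made-up ids: 3 prompt tokens, 2 answer tokens, 2 padding tokens (50256 is GPT-2's eos id, reused as pad)
input_ids = [101, 102, 103, 201, 202, 50256, 50256]
attention_mask = [1, 1, 1, 1, 1, 0, 0]
print(build_answer_only_labels(input_ids, attention_mask, prompt_length=3))
```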

- Prepare the Model for QLoRA and Set Up PEFT: Configure the quantized GPT-2 model for Quantized Low-Rank Adaptation (QLoRA) and set up Parameter-Efficient Fine-Tuning (PEFT), reducing memory requirements and computational costs while maintaining performance.

```python
from peft import LoraConfig, get_peft_model, prepare_model_for_kbit_training

# Prepare the 4-bit model for training with PEFT's prepare_model_for_kbit_training
original_model = prepare_model_for_kbit_training(gpt2_model)

# LoRA configuration for QLoRA (Quantized Low-Rank Adaptation)
config = LoraConfig(
    r=32,               # Rank of the low-rank adaptation matrices
    lora_alpha=32,      # Scaling factor for the low-rank update (scaling = lora_alpha / r)
    target_modules=[
        "attn.c_attn",  # GPT-2's combined query/key/value projection
        "attn.c_proj",  # GPT-2's attention output projection
    ],
    bias="none",        # Do not train bias terms
    lora_dropout=0.05,  # Dropout rate for the LoRA layers to reduce overfitting
    task_type="CAUSAL_LM",
)

# Enable gradient checkpointing to reduce memory usage during fine-tuning
original_model.gradient_checkpointing_enable()

# Apply the LoRA configuration to create a PEFT (Parameter-Efficient Fine-Tuning) model
peft_model = get_peft_model(original_model, config)
```

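The model is prepared for QLoRA training with PEFT’s prepare_model_for_kbit_training() function, which freezes the base model’s weights, upcasts the remaining non-quantized parameters (such as layer norms) to float32 for numerical stability, and by default enables gradient checkpointing.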

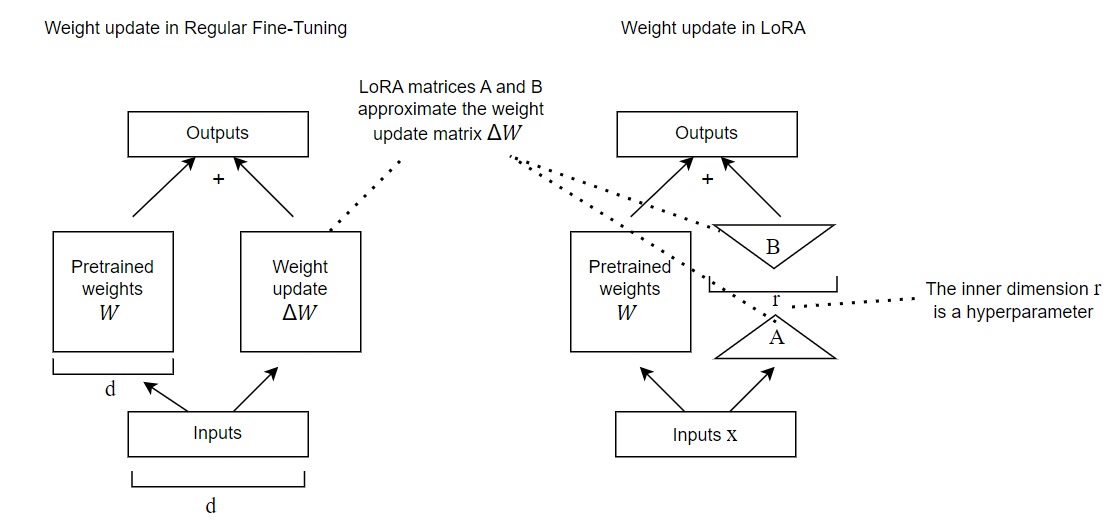

In LoRA, during backpropagation, a weight matrix ? is updated by Δ? to minimize the loss function:

?updated = ? +Δ?

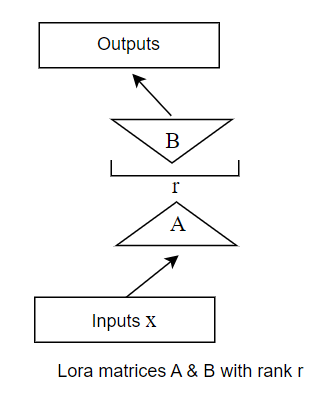

The LoRA method approximates Δ? with two small matrices ? and ?:

?updated = ? + ?⋅?

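After LoRA fine-tuning, the original LLM’s weights are unchanged, and the learned matrices form a small “LoRA adapter”. At inference time, the adapter is applied alongside the original weights (or merged into them), so each task only needs its small adapter to be stored rather than a full copy of the model.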

Regular Finetuning (left) & LoRA Finetuning (right)
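The update rule can be checked numerically. This sketch, in plain Python with tiny random matrices, runs the two LoRA paths separately (the frozen W x plus the adapter (alpha / r) * B (A x), using the alpha / r scaling described under the hyperparameters below) and then merges the adapter into W. Following the LoRA paper, A starts random and B starts at zero, so the adapter initially leaves the output unchanged; the “trained” values of B are made up.

```python
import random

def matvec(M, v):
    """Matrix (list of rows) times vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def matmul(X, Y):
    """Matrix product X @ Y for matrices stored as lists of rows."""
    columns = list(zip(*Y))
    return [[sum(a * b for a, b in zip(row, col)) for col in columns] for row in X]

def lora_forward(W, A, B, x, alpha, r):
    """Frozen path W x plus the scaled adapter path (alpha / r) * B (A x)."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + (alpha / r) * u for b, u in zip(base, update)]

def merge_lora(W, A, B, alpha, r):
    """Fold the adapter into the weights: W' = W + (alpha / r) * B A."""
    BA = matmul(B, A)
    return [[w + (alpha / r) * u for w, u in zip(w_row, u_row)] for w_row, u_row in zip(W, BA)]

random.seed(0)
d, k, r, alpha = 4, 3, 2, 4  # tiny sizes for illustration
W = [[random.uniform(-1, 1) for _ in range(k)] for _ in range(d)]     # frozen, d x k
A = [[random.gauss(0.0, 0.02) for _ in range(k)] for _ in range(r)]  # trainable, r x k, random init
B = [[0.0] * r for _ in range(d)]                                    # trainable, d x r, zero init
x = [0.5, -1.0, 2.0]

print("initial output equals base output:", lora_forward(W, A, B, x, alpha, r) == matvec(W, x))

# Made-up values standing in for a trained B
B = [[0.1, -0.2], [0.0, 0.3], [0.05, 0.05], [-0.1, 0.2]]
merged = merge_lora(W, A, B, alpha, r)
print("adapter path:", lora_forward(W, A, B, x, alpha, r))
print("merged path: ", matvec(merged, x))
```

Merging removes the extra adapter computation at inference time (PEFT exposes this as merge_and_unload()), while keeping the adapter separate lets several task-specific adapters share one copy of the base model.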

Key Hyperparameters:

- Rank (r): Determines the number of trainable parameters during training. A smaller ‘r’ leads to fewer parameters, faster training, and lower computational requirements. A higher ‘r’ increases expressivity but requires more computation.

- Lora_alpha: A scaling factor that controls how strongly the learned update influences the original model weights. LoRA keeps the original weights frozen and scales the low-rank product $BA$ before adding it:

  $$W' = W + \frac{\alpha}{r}(BA)$$

  where:

  - $W$: the frozen pre-trained weight matrix, with dimensions $d \times k$

  - $B$: dimensions $d \times r$

  - $A$: dimensions $r \times k$

  - Scaling factor ($\alpha / r$, i.e. lora_alpha divided by the rank): Adjusts the impact on the original weights. A smaller factor keeps the model closer to its pre-trained state, while a larger factor increases the impact of the low-rank adaptation matrices. With r = 32 and lora_alpha = 32, as configured above, the factor is 1.

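- Target_modules: Specifies the modules within the model where LoRA is applied; here, GPT-2’s attention projections attn.c_attn (combined query, key, and value) and attn.c_proj (attention output).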

- Lora_dropout: A regularization technique to avoid overfitting. Dropout is applied to the input of the LoRA branch, so a higher rate randomly zeroes more of those activations in each training iteration. Too little dropout can make the model more prone to memorizing the training data, reducing its ability to generalize to unseen data.
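The effect of r and lora_alpha can be made concrete with a little arithmetic. For a weight matrix W of size d x k, the adapter adds r(d + k) trainable parameters, and the update is scaled by lora_alpha / r. The sketch below uses GPT-2 small’s attn.c_attn layer (768 inputs, 2304 outputs for the combined query, key, and value projections) as the example matrix and keeps lora_alpha = 32 from the configuration above.

```python
def lora_extra_params(d, k, r):
    """Trainable parameters LoRA adds for one d x k weight: B is d x r, A is r x k."""
    return r * (d + k)

def lora_scaling(lora_alpha, r):
    """Factor applied to B A before it is added to W."""
    return lora_alpha / r

d, k = 2304, 768   # GPT-2 small attn.c_attn: 768 inputs -> 2304 outputs (query, key, value)
lora_alpha = 32    # value from the LoraConfig used in this walkthrough
full = d * k       # frozen weights in this matrix

for r in (8, 16, 32, 64):
    extra = lora_extra_params(d, k, r)
    print(f"r={r:>2}: {extra:>7,} trainable params "
          f"({100 * extra / full:.1f}% of the matrix), scaling = {lora_scaling(lora_alpha, r)}")
```

Because the scaling is lora_alpha / r, raising r while keeping lora_alpha fixed shrinks the scale of the update; PEFT’s LoraConfig also offers a rank-stabilized option (use_rslora) that scales by lora_alpha / sqrt(r) instead.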

```python
def print_number_of_trainable_model_parameters(model):
    trainable_model_params = 0
    all_model_params = 0
    for _, param in model.named_parameters():
        num_params = param.numel()
        # bitsandbytes packs two 4-bit values into each uint8 element, so numel() reports
        # half the logical count for 4-bit weights (assumes the default uint8 quant storage)
        if param.__class__.__name__ == "Params4bit":
            num_params *= 2
        # Add the number of parameters in the current tensor to the total parameter count
        all_model_params += num_params
        # If the parameter requires gradients (i.e., it's trainable), add it to the trainable count
        if param.requires_grad:
            trainable_model_params += num_params
    return (
        f"\ntrainable model parameters: {trainable_model_params}"
        f"\nall model parameters: {all_model_params}"
        f"\npercentage of trainable model parameters: {100 * trainable_model_params / all_model_params:.2f}%"
    )

print(f"PEFT model parameters to be updated:\n{print_number_of_trainable_model_parameters(peft_model)}\n")
```

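Once everything is set up and PEFT is prepared, the print_number_of_trainable_model_parameters() helper above reports how many of the model’s parameters are trainable. PEFT models also provide a built-in peft_model.print_trainable_parameters() method that prints the same information.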
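As a sanity check on the reported numbers, the expected counts can be worked out from GPT-2 small’s configuration (50,257-token vocabulary, 1,024 positions, 768-dimensional embeddings, 12 blocks). This sketch assumes the output layer shares weights with the token embeddings (as in GPT-2), bias="none", and LoRA with r = 32 on attn.c_attn and attn.c_proj in every block, matching the configuration above; under those assumptions roughly 1.4% of the parameters are trainable.

```python
def gpt2_param_count(vocab_size=50257, n_positions=1024, n_embd=768, n_layer=12):
    """Parameters of a GPT-2 model with the LM head tied to the token embeddings."""
    embeddings = vocab_size * n_embd + n_positions * n_embd                      # wte + wpe
    layer_norm = 2 * n_embd                                                      # weight + bias
    attention = (n_embd * 3 * n_embd + 3 * n_embd) + (n_embd * n_embd + n_embd)  # c_attn + c_proj
    mlp = (n_embd * 4 * n_embd + 4 * n_embd) + (4 * n_embd * n_embd + n_embd)    # c_fc + c_proj
    block = 2 * layer_norm + attention + mlp                                     # ln_1, ln_2, attention, MLP
    return embeddings + n_layer * block + layer_norm                             # + final ln_f

def lora_param_count(r=32, n_embd=768, n_layer=12):
    """LoRA parameters for attn.c_attn (n_embd -> 3 * n_embd) and attn.c_proj (n_embd -> n_embd)."""
    c_attn = r * (n_embd + 3 * n_embd)
    c_proj = r * (n_embd + n_embd)
    return n_layer * (c_attn + c_proj)

base_params = gpt2_param_count()
trainable = lora_param_count()
total = base_params + trainable
print(f"trainable model parameters: {trainable:,}")
print(f"all model parameters: {total:,}")
print(f"percentage of trainable model parameters: {100 * trainable / total:.2f}%")
```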

- Train PEFT Adapter: Execute the fine-tuning process using the prepared SQuAD dataset, updating only the PEFT adapter parameters while keeping the base GPT-2 model frozen, allowing for efficient and focused learning of the question-answering task.

```python
import transformers
from transformers import TrainingArguments

# Output directory for saving model checkpoints and other outputs
output_dir = "./peft-squad-qa-training"

# Define the training arguments for the Trainer
peft_training_args = TrainingArguments(
    output_dir=output_dir,
    per_device_train_batch_size=16,  # Batch size for training on each device (e.g., GPU)
    per_device_eval_batch_size=4,
    max_steps=10000,                 # Total number of training steps to perform
    learning_rate=2e-4,              # Initial learning rate for the optimizer
    optim="paged_adamw_8bit",        # Paged 8-bit AdamW optimizer (bitsandbytes) to reduce memory usage
    save_strategy="steps",           # Save checkpoints on a step schedule
    save_steps=1000,                 # Save a checkpoint every 1000 steps
    eval_strategy="steps",           # Evaluate on a step schedule (named evaluation_strategy in older transformers releases)
    eval_steps=1000,                 # Evaluate every 1000 steps
    do_eval=True,                    # Perform evaluation during training
    gradient_checkpointing=True,     # Enable gradient checkpointing to reduce memory usage
    overwrite_output_dir=True,
    gradient_accumulation_steps=2,   # Accumulate gradients over 2 steps before each optimizer update
    eval_accumulation_steps=1,       # Move evaluation outputs to the CPU after every step
    fp16=True,                       # Mixed-precision training
)

# Disable the key/value cache: it is not needed for training and conflicts with gradient checkpointing
peft_model.config.use_cache = False

# compute_metrics and preprocess_logits_for_metrics are defined in the evaluation step below;
# run that cell before this one.
peft_trainer = transformers.Trainer(
    model=peft_model,
    train_dataset=tokenized_train_dataset,
    eval_dataset=tokenized_test_dataset,
    args=peft_training_args,
    data_collator=transformers.DataCollatorForLanguageModeling(tokenizer, mlm=False),
    compute_metrics=compute_metrics,
    preprocess_logits_for_metrics=preprocess_logits_for_metrics,
)

print("Starting training...")
peft_trainer.train()
print("Training completed...")
```

- Evaluate the Model using the F1 Metric: Use an F1 score to assess the fine-tuned model’s performance. The Trainer calls compute_metrics at every evaluation step; it scores the model’s next-token predictions on the validation set against the reference tokens. With micro averaging over token ids, this token-level F1 is equivalent to next-token accuracy on the unmasked positions.

```python
import evaluate

# F1 evaluation metric (wraps scikit-learn's f1_score)
metric = evaluate.load("f1")

def preprocess_logits_for_metrics(logits, labels):
    # Runs on each evaluation batch: keep only the predicted token ids instead of full-vocabulary logits
    return logits.argmax(dim=-1)

# Compute evaluation metrics from the accumulated predictions and labels (NumPy arrays)
def compute_metrics(eval_pred):
    predictions, labels = eval_pred
    if predictions.ndim == 3:  # raw logits were passed through
        predictions = predictions.argmax(axis=-1)
    # Causal LM: the prediction at position i is for the token at position i + 1
    predictions = predictions[:, :-1].reshape(-1)
    labels = labels[:, 1:].reshape(-1)
    # Ignore padding positions, which the data collator labels with -100
    mask = labels != -100
    return metric.compute(predictions=predictions[mask], references=labels[mask], average="micro")
```
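The token-level F1 above measures next-token predictions during training. For SQuAD, F1 is usually computed on answer strings instead: the generated answer and each reference answer are normalized (lowercased, with punctuation and the articles a/an/the removed) and compared as bags of words, keeping the best score over the references. The sketch below follows the logic of the official SQuAD v1.1 evaluation script; producing the predictions (generating text after the final <|assistant|> marker) is not shown. The Hugging Face evaluate library also provides a "squad" metric that reports exact match and F1.

```python
import re
import string
from collections import Counter

PUNCTUATION = set(string.punctuation)

def normalize_answer(text):
    """Lowercase, strip punctuation and articles, and collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in PUNCTUATION)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())

def squad_f1(prediction, reference):
    """Token-overlap F1 between one predicted answer and one reference answer."""
    pred_tokens = normalize_answer(prediction).split()
    ref_tokens = normalize_answer(reference).split()
    common = Counter(pred_tokens) & Counter(ref_tokens)
    num_same = sum(common.values())
    if num_same == 0:
        return 0.0
    precision = num_same / len(pred_tokens)
    recall = num_same / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)

def best_f1(prediction, references):
    """SQuAD scores a prediction against its best-matching reference answer."""
    return max(squad_f1(prediction, ref) for ref in references)

print(best_f1("Bernadette Soubirous", ["Saint Bernadette Soubirous"]))
```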

Conclusion

Fine-tuning GPT-2 with the SQuAD dataset using PEFT methods like QLoRA is an efficient way to build specialized language models. It leverages the model’s pre-trained knowledge while adapting it for tasks like question-answering with fewer resources. With proper data preparation and model configuration, developers can create high-performance, task-specific models.

PEFT’s flexibility enables the development of tools like chatbots, virtual tutors, or medical assistants, even on consumer hardware. These techniques open new opportunities across industries—from personalized learning in education to clinical support in healthcare and efficient customer service solutions.

Experiment with these methods using your own datasets and unlock new possibilities. Share your results in the comments to inspire the AI community!

About the Author

Karthika Rajan Nair completed a Data Science internship with the Machine Learning team at Founding Minds, where she collaborated closely with the team and authored this blog as a result of her work. With a strong foundation in Python, machine learning frameworks, and cloud technologies, Karthika is passionate about advancing her skills to drive innovation and address complex data challenges. She is pursuing a Master’s degree in Data Science at the University at Buffalo, New York.