Ever stared at your perfectly tuned machine learning model and thought, “Now what?” You’ve conquered the training data, mastered the hyperparameters, and your accuracy scores are through the roof. But there’s that nagging question keeping you up at night: How do you turn this mathematical marvel into something that actually delivers value in the real world?

Welcome to the thrilling and sometimes terrifying world of ML deployment. It’s where brilliant models either soar to new heights or crash and burn. And let’s be honest – we’ve all heard the horror stories. Remember that recommendation engine that worked flawlessly in testing but crumbled under Black Friday traffic? Or that chatbot that went from helpful assistant to public relations nightmare in just 48 hours?

But here’s the million-dollar question: What separates successful ML deployments from expensive disasters? Is it just technical prowess, or is there something more to this puzzle?

The truth? ML deployment is an art form disguised as a technical challenge. It’s where data science meets the harsh realities of production environments, where elegant algorithms collide with messy real-world data, and where your model’s performance isn’t just about accuracy scores anymore – it’s about survival in the wild.

Think your model is ready for prime time? Ask yourself:

- Can it handle unexpected input without breaking a sweat?

- Will it scale when your user base explodes overnight?

- How quickly can you update it when the real world inevitably changes?

In this guide, we’re diving deep into the strategies that separate successful ML deployments from cautionary tales. Whether you’re a solo data scientist ready to launch your first model or a team leader plotting a company-wide ML revolution, you’re about to discover the battle-tested approaches that actually work in the trenches.

Ready to transform your model from a local hero to a production warrior? Let’s begin this journey together.

Key stages of ML deployment

Imagine you’ve trained a machine learning model to recommend personalized playlists for a music streaming service like Spotify. The deployment stage is like taking this model from your local computer and setting it up to work for millions of users worldwide. You need to optimize the model so it can handle the huge influx of user data, set up powerful servers to run it 24/7, and ensure it can create playlists in milliseconds for users across different devices and countries. It’s about transforming your brilliant idea into a robust feature that millions can enjoy without a hitch. In short, the goal of deployment is to make the model available to end users or other systems in a way that is scalable, reliable, and efficient.

ML deployment consists of:

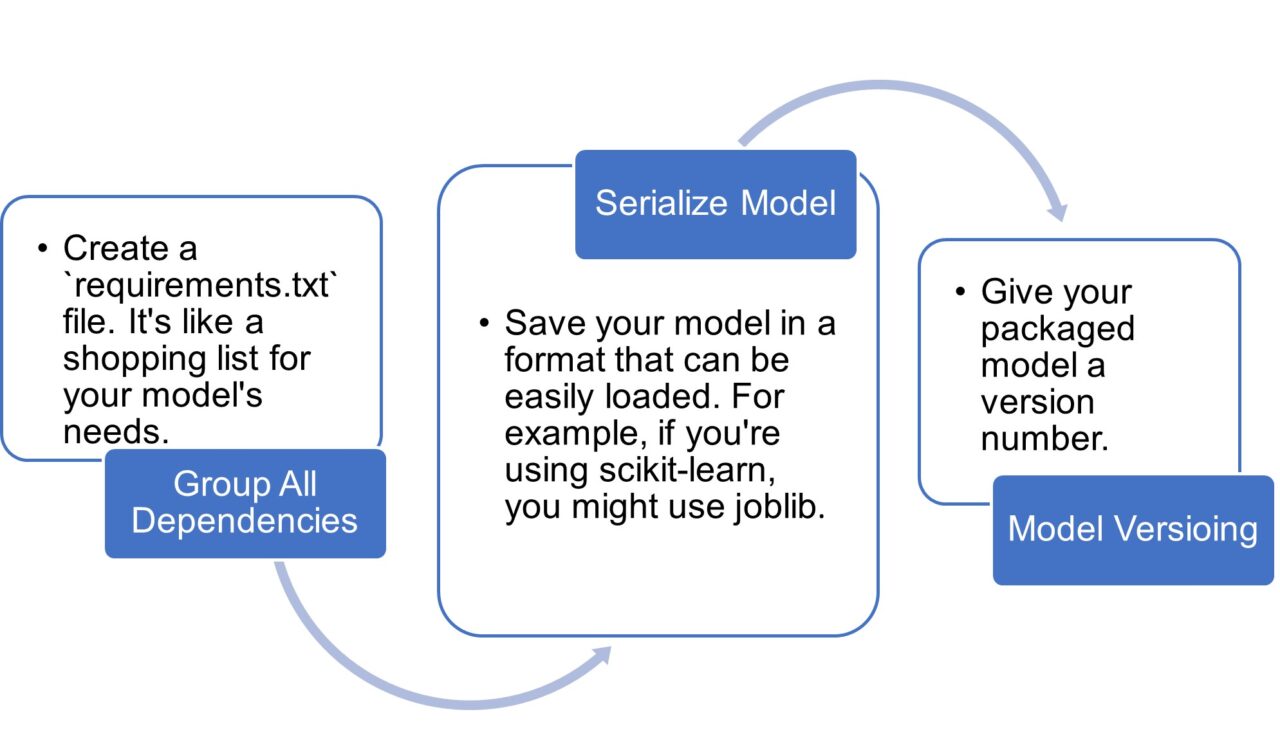

Wrapping up dependencies: Model packaging

Packaging is all about making sure your model has everything it needs to run smoothly in its new home.
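To make that a little more concrete, here is a minimal sketch of packaging using only the Python standard library. The `package_model` and `load_model` helpers, the `manifest.json` layout, and the `examplelib==1.0.0` requirement are all hypothetical names chosen for illustration; real projects often use a dedicated model format instead of `pickle`.

```python
import json
import pickle
import platform
import tempfile
from pathlib import Path


def package_model(model, out_dir, requirements):
    """Write the model plus a manifest describing what it needs to run."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    # Serialize the model object itself.
    (out / "model.pkl").write_bytes(pickle.dumps(model))
    # Record the environment so the serving side can recreate it.
    manifest = {
        "python_version": platform.python_version(),
        "requirements": sorted(requirements),
    }
    (out / "manifest.json").write_text(json.dumps(manifest, indent=2))
    return manifest


def load_model(out_dir):
    """Load the model back, as the serving environment would."""
    return pickle.loads((Path(out_dir) / "model.pkl").read_bytes())


# Stand-in "model": a dict of weights (a real model object would go here).
toy_model = {"weights": [0.2, 0.8], "bias": 0.1}
package_dir = tempfile.mkdtemp()
manifest = package_model(toy_model, package_dir, ["examplelib==1.0.0"])
restored = load_model(package_dir)
```

The point of the manifest is that the model file alone is not enough: whoever loads it also needs to know which interpreter and library versions it was built against.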

Setting up the Ground: Model Serving

Model serving starts with choosing the right infrastructure for your model, much like choosing the right vehicle for the terrain. Successful model serving comes down to understanding your specific needs: performance, scalability, security, and cost.

Imagine your ML model as a globe-trotting superstar, ready to perform on any stage! In the cloud, it’s living the high life, scaling to meet adoring fans (users) on-demand and basking in the glow of managed services. On-premises, it’s more of a hometown hero, enjoying full creative control and keeping its secrets close. At the edge, our star goes incognito, slipping into tiny devices to deliver real-time performances without a Wi-Fi connection. In a hybrid setup, your model gets the best of both worlds, seamlessly switching between massive cloud concerts and intimate local gigs. Whether your ML model prefers the limitless skies of the cloud, the cozy comfort of on-premises servers, the thrill of edge devices, or the versatility of a hybrid approach, there’s a perfect stage for every performance. The key is picking the right venue to let your model’s talents shine!

Talking to an application requires a common language, a way for the two sides to communicate. And when it comes to this, RESTful APIs have long reigned supreme. Why? Their simplicity and widespread adoption make them a natural fit, especially for standard CRUD (create, read, update, delete) operations and web services. When HTTP-based communication just feels right, RESTful APIs are the go-to choice. But they’re not the only kids on the block. Challengers like WebSockets, GraphQL, and gRPC, along with message brokers such as RabbitMQ and Apache Kafka, are vying for your attention too.

So how do you decide? It often comes down to a careful evaluation of your specific needs and constraints:

- Do you have demanding performance requirements?

- How about your team’s existing expertise?

- What does your current infrastructure look like?

- Are scalability needs a major factor?

- And don’t forget about client platform compatibility!

Weigh all these considerations, and the right communication approach for your application will emerge. It may be the reliable workhorse of RESTful APIs. Or perhaps one of the rising stars will capture your imagination. The choice is yours to make – just be sure to choose wisely!
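If you do land on REST, a prediction endpoint can be surprisingly small. The sketch below uses only Python's built-in `http.server`; the `/predict` path, the `features` field, and the toy `predict` function are assumptions made up for this example, and a production service would more likely sit behind a proper web framework.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features):
    """Hypothetical stand-in model: the sum of the input features."""
    return sum(features)


def handle_predict(raw_body):
    """Turn a JSON request body into (HTTP status, JSON response body)."""
    try:
        payload = json.loads(raw_body)
        features = [float(x) for x in payload["features"]]
    except (ValueError, KeyError, TypeError):
        # Malformed input gets a client error, not a crash.
        return 400, json.dumps({"error": "expected a JSON object with a 'features' list"})
    return 200, json.dumps({"prediction": predict(features)})


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, body = handle_predict(self.rfile.read(length))
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.end_headers()
        self.wfile.write(body.encode("utf-8"))


def serve(port=8000):
    """Start the server; call this explicitly, e.g. serve(8000)."""
    HTTPServer(("127.0.0.1", port), PredictHandler).serve_forever()
```

Keeping `handle_predict` separate from the HTTP plumbing means the same logic could later be reused behind gRPC or a message queue without rewriting the model-facing code.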

Containerize the model

Think of containers as magical lunch boxes that pack not just your model, but its entire environment: dependencies, libraries, and even specific Python versions, all sealed in a perfect, portable package. With tools like Docker, your model becomes a self-sufficient traveler, ready to run consistently whether it’s on your laptop, a powerful cloud server, or that quirky edge device in the field. No more dependency nightmares, no more version conflicts, and definitely no more late-night debugging calls. But here’s the real kicker – containers aren’t just about portability. They’re your first line of defense in scaling operations. Need to handle sudden spikes in prediction requests? Spin up more containers. Want to test a new model version without risking the current one? Containers make that a breeze. It’s like giving your ML model a passport to travel anywhere while speaking the same language every time.

Model Deployment Strategies

Just as a master chef doesn’t cook every dish the same way, not every ML model should be deployed using the same strategy. The approach that worked perfectly for that low-risk content recommendation system might spell disaster for a critical fraud detection model. So, what’s an ML engineer to do?

Ever wondered how AI models serve predictions? Just like a restaurant can serve meals in different ways – from fast food to fine dining – machine learning models have various methods to deliver their predictions to users.

Real-time inference: Like a made-to-order dish, your model serves instant predictions. Imagine your phone unlocking with face recognition: that’s real-time inference in action. Quick, direct, and one prediction at a time.

Batch inference: Picture a meal-prep service delivering weekly packages. Your model processes predictions in bulk, which is perfect for tasks like calculating customer churn scores for thousands of users over the weekend.

Streaming inference: Think of a restaurant’s continuous cooking line during peak hours. Your model processes data as it flows in, which is ideal for catching fraudulent transactions as they happen.
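The three serving styles above differ mostly in how requests reach the model, not in the model itself. Here is a rough sketch of that idea; the threshold-based `predict` function and the sample values are invented for illustration.

```python
def predict(amount):
    """Hypothetical stand-in model: flag amounts above a threshold."""
    return amount > 100


def realtime_inference(amount):
    """One request in, one prediction out."""
    return predict(amount)


def batch_inference(amounts):
    """Score a whole dataset in one scheduled run."""
    return [predict(a) for a in amounts]


def streaming_inference(events):
    """Score events lazily, one by one, as they arrive from an iterator."""
    for amount in events:
        yield amount, predict(amount)


single = realtime_inference(250)
nightly = batch_inference([20, 150, 99])
# A generator stands in for a live event stream here.
flagged = [a for a, is_fraud in streaming_inference(iter([5, 500, 42])) if is_fraud]
```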

Deployment Strategies

Imagine you’ve got your model up and running smoothly, like a reliable food truck serving up daily specials. A few months in, you discover an exciting new ingredient—a fresh feature or model framework upgrade. Now, you’re faced with the challenge of making this update without disrupting service. How do you serve up your model’s new version seamlessly? You’ll need a solid strategy to roll out this update without blocking the line.

Picture running A/B tests between your new recipe and the original. It’s like testing two menu options on a focus group to see if the new dish gets rave reviews. You need to ensure the update performs at least as well, ideally better, against set test cases. And if you want to keep your hungry audience happy, controlled tests under specific conditions are a must.

This is where CI/CD practices come into play, acting as your kitchen’s efficient prep line. These strategies keep your model running reliably, even with ongoing upgrades and testing. Let’s dig into a few essential strategies to ensure your model’s success.

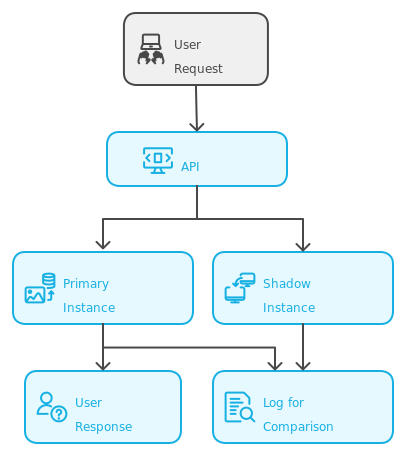

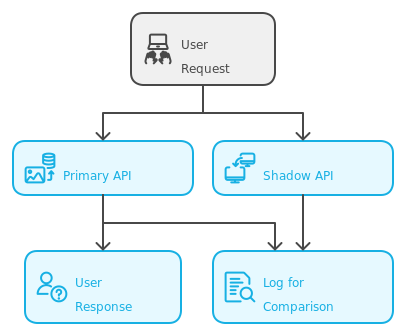

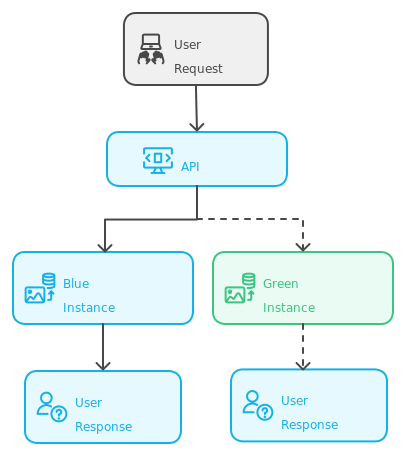

Imagine having a secret twin of your live application – one that can experiment freely without anyone knowing. That’s exactly what shadow deployment offers! It’s like having a parallel universe where your new code can stretch its legs and prove itself using real-world data, all while your users continue to interact with the tried-and-true production version.

Fig: Shadow deployment using a single API and two independent APIs.

Think of it as a rehearsal stage that exists right next to the main performance. Your application’s understudy (the shadow version) acts out every scene in perfect sync with the star (your production system), but the audience never sees these practice runs. Every click, every transaction, and every user interaction is mirrored and processed by both versions, but only the production system’s responses reach the users.

This isn’t just another testing environment – it’s your production environment’s identical twin, experiencing the same real-world chaos, traffic spikes, and user behaviors. Want to know if your new feature can handle Black Friday traffic? Curious how your optimized algorithm performs under actual user loads? Now you can know for sure, without betting your user experience on it.
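A bare-bones version of that mirroring might look like the sketch below. The function names and the in-memory `shadow_log` list are assumptions for illustration; in a real system the shadow call would typically run asynchronously so it cannot slow down the user-facing response.

```python
def shadow_serve(request, production_model, shadow_model, shadow_log):
    """Return the production answer; record the shadow answer on the side."""
    prod_output = production_model(request)
    entry = {"request": request, "production": prod_output}
    try:
        entry["shadow"] = shadow_model(request)
    except Exception as exc:
        # A failing shadow must never affect what the user sees.
        entry["shadow_error"] = repr(exc)
    shadow_log.append(entry)
    return prod_output


def agreement_rate(shadow_log):
    """Fraction of logged requests where shadow and production agreed."""
    if not shadow_log:
        return None
    matches = sum(
        1 for e in shadow_log if "shadow" in e and e["shadow"] == e["production"]
    )
    return matches / len(shadow_log)


def stable_model(x):
    return x * 2


def candidate_model(x):
    # Deliberately disagrees on one input so the log has something to show.
    return 7 if x == 3 else x * 2


log = []
responses = [shadow_serve(x, stable_model, candidate_model, log) for x in [1, 2, 3, 4]]
```

Users only ever receive `responses`, which come from the stable model; the log is what you study afterwards to decide whether the candidate is ready.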

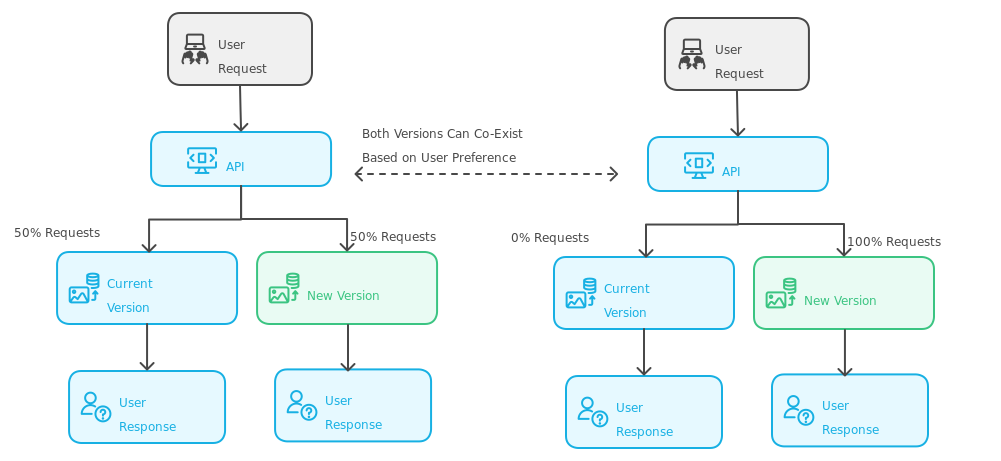

Picture a grand theater with two identical stages side by side. While the current show (let’s call it “blue”) entertains the audience, the crew is busy setting up tomorrow’s performance on the other stage (“green”). When everything’s perfect on the green stage, they simply redirect the spotlight. The audience seamlessly transitions to the new show without even realizing the switch. That’s the magic of blue-green deployment!

Think of it as having a cosmic “undo” button for your software releases. Your application lives simultaneously in two parallel universes – the “blue” universe serving your current version, while you prepare the next version in the “green” universe. When you’re confident the green environment is ready for showtime, you simply flip a switch (usually a routing mechanism), and your traffic gracefully flows to the new version.

The beauty? If your new version starts throwing unexpected tantrums (bugs, performance issues, or just plain chaos), you can instantly flip back to the blue environment – like having a time machine that takes you back to when everything worked perfectly! It’s the software equivalent of having a backup parachute – you hope you won’t need it, but you’re incredibly glad it’s there if you do.

This approach is particularly brilliant because:

- Your users never experience downtime – they’re simply redirected from one healthy environment to another

- Testing becomes more reliable because you’re validating changes in an identical production environment

- The stress level of your operations team drops dramatically knowing they can instantly roll back if needed

- You can take your time verifying the new environment before making the switch

It’s like having a fancy hotel where you prepare a new room before asking the guest to move, rather than trying to renovate their room while they’re still in it. And if they don’t like the new room? No problem – their old room is still exactly as they left it!
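The "flip a switch" part is essentially a pointer that says which environment is live. The sketch below models that with a hypothetical `BlueGreenRouter` class; in practice the switch usually lives in a load balancer or DNS layer rather than in application code.

```python
class BlueGreenRouter:
    """Routes all traffic to one of two environments and can swap them."""

    def __init__(self, blue, green):
        self.environments = {"blue": blue, "green": green}
        self.live = "blue"

    @property
    def idle(self):
        return "green" if self.live == "blue" else "blue"

    def handle(self, request):
        return self.environments[self.live](request)

    def cut_over(self, smoke_requests):
        """Switch to the idle environment only if it survives smoke tests."""
        candidate = self.environments[self.idle]
        for request in smoke_requests:
            try:
                candidate(request)
            except Exception:
                return False  # stay on the current environment
        self.live = self.idle
        return True

    def roll_back(self):
        """Flip back; the previous environment was left untouched."""
        self.live = self.idle


def model_v1(x):
    return f"v1:{x}"


def model_v2(x):
    return f"v2:{x}"


router = BlueGreenRouter(blue=model_v1, green=model_v2)
before = router.handle("song")
switched = router.cut_over(smoke_requests=["ping"])
after = router.handle("song")
router.roll_back()
restored = router.handle("song")
```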

Imagine you’re hosting a cooking showdown, where two chefs prepare the same dish, but with their unique twist. That’s what A/B deployment is for your application—a live, head-to-head competition between two versions to see which one your users prefer. It’s like having two secret versions of your app running in parallel, each with a slight difference, ready to show you what really wins with your audience.

Picture this: one group of users gets served the tried-and-true recipe (the “A” version), while another group gets a taste of something new (the “B” version). Every click, every scroll, and every purchase helps decide the better experience, showing which version pulls ahead in real-world scenarios. Maybe the B version has a faster checkout flow or a fresh recommendation algorithm, and with every user interaction, you’re gathering evidence on which one delivers more value.

A/B deployment lets you experiment without a full commitment. It’s like performing a survey in disguise—your users interact naturally, not even aware they’re part of the experiment. By comparing conversion rates, engagement metrics, or user satisfaction scores, you get a clear view of which version comes out on top. Ready to roll out a new feature? A/B deployment ensures it’s proven with data before it ever becomes the main course.
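One common way to split users, sketched below, is to hash each user ID into a bucket so the same person always sees the same version. The experiment name, the 50/50 split, and the helper names are assumptions for this example, not part of any particular A/B testing product.

```python
import hashlib


def assign_variant(user_id, experiment="checkout-test", b_share=0.5):
    """Deterministically map a user to 'A' or 'B' for a given experiment."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # First 8 hex digits give an integer in [0, 2**32); scale it to [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "B" if bucket < b_share else "A"


def conversion_rate(outcomes):
    """Share of sessions that converted (outcomes are True/False)."""
    return sum(outcomes) / len(outcomes) if outcomes else 0.0


# Example: split 10,000 hypothetical users and check the split is near 50/50.
assignments = [assign_variant(f"user-{i}") for i in range(10_000)]
share_b = assignments.count("B") / len(assignments)
```

Including the experiment name in the hash keeps assignments independent across experiments, so a user who lands in "B" for one test is not automatically in "B" for the next.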

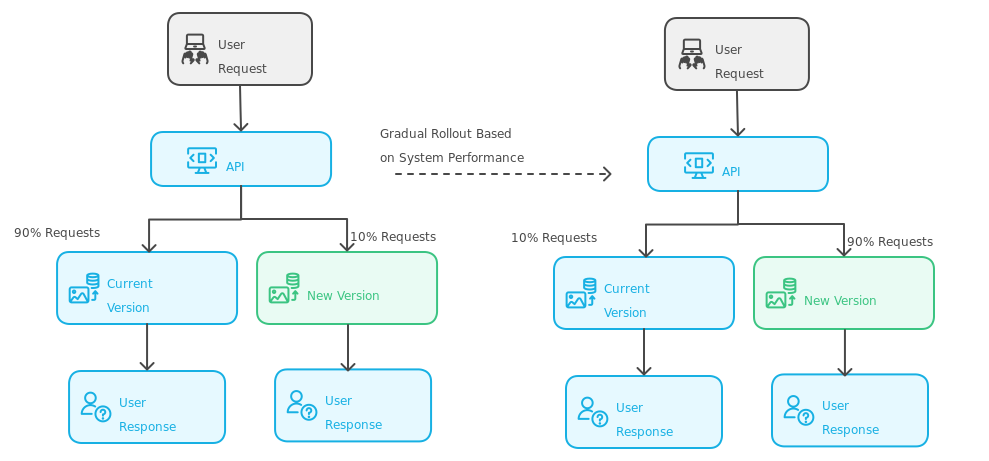

Remember those old coal mines where miners would bring canaries as their tiny, feathered life-savers? If dangerous gases were present, the canary would show signs of distress before the levels became fatal to humans. That’s exactly the inspiration behind canary deployment – except instead of birds, we’re using a small group of users as our early warning system!

Think of it as being the world’s most cautious party host. Instead of opening all the doors and letting everyone rush in at once, you first invite a select group of VIP guests (your “canaries”) to test the waters. These brave pioneers help you spot if the punch is too strong or if the dance floor is too slippery before the main crowd arrives. If your VIPs are having a great time, you gradually let in more guests. But if they start showing signs of distress – whoops! – you can quickly shut things down before it becomes a full-blown party disaster.

What makes canary deployment particularly brilliant:

- You start with a tiny slice of traffic (maybe 1-10% of users) to test your new version.

- Like a skilled tightrope walker, you can inch forward or quickly step back based on real-world feedback.

- Your monitoring systems are laser-focused on this small group, making issues easier to spot and fix.

- Risk is naturally contained – if something goes wrong, you’ve only impacted a small portion of users.

The real beauty lies in its gradual nature. Like dipping your toe in the pool before jumping in, you’re testing the waters with minimal risk. Maybe your new feature works perfectly in testing but crumbles under real-world conditions – better to discover that with 1% of your users than with everyone at once! And if things do go south, you can pull the plug faster than a DJ can change a bad song.
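Here is one way the "inch forward or step back" logic could be expressed. The traffic steps (1%, 5%, 25%, 100%) and the 2% error threshold are made-up numbers for illustration; pick values that match your own risk tolerance.

```python
import random


class CanaryRollout:
    """Sends a growing share of traffic to the canary, or none after rollback."""

    def __init__(self, steps=(0.01, 0.05, 0.25, 1.0), max_error_rate=0.02, rng=None):
        self.steps = steps
        self.stage = 0
        self.max_error_rate = max_error_rate
        self.rolled_back = False
        self.rng = rng or random.Random()

    @property
    def canary_share(self):
        return 0.0 if self.rolled_back else self.steps[self.stage]

    def route(self):
        """Pick which version serves the next request."""
        return "canary" if self.rng.random() < self.canary_share else "stable"

    def evaluate(self, canary_errors, canary_requests):
        """Promote to the next step if healthy; roll back if not."""
        if self.rolled_back or canary_requests == 0:
            return self.canary_share  # nothing to decide
        if canary_errors / canary_requests > self.max_error_rate:
            self.rolled_back = True
        elif self.stage < len(self.steps) - 1:
            self.stage += 1
        return self.canary_share


rollout = CanaryRollout()
start_share = rollout.canary_share
after_healthy = rollout.evaluate(canary_errors=0, canary_requests=100)
after_unhealthy = rollout.evaluate(canary_errors=10, canary_requests=100)
```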

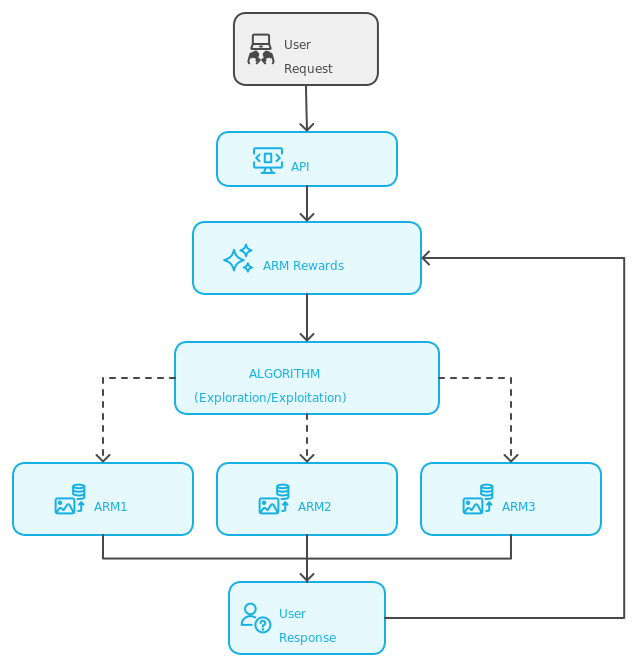

Ever wondered what would happen if Darwin’s theory of evolution met modern software deployment? Meet Multi-Armed Bandit deployment – the savvy casino player of the software world that’s revolutionizing how we roll out features!

Picture yourself as a master chef opening a new restaurant. Instead of picking one menu and sticking to it, you’re serving different versions of your signature dish simultaneously. But here’s the twist – you have an intelligent waiter who keeps track of which version gets the most “oohs” and “aahs,” automatically recommending that version to more customers. Yet, they’re smart enough to occasionally suggest other versions too, just in case there’s an undiscovered gem!

This isn’t your grandmother’s A/B deployment – it’s A/B deployment with a PhD in statistics and a minor in psychology! While traditional deployment methods are like playing chess with predetermined moves, MAB deployment is like having a grandmaster who adapts their strategy with every move. It’s constantly learning, adjusting, and optimizing in real-time.

What makes this approach particularly fascinating:

- Instead of waiting weeks to declare a winner, it starts optimizing from the very first user interaction.

- Your deployment becomes a self-learning system, like a robot that gets smarter with every conversation.

- You’re not just finding what works best now, but continuously adapting to changing user preferences.

- Risk is automatically managed – poorly performing versions get less traffic without any manual intervention.

Think of it as having a wise investment advisor who doesn’t just split your portfolio 50-50 between stocks and bonds, but dynamically adjusts your investments based on market performance while still keeping an eye out for new opportunities. It’s the perfect blend of “show me the money” and “let’s try something new!”

The real magic happens in the balance: while the system naturally favors what’s working (like giving your best-selling dish prime menu placement), it never completely stops experimenting (like keeping those daily specials interesting). It’s like having a DJ who knows exactly when to play the crowd favorites and when to introduce that hot new track!
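The "intelligent waiter" can be approximated with epsilon-greedy, one of the simplest bandit algorithms (others, such as Thompson sampling or UCB, exist and are not shown here). In this sketch the variant names, the 10% exploration rate, and the simulated conversion rates are all invented for illustration.

```python
import random


class EpsilonGreedyRouter:
    """Mostly serves the best-performing variant, but keeps exploring."""

    def __init__(self, variants, epsilon=0.1, rng=None):
        self.epsilon = epsilon
        self.rng = rng or random.Random()
        self.pulls = {v: 0 for v in variants}
        self.rewards = {v: 0.0 for v in variants}

    def mean_reward(self, variant):
        pulls = self.pulls[variant]
        return self.rewards[variant] / pulls if pulls else 0.0

    def choose(self):
        if self.rng.random() < self.epsilon:
            # Explore: occasionally try any variant at random.
            return self.rng.choice(list(self.pulls))
        # Exploit: otherwise serve the variant with the best average so far.
        return max(self.pulls, key=self.mean_reward)

    def record(self, variant, reward):
        self.pulls[variant] += 1
        self.rewards[variant] += reward


# Simulated traffic with made-up "true" success rates per variant.
rng = random.Random(42)
router = EpsilonGreedyRouter(["current", "candidate"], epsilon=0.1, rng=rng)
true_rates = {"current": 0.2, "candidate": 0.6}
for _ in range(5000):
    variant = router.choose()
    reward = 1.0 if rng.random() < true_rates[variant] else 0.0
    router.record(variant, reward)
```

After the simulation, `router.pulls` shows how traffic shifted toward the stronger variant without anyone manually declaring a winner.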

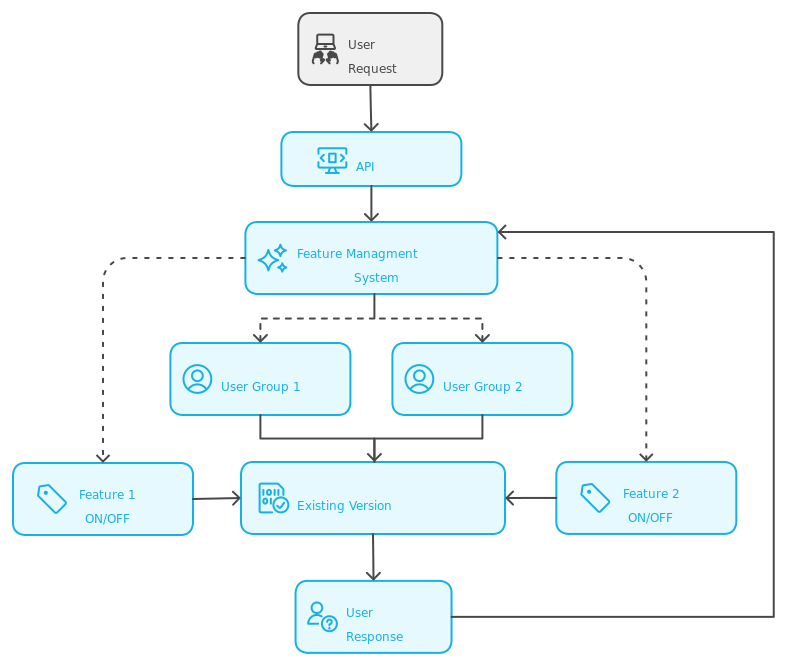

Imagine having a hidden switchboard behind the scenes of your application, where each lever controls a new feature, ready to go live with a single flip. That’s the magic of feature flags—like secret controls that let you roll out new features or updates to select users instantly and without a full deployment.

Picture it like this: your app’s newest features are waiting quietly in the wings, enabled only for specific users or regions at your command. Maybe it’s a new recommendation algorithm or an enhanced checkout experience—only beta testers or a specific customer segment get access. With each flag, you control who gets to experience these updates, all while your stable version continues to serve the rest of your users uninterrupted.

Feature flags let you test, tweak, or turn off features in real-time based on feedback or performance. Facing unexpected issues? No problem—just toggle the flag off, and the feature is instantly hidden without code changes or redeployment. This setup is like having a dimmer switch on innovation: you can roll out new experiences gradually, measure their impact, and give them a smooth, safe launch only when they’re ready to shine.
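In code, a feature flag is often little more than a lookup plus a targeting rule. The sketch below keeps flags in a plain dictionary; the flag name, the `beta` segment, and the 10% rollout are hypothetical, and real setups usually load this configuration from a flag service or config store so it can change without a redeploy.

```python
import hashlib

# Hypothetical flag configuration.
FLAGS = {
    "new_recommender": {
        "enabled": True,               # master kill switch
        "allow_segments": {"beta"},    # segments that always get the feature
        "rollout_percent": 10,         # share of everyone else who gets it
    },
}


def is_enabled(flag_name, user_id, segment, flags=FLAGS):
    """Decide whether this user should see the flagged feature."""
    flag = flags.get(flag_name)
    if flag is None or not flag["enabled"]:
        return False
    if segment in flag["allow_segments"]:
        return True
    # Stable per-user bucket in [0, 100) so users don't flip between versions.
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest[:8], 16) % 100 < flag["rollout_percent"]


def recommend(user_id, segment):
    if is_enabled("new_recommender", user_id, segment):
        return "new-model-playlist"
    return "stable-model-playlist"
```

Setting `enabled` to `False` hides the feature for everyone on the next request, which is the "toggle the flag off" escape hatch described above.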

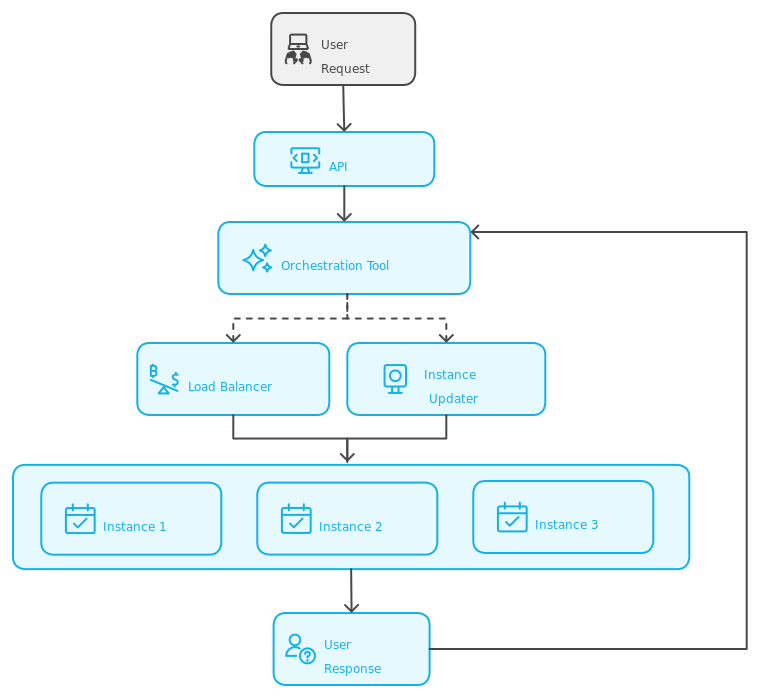

Imagine a smooth train journey where each car is added gradually, ensuring the entire system is in sync before reaching full speed. That’s the essence of rolling deployment—a strategy that lets you update your application piece by piece, minimizing disruption while ensuring stability.

Picture this: instead of flipping the switch for a massive update all at once, you release the new version to a small portion of your servers first. As the initial cars of the train start moving, they deliver the latest features to a select group of users. Monitoring performance closely, you ensure everything is running smoothly before adding more cars to the train, gradually scaling up the rollout.

Rolling deployment is like a series of carefully planned dance steps, where each step is tested and refined before moving on to the next. If any issues arise, you can easily pause the rollout, troubleshoot, and fix problems without affecting the entire user base. This method not only reduces risk but also allows you to gather real-time feedback from early users, helping you make informed decisions about future releases.

With rolling deployment, your application evolves seamlessly, adapting to user needs while maintaining the reliability your audience expects. It’s the perfect blend of innovation and stability, ensuring your software keeps pace with the demands of a dynamic environment.
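As a rough sketch, a rolling update is a loop over servers with a health check between batches. Everything here is simplified for illustration: servers are a dictionary of name to version, `is_healthy` is a caller-supplied check, and reverting the failing batch before pausing is one possible policy among several.

```python
def rolling_update(servers, new_version, is_healthy, batch_size=1):
    """Update servers batch by batch; pause at the first unhealthy batch.

    servers: dict mapping server name -> currently deployed version.
    Returns True if every server was updated, False if the rollout paused.
    """
    names = list(servers)
    for start in range(0, len(names), batch_size):
        batch = names[start:start + batch_size]
        previous = {name: servers[name] for name in batch}
        for name in batch:
            servers[name] = new_version
        if not all(is_healthy(name, servers[name]) for name in batch):
            # Revert only this batch; earlier batches keep the new version.
            servers.update(previous)
            return False
    return True


fleet = {"s1": "v1", "s2": "v1", "s3": "v1", "s4": "v1"}
completed = rolling_update(fleet, "v2", is_healthy=lambda name, version: True)
```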

Monitoring and Optimization

Imagine your deployed ML model as an Olympic athlete. Getting to the games (production) is a massive achievement, but the journey doesn’t end there. Just like athletes need constant monitoring of their vital signs and optimization of their training routines, your model needs careful observation and fine-tuning to maintain its winning performance.

Think of model monitoring as your ML system’s health dashboard. Just as a doctor monitors a patient’s vital signs, you’re keeping an eye on critical metrics: prediction accuracy, response times, resource usage, and data drift patterns. When your model’s “blood pressure” (prediction confidence) starts dropping, or its “heart rate” (latency) becomes irregular, your monitoring system sounds the alarm before these issues impact users.

However, monitoring without optimization is like having a diagnosis without treatment. That’s where the “personal trainer” aspect comes in – continuous optimization. Maybe your model is spending too much time “warming up” (high initialization time), or perhaps it’s “burning out” under heavy load (resource spikes during peak traffic). Through careful optimization – whether it’s model compression for faster inference, batch size tuning for better throughput, or resource allocation adjustments – you’re keeping your model in top competitive shape.

And just as athletes adapt their training to new challenges, your optimization strategy needs to evolve. Yesterday’s perfect configuration might not handle today’s traffic patterns. That’s why successful ML teams treat monitoring and optimization as a continuous feedback loop: monitor, identify bottlenecks, optimize, repeat. It’s like having a constant training camp where your model keeps getting stronger, faster, and more efficient with each iteration.
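The "monitor" half of that loop can start very small. The sketch below checks two of the vital signs mentioned earlier, latency and data drift, using only the standard library. The thresholds (200 ms, 3 standard deviations) and the mean-shift drift measure are illustrative assumptions; production systems often use richer statistics and dedicated monitoring tools.

```python
import statistics


def latency_p95(latencies_ms):
    """95th percentile latency using the nearest-rank method."""
    ordered = sorted(latencies_ms)
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n) in integer math
    return ordered[rank - 1]


def mean_shift(reference, live):
    """How many reference standard deviations the live mean has moved."""
    ref_std = statistics.stdev(reference)
    if ref_std == 0:
        return 0.0 if statistics.mean(live) == statistics.mean(reference) else float("inf")
    return abs(statistics.mean(live) - statistics.mean(reference)) / ref_std


def check_health(latencies_ms, reference, live, max_p95_ms=200.0, max_shift=3.0):
    """Return a list of alert messages; an empty list means all clear."""
    alerts = []
    if latency_p95(latencies_ms) > max_p95_ms:
        alerts.append("latency: p95 above threshold")
    if mean_shift(reference, live) > max_shift:
        alerts.append("data drift: live feature mean has shifted")
    return alerts


training_feature = [10.0, 12.0, 11.0, 9.0, 13.0]
healthy = check_health([50] * 99 + [500], training_feature, [11.0, 10.0, 12.0])
unhealthy = check_health([50] * 90 + [500] * 10, training_feature, [20.0, 21.0, 19.0])
```

Each alert is a prompt to go back around the loop: find the bottleneck or the shifted input, optimize or retrain, and keep watching.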

Conclusion

As we navigate through the evolving landscape of ML deployment, it’s clear that success lies not in choosing a single “perfect” strategy, but in orchestrating a symphony of approaches that dance to your project’s unique rhythm. Whether you’re testing the waters with canary deployments, conducting experiments through Multi-Armed Bandits, ensuring safety with blue-green switches, or fine-tuning control with feature flags, each strategy adds its own flavor to the deployment recipe. Like a master chef combining different cooking techniques to create the perfect dish, you might use feature flags within a canary deployment, or blend blue-green switching with shadow deployment for maximum safety and flexibility. Think of these methods as tools in your ML toolkit – sometimes you need a hammer, other times a scalpel, but mastering when to use each one transforms the complex challenge of ML deployment into an art form. The key isn’t just getting your model into production; it’s about crafting a deployment strategy that’s as dynamic and adaptable as the ML models themselves, ensuring reliability, safety, and continuous improvement in our ever-evolving technological landscape.

About the Author

Krishnadas, a Post-Graduate Engineer, is currently applying his skills as part of the dynamic Machine Learning (ML) team at Founding Minds. With a strong foundation in Data Science and Artificial Intelligence, Krishnadas transitioned into this field after pursuing a course in AI to align his career with emerging technologies. He specializes in the end-to-end testing of ML systems, particularly within agile environments. Fueled by a passion for exploration, Krishnadas enjoys a variety of hobbies, from taking road trips and singing to experimenting in the kitchen.