In machine learning, deploying an application efficiently and reliably matters as much as building the model behind it. This blog explores how to streamline the deployment process using FastAPI and Docker, with resources uploaded to and fetched from AWS (Amazon S3). We’ll focus on deployment in an Amazon Linux environment, providing a clear and practical approach to setting up machine learning applications.

Why FastAPI and Docker?

FastAPI is a modern web framework for building APIs quickly and easily. It serves as a tool to create interfaces through which your ML model can interact with other systems. Known for its speed and ease of use, FastAPI is ideal for serving ML models.

Docker, on the other hand, is a containerization tool. It helps you package your application into a container, including your ML model and all its dependencies. This container acts like a virtual box that includes everything your app needs to run, ensuring consistent performance across different environments.

Together, FastAPI and Docker provide a powerful combination for ML model deployment. FastAPI offers the speed and simplicity needed for efficient API creation, while Docker ensures your model and its environment can be easily replicated and scaled.

Leveraging AWS for Cloud Deployment

Deploying FastAPI applications using Docker containers on local servers or self-managed infrastructures offers flexibility and control but comes with significant challenges. Manual scaling, high maintenance costs, and limited availability can hinder your ability to handle increased traffic or variable workloads. Managing infrastructure can become a bottleneck, especially when running resource-intensive machine learning applications.

By leveraging AWS cloud services, specifically Amazon Elastic Container Registry (ECR) and AWS Lambda, you can bypass these limitations. ECR securely stores and manages your Docker images so they are ready for deployment. AWS Lambda then runs your containerized FastAPI application on demand, scaling automatically with incoming traffic. Together they provide a streamlined, scalable, and cost-efficient way to deploy machine learning models without the overhead of managing servers.

Environment Setup

Tools Required

To set up this environment, the following tools and resources are needed:

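- Python 3.8 or higher (the pinned PyTorch version used later requires at least 3.8, and the Dockerfile in this guide uses the Python 3.10 Lambda base image)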

- FastAPI

- Docker

- AWS Account with S3 Access

- Pre-trained machine learning model

Installing FastAPI

Assuming Python and the pip package manager are installed, open a terminal or command prompt and run the following command to install FastAPI:

    pip install fastapi

Installing Docker

Follow the instructions on the Docker website to install Docker on your system.

Creating an S3 Bucket

You’ll need an S3 bucket to store and retrieve images. Create one from the S3 console or with the AWS CLI before continuing, and note its name and region for later steps.
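
Alternatively, the bucket can be created from Python. The sketch below is a minimal example, assuming boto3 is installed and AWS credentials are already configured; the helper names and any bucket name you pass in are hypothetical. The one detail it illustrates is that S3 does not accept `us-east-1` as a `LocationConstraint`, so the configuration block is omitted for that region.

```python
def build_create_bucket_kwargs(bucket_name: str, region: str) -> dict:
    """Build the keyword arguments for the S3 create_bucket call."""
    kwargs = {"Bucket": bucket_name}
    # us-east-1 is the default location and must not be sent as a LocationConstraint.
    if region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return kwargs


def create_bucket(bucket_name: str, region: str) -> None:
    """Create the bucket; needs boto3 and valid AWS credentials at call time."""
    import boto3  # imported lazily so the helper above works without boto3

    s3_client = boto3.client("s3", region_name=region)
    s3_client.create_bucket(**build_create_bucket_kwargs(bucket_name, region))
```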

What We’ll Build

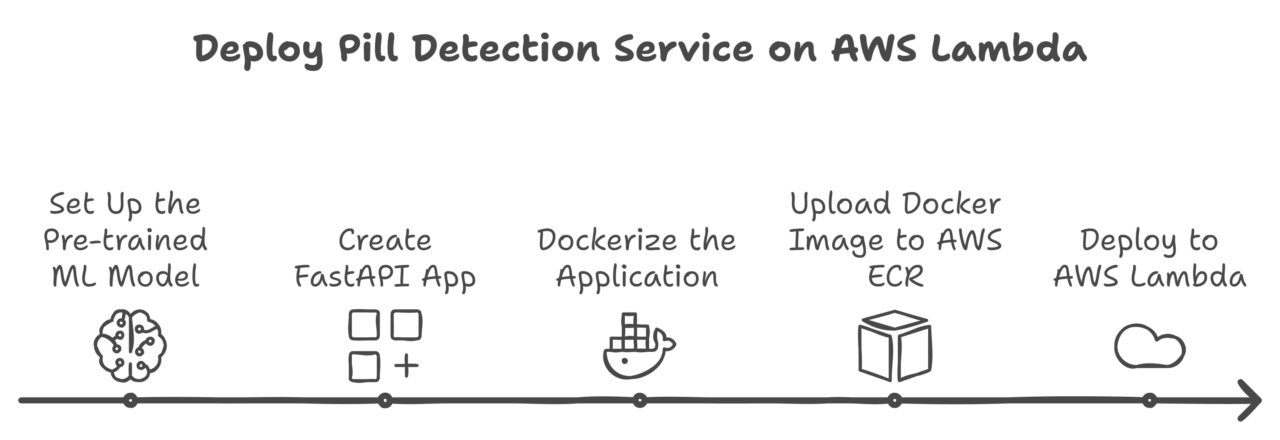

This guide outlines the steps to build an in-house pill-counting application. The app allows users to upload an image, which is processed by a pre-trained YOLO machine-learning model to detect and count pills. The image is stored in an AWS S3 bucket, and the system returns the pill count through a web interface. To get there, we break the work into manageable tasks so that each component can be built and checked on its own before the pieces are combined.

Key Steps in Implementation:

- Set Up the Pre-trained Machine Learning Model

  Train and develop the necessary models and Python inference scripts to enable predictions using a pre-trained YOLO model for detecting pills in images.

- Set Up the FastAPI Application

  Develop a FastAPI application that handles image uploads and processes them using the ML model.

- Create FastAPI App

  - Implement an endpoint to upload images to AWS S3.

  - Process the uploaded images using the pre-trained YOLO model to detect pills.

  - Return the pill count as the response.

- Dockerize the Application

  - Define the Dockerfile that specifies the environment and dependencies.

  - Build the Docker image to package the app and required libraries.

- Upload Docker Image to AWS ECR

  - Create an AWS ECR repository to store the Docker image.

  - Authenticate Docker with AWS ECR, tag the image, and push it to the repository.

- Deploy to AWS Lambda

  - Create a new Lambda function using the container image.

  - Configure the function to use the Docker image from AWS ECR, and set the required environment variables and permissions.

Implementing the FastAPI Application

With the steps laid out, it’s time to dive into the actual implementation. Let’s start by walking through the FastAPI application code, which handles the core functionality: uploading images, processing them with a pre-trained YOLO model, and returning the pill count to the user. The code segments below all come from the Python file (named deploy.py) that hosts the FastAPI deployment code.

1. Initializing the FastAPI Application

    from fastapi import FastAPI

    app = FastAPI()

Next, we load the pre-trained YOLO model, which will later be used to detect objects (in this case, pills) in images. The model path is retrieved from the configuration file. The YoloInference class is responsible for handling the inference process and is initialized with the model path.

    from src.inference import YoloInference
    from asset.config import config

    # Initialize the YOLO detector with the model path from configuration
    # (falls back to an empty string if the key is missing)
    detector = YoloInference(config.get("model", {}).get("MODEL_PATH", ""))

2. Uploading Images via API Endpoint

    import logging

    from fastapi import File, HTTPException, UploadFile
    from fastapi.responses import JSONResponse


    @app.post("/upload_image")
    async def upload_image_endpoint(file: UploadFile = File(...)):
        """
        Endpoint to upload an image to S3.

        Args:
            file (UploadFile): The file to be uploaded.

        Returns:
            JSONResponse: A JSON response indicating success or failure.
        """
        try:
            image_data = await file.read()
            key = file.filename
            response = upload_image_to_s3(image_data, key)
            # upload_image_to_s3 reports failures as {"error": ...};
            # return HTTP 500 in that case instead of a misleading 200
            status_code = 500 if "error" in response else 200
            return JSONResponse(content=response, status_code=status_code)
        except Exception as e:
            logging.error(f"Error uploading image: {e}")
            raise HTTPException(status_code=500, detail="Image upload failed")
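
The endpoint uses the client-supplied filename directly as the S3 key, so two uploads with the same name would overwrite each other. One possible refinement, sketched below, strips any directory components and adds a random prefix. The helper `make_object_key` and the `uploads` prefix are hypothetical and not part of the original application.

```python
import os
import uuid


def make_object_key(filename: str, prefix: str = "uploads") -> str:
    """Return a collision-resistant S3 key derived from a client filename."""
    # Keep only the final path component; normalise Windows separators first.
    base = os.path.basename(filename.replace("\\", "/")) or "image"
    return f"{prefix}/{uuid.uuid4().hex}_{base}"
```

If you adopt something like this, the generated key has to be returned to the client, since the processing endpoint looks the image up by key.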

3. Uploading Images to S3

    import logging
    import os

    import boto3


    def upload_image_to_s3(image: bytes, key: str):
        """
        Upload an image to an S3 bucket.

        Args:
            image (bytes): The image data to be uploaded.
            key (str): The key (filename) for the image in S3.

        Returns:
            dict: A response dictionary indicating success or failure.
        """
        region_name = os.environ.get('REGION')
        bucket_name = os.environ.get('BUCKET_NAME')
        s3_client = boto3.client('s3', region_name=region_name)
        try:
            s3_client.put_object(Bucket=bucket_name, Key=key, Body=image)
            return {"message": "Image uploaded successfully"}
        except Exception as e:
            logging.error(f"Error uploading image to S3: {e}")
            return {"error": "Failed to upload image"}
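
The S3 helpers read `REGION` and `BUCKET_NAME` with `os.environ.get`, which silently returns `None` when a variable is unset, so a misconfiguration only shows up later as an S3 error. A minimal sketch of a fail-fast alternative follows; `load_settings` is a hypothetical helper, not something the original code defines.

```python
import os


def load_settings(environ=os.environ) -> dict:
    """Read REGION and BUCKET_NAME, failing fast when either is missing or empty."""
    missing = [name for name in ("REGION", "BUCKET_NAME") if not environ.get(name)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    return {"region": environ["REGION"], "bucket": environ["BUCKET_NAME"]}
```

Calling this once at startup turns a missing variable into an immediate, readable error. On Lambda, the runtime also exposes the function's region through the reserved `AWS_REGION` variable.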

4. Downloading Images from S3

    import logging
    import os

    import boto3
    import cv2
    import numpy as np


    def download_image_from_s3(path: str):
        """
        Download an image from an S3 bucket and convert it to an OpenCV frame.

        Args:
            path (str): The key (filename) of the image in S3.

        Returns:
            np.ndarray: The image frame as a NumPy array, or None if the download fails.
        """
        region_name = os.environ.get('REGION')
        bucket_name = os.environ.get('BUCKET_NAME')
        s3_client = boto3.client('s3', region_name=region_name)
        try:
            response = s3_client.get_object(Bucket=bucket_name, Key=path)
            image_data = response['Body'].read()
            # Decode the raw bytes into a BGR image array
            np_array = np.frombuffer(image_data, np.uint8)
            frame = cv2.imdecode(np_array, cv2.IMREAD_COLOR)
            return frame
        except Exception as e:
            logging.error(f"Error downloading image from S3: {e}")
            return None

5. Processing Images via API Endpoint

    from fastapi import HTTPException
    from fastapi.responses import JSONResponse


    @app.get("/process_frame")
    async def process_frame_endpoint(key: str):
        """
        Endpoint to process an image frame from S3.

        Args:
            key (str): The key (filename) of the image in S3.

        Returns:
            JSONResponse: A JSON response containing the count of detected objects.
        """
        frame = download_image_from_s3(key)
        if frame is None:
            raise HTTPException(status_code=400, detail="Could not read the image file.")
        count = process_frame(frame)
        return JSONResponse(content={"count": count})
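
To show how a client might call this endpoint after an upload, here is a small sketch using only the standard library. The function names and the base URL you pass in are assumptions for illustration; the key is percent-encoded so that filenames containing spaces or slashes survive the query string.

```python
import json
import urllib.request
from urllib.parse import urlencode


def process_frame_url(base_url: str, key: str) -> str:
    """Build the /process_frame URL, encoding the S3 key as a query parameter."""
    return f"{base_url.rstrip('/')}/process_frame?{urlencode({'key': key})}"


def fetch_pill_count(base_url: str, key: str) -> int:
    """Call /process_frame and return the "count" field of the JSON response."""
    with urllib.request.urlopen(process_frame_url(base_url, key)) as response:
        return json.load(response)["count"]
```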

6. Processing Image Frames

    # PillTracker is a project class; its import is not shown in this article,
    # so import it from wherever it lives in your codebase.
    def process_frame(frame):
        """
        Process the given frame using the PillTracker and YOLO detector.

        Args:
            frame (np.ndarray): The image frame to be processed.

        Returns:
            int: The count of detected objects.
        """
        pill_tracker = PillTracker(frame, detector)
        # The annotated frame is returned as well but is not used here
        count, processed_frame = pill_tracker.process_frame()
        return count
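
The article does not show the internals of `PillTracker`, so the following is only a rough illustration of what a counting step can look like, not the author's implementation. It assumes the detector yields one confidence score per detected box; the function name and the 0.5 threshold are hypothetical.

```python
def count_detections(confidences, threshold=0.5):
    """Count detections whose confidence score meets the threshold."""
    return sum(1 for score in confidences if score >= threshold)
```

Raising the threshold trades missed pills for fewer false positives, so the right value depends on the images being counted.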

7. Creating a Welcome Page

    from fastapi.templating import Jinja2Templates
    from starlette.requests import Request

    templates = Jinja2Templates(directory="templates")


    @app.get("/")
    def welcome(request: Request):
        """
        Welcome page rendered with an HTML template.

        Args:
            request (Request): The request object for the page.

        Returns:
            TemplateResponse: The HTML template response.
        """
        return templates.TemplateResponse("index.html", {"request": request})

8. AWS Lambda Integration

    import mangum

    # Create Mangum handler for AWS Lambda integration
    lambda_handler = mangum.Mangum(app)
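
Mangum sits between Lambda and FastAPI: Lambda invokes the handler with an event dictionary, and the adapter translates it into the ASGI request that FastAPI expects. The sketch below is a deliberately simplified illustration of that idea for an API Gateway HTTP API (payload format 2.0) event. It is not Mangum's actual code, and `event_to_asgi_scope` is a hypothetical name.

```python
def event_to_asgi_scope(event: dict) -> dict:
    """Map an HTTP API (payload v2.0) event to a minimal ASGI HTTP scope."""
    http = event["requestContext"]["http"]
    return {
        "type": "http",
        "method": http["method"],
        "path": event["rawPath"],
        # ASGI expects the query string and header names/values as bytes
        "query_string": event.get("rawQueryString", "").encode(),
        "headers": [
            (name.lower().encode(), value.encode())
            for name, value in event.get("headers", {}).items()
        ],
    }
```

The real adapter also handles the request body, base64 decoding, and converting the ASGI response back into the dictionary Lambda returns.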

Dockerize the Application

This section focuses on writing a Dockerfile to containerize our FastAPI application. We use a multi-stage build, a Docker technique in which a single Dockerfile contains several stages, each handling a different task such as installing dependencies or packaging the application. Only what is explicitly copied from an earlier stage ends up in the final image, which keeps the image smaller and limits it to the runtime dependencies it actually needs. By convention, the file is simply named Dockerfile, without any extension.

Dockerfile

    # Stage 1: Build stage
    # Use the official AWS Lambda Python 3.10 base image from Amazon ECR as the build stage
    FROM public.ecr.aws/lambda/python:3.10.2024.08.09.13 AS build

    # Install necessary system libraries for OpenGL (required for some packages like OpenCV)
    RUN yum install -y mesa-libGL mesa-libGL-devel

    # Set the working directory inside the container for the build stage
    WORKDIR /app

    # Copy only the requirements.txt file to the working directory
    # This allows Docker to cache the installed dependencies, speeding up subsequent builds
    COPY requirements.txt .

    # Install the Python dependencies listed in requirements.txt without caching them
    RUN pip install --no-cache-dir -r requirements.txt

    # Stage 2: Final stage
    # Use the official AWS Lambda Python 3.10 base image from Amazon ECR as the final stage
    FROM public.ecr.aws/lambda/python:3.10.2024.08.09.13

    # Note: system libraries installed in the build stage (such as mesa-libGL) are not
    # carried over. If OpenCV fails to load libGL at runtime, repeat the yum install here.

    # Set the working directory inside the container for the final stage
    WORKDIR /var/task

    # Copy the installed packages from the build stage to the final stage
    COPY --from=build /var/lang/lib/python3.10/site-packages /var/lang/lib/python3.10/site-packages

    # Copy the entire project directory from your host machine into the container
    COPY . /var/task/

    # Set the CMD to specify the Lambda function handler to be invoked by AWS Lambda
    CMD ["deploy.lambda_handler"]

Requirements File

The requirements.txt file lists all the Python dependencies needed for your FastAPI application.

requirements.txt

    # PyTorch packages
    -f https://download.pytorch.org/whl/cpu/torch_stable.html
    torch==2.3.1+cpu
    torchvision==0.18.1+cpu

    # FastAPI and related packages
    fastapi==0.95.0  # Pinned FastAPI version; update as needed
    mangum==0.12.0  # Pinned Mangum version for AWS Lambda integration
    python-multipart  # Required for handling multipart form data in FastAPI

    # AWS SDK
    boto3==1.26.0  # Adjust based on AWS SDK requirements

    # Other packages
    dill==0.3.6  # Serialization library
    Jinja2==3.1.2  # Templating engine
    ultralytics  # Adjust version as needed

Build and Deploy Dockerized FastAPI App

With the Dockerfile and requirements.txt set up, you can build the Docker image, push it to AWS ECR, and deploy it to AWS Lambda using the steps below.

1. Build the Docker Image

Create a Docker image from your Dockerfile:

    docker build -t <image-name> .
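
Before pushing, the image can be exercised locally, because the AWS Lambda base images bundle the Runtime Interface Emulator. Assuming the container was started with `docker run -p 9000:8080 <image-name>`, the sketch below posts a test event to the emulator's invocation URL. The event is a minimal API Gateway HTTP API (payload format 2.0) shape; whether it carries every field your Mangum version expects is an assumption, and the function names are hypothetical.

```python
import json
import urllib.request

# Invocation endpoint exposed by the Runtime Interface Emulator
RIE_URL = "http://localhost:9000/2015-03-31/functions/function/invocations"


def build_test_event(path: str = "/", method: str = "GET") -> dict:
    """Build a minimal HTTP API (payload v2.0) style event for local testing."""
    return {
        "version": "2.0",
        "routeKey": "$default",
        "rawPath": path,
        "rawQueryString": "",
        "headers": {"host": "localhost"},
        "requestContext": {
            "http": {
                "method": method,
                "path": path,
                "protocol": "HTTP/1.1",
                "sourceIp": "127.0.0.1",
            },
        },
        "isBase64Encoded": False,
    }


def invoke_local(event: dict, url: str = RIE_URL) -> dict:
    """POST the event to the local emulator and return the decoded JSON response."""
    request = urllib.request.Request(
        url,
        data=json.dumps(event).encode(),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())
```

With the container running, `invoke_local(build_test_event("/"))` should return the Lambda-style response dictionary for the welcome page.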

2. Authenticate Docker to AWS ECR

Authenticate Docker with your AWS ECR registry:

    aws ecr get-login-password --region <your-region> | docker login --username AWS --password-stdin <aws_account_id>.dkr.ecr.<region>.amazonaws.com

3. Create an ECR Repository

Create a new ECR repository if you haven’t already:

    aws ecr create-repository --repository-name <repository-name> --region <your-region>

4. Tag and Push Docker Image to ECR

Tag your Docker image:

    docker tag <image-name>:latest <aws_account_id>.dkr.ecr.<region>.amazonaws.com/<repository-name>:latest

Push the image to your ECR repository:

    docker push <aws_account_id>.dkr.ecr.<region>.amazonaws.com/<repository-name>:latest

Note: You can obtain the specific push commands for your setup by navigating to the AWS Management Console, going to your ECR repository, and clicking on “View push commands.”
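
The tag and push commands share the same image URI, which is easy to mistype. If you script these steps, a small helper like the hypothetical `ecr_image_uri` below can assemble it from its parts. This is only a convenience sketch; the account ID, region, and repository values are whatever applies to your setup.

```python
def ecr_image_uri(account_id: str, region: str, repository: str, tag: str = "latest") -> str:
    """Assemble the full ECR image URI used by docker tag and docker push."""
    if not (account_id.isdigit() and len(account_id) == 12):
        raise ValueError("AWS account IDs are 12-digit numbers")
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"
```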

5. Deploy the Dockerized FastAPI App to AWS Lambda

5.1 Create a Lambda Function

5.1.1 Navigate to AWS Lambda in the AWS Management Console.

5.1.2 Click “Create function” and select “Container image.”

5.1.3 Choose the ECR image as the source and configure other Lambda settings as needed.

5.2 Configure Lambda Function

5.2.1 Set Environment Variables: Add the environment variables the application reads, such as the S3 bucket name (BUCKET_NAME) and region (REGION) used by the code above, along with any other configuration it needs.

5.2.2 Set Up IAM Role: Ensure the Lambda function’s IAM role has the necessary permissions to access the S3 bucket and other AWS services.
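
For the S3 part of the IAM role, the application only calls `put_object` and `get_object`, so a narrowly scoped policy is enough. The sketch below generates such a policy document; the helper name is hypothetical, and you may need additional statements if your function uses other AWS services.

```python
import json


def s3_access_policy(bucket_name: str) -> str:
    """Return a JSON IAM policy allowing object reads and writes in one bucket."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                # Object-level actions apply to keys inside the bucket
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }
    return json.dumps(policy, indent=2)
```

The resulting JSON can be pasted into the IAM console as an inline policy on the Lambda execution role.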

Conclusion

By using FastAPI and Docker, we’ve built a lightweight, efficient, and scalable machine-learning application. AWS S3 provides simple storage for the uploaded images, while deploying the app through AWS ECR and AWS Lambda simplifies the overall deployment process. This solution keeps the runtime consistent across environments and scales automatically with demand, which makes it a good fit for production. FastAPI’s rapid development capabilities, combined with Docker’s containerization and AWS’s scalable infrastructure, create an adaptable framework for managing machine learning workflows.

This MLOps approach ensures high availability, lower costs, and the ability to quickly deploy models across different environments. As a result, it offers businesses and developers a robust solution to keep their machine-learning applications performant and scalable.

About the Author

Aravind Narayanan is a Software Engineer at FoundingMinds with over 3 years of experience in software development, specializing in Python backend development. He is actively building expertise in DevOps and MLOps, focusing on streamlining processes and optimizing development operations. Aravind is also experienced in C++ and Windows application development. Among his versatile skill set, he is excited to explore the latest technologies in MLOps and DevOps, always seeking innovative ways to enhance and transform development practices.