With the AI industry advancing so quickly, it is easy to feel disoriented by all the new buzzwords and topics. This post aims to clear some of the fog around GenAI, currently the most talked-about topic in technology. Let us start with some frequently encountered concepts in this space.

GenAI (GAI)

Generative Artificial Intelligence refers to AI that generates outputs (text, image, video, audio, etc.) in response to prompts from humans or other AI systems. These prompts are predominantly text but could also be image, video, audio, etc. Examples include ChatGPT, Claude, and Gemini.

LLM

Large Language Models are at the core of every modern text-based GenAI system. They are large artificial neural networks whose input and output are natural language. What makes LLMs a game-changer is the transformer architecture, which lets them weigh the words in a passage against one another and so stay aware of context.

RAG

Retrieval Augmented Generation is a technique that augments the knowledge of an LLM by retrieving relevant information from an external source and adding it to the prompt as context. This is essential when developing conversational bots that provide knowledge on specialized topics.

Public LLMs are trained largely on publicly available data from the open internet, so they know nothing about an organization's private data. Private firms can fill that gap with RAG by supplying their own documents at query time.

Note: RAG differs from fine-tuning. RAG is used when an LLM lacks knowledge on a specific topic. Fine-tuning is used when the LLM's behavior needs to be adjusted, for example the tone or format of its output.
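
To make the retrieve-then-generate flow concrete, here is a minimal sketch. It is not how any particular framework implements RAG: the `DOCUMENTS` list, the word-overlap scoring, and the prompt wording are all assumptions made for illustration, and a real system would use embeddings and send the final prompt to an LLM.

```python
import re

# Hypothetical private knowledge base; in practice these would be chunks
# of an organization's own documents.
DOCUMENTS = [
    "Refunds are processed within 14 days of receiving the returned item.",
    "Support is available on weekdays from 9 am to 5 pm.",
    "Premium plans include priority support and a dedicated account manager.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Rank documents by how many words they share with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question: str, documents: list[str]) -> str:
    """Place the retrieved context in front of the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents))
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


# The resulting prompt is what would be sent to the LLM.
prompt = build_prompt("How long do refunds take?", DOCUMENTS)
print(prompt)
```

The model itself is untouched here; only the prompt changes, which is the practical difference from fine-tuning.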

Agents

An agent is an entity in an AI system that is powered by an LLM of its own and performs a set of predetermined tasks, often by conversing with other agents. Think of a multi-agent system as several mini-chatbots conversing with each other in natural language to accomplish the goal set by the overseer.
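
The idea of agents handing work to each other can be sketched in a few lines. Everything below is hypothetical: the `Agent` class, the `run_crew` helper, and the two `fake_*_llm` functions are stand-ins invented for this example, and a real agent would call an actual LLM instead of a stub.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Agent:
    name: str
    role: str
    llm: Callable[[str], str]  # stand-in for a real LLM call

    def respond(self, message: str) -> str:
        """Wrap the incoming message in a role prompt and ask the LLM."""
        prompt = f"You are a {self.role}. Input: {message}"
        return self.llm(prompt)


def fake_researcher_llm(prompt: str) -> str:
    # Stub: always returns the same canned finding.
    return "Fact: vector stores hold embeddings."


def fake_writer_llm(prompt: str) -> str:
    # Stub: echoes what it received to show the message was passed along.
    received = prompt.split("Input: ", 1)[1]
    return f"Summary based on -> {received}"


def run_crew(goal: str, agents: list[Agent]) -> list[tuple[str, str]]:
    """Pass the goal through each agent in turn, recording every reply."""
    message = goal
    transcript = []
    for agent in agents:
        message = agent.respond(message)
        transcript.append((agent.name, message))
    return transcript


crew = [
    Agent("researcher", "research assistant", fake_researcher_llm),
    Agent("writer", "technical writer", fake_writer_llm),
]
transcript = run_crew("Explain vector stores", crew)
for name, reply in transcript:
    print(f"{name}: {reply}")
```

Each agent only sees natural-language text from the previous one, which mirrors the "mini-chatbots" picture above.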

Vector embeddings

Vector embeddings are numerical representations of unstructured data such as words and sentences, intended for machine interpretation. Each embedding is a list of numbers (a point in a multidimensional vector space), arranged so that words and sentences with similar meanings end up close together.
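
A small sketch shows what "close together" means in practice. The three-dimensional vectors below are hand-picked toy values, not the output of any real embedding model (real embeddings typically have hundreds or thousands of dimensions). Cosine similarity is used here as one common way to compare embeddings.

```python
import math

# Toy embeddings, chosen by hand purely for illustration.
EMBEDDINGS = {
    "cat": [0.9, 0.8, 0.1],
    "kitten": [0.85, 0.9, 0.15],
    "car": [0.1, 0.2, 0.95],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


cat_kitten = cosine_similarity(EMBEDDINGS["cat"], EMBEDDINGS["kitten"])
cat_car = cosine_similarity(EMBEDDINGS["cat"], EMBEDDINGS["car"])
print(f"cat vs kitten: {cat_kitten:.3f}")
print(f"cat vs car:    {cat_car:.3f}")
```

With these toy values, "cat" scores far higher against "kitten" than against "car", which is the behavior a good embedding model aims for.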

Vector stores

Vector stores hold vector embeddings and support vector searches, which return the stored items closest to a query embedding. In GenAI applications they are fundamental for storing knowledge and contextual information in a form that can be retrieved efficiently and passed to an LLM.
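
As a rough illustration of a vector search, the sketch below keeps embeddings in a plain Python list and compares the query against every entry. The `InMemoryVectorStore` class and the two-dimensional vectors are assumptions for this example only; production vector stores rely on specialized indexes rather than a brute-force scan.

```python
import math
from dataclasses import dataclass, field


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


@dataclass
class InMemoryVectorStore:
    """Hypothetical brute-force vector store kept entirely in memory."""

    items: list[tuple[list[float], str]] = field(default_factory=list)

    def add(self, vector: list[float], text: str) -> None:
        """Store an embedding together with the text it represents."""
        self.items.append((vector, text))

    def search(self, query: list[float], top_k: int = 2) -> list[str]:
        """Return the texts whose embeddings are most similar to the query."""
        ranked = sorted(self.items, key=lambda item: cosine(query, item[0]), reverse=True)
        return [text for _, text in ranked[:top_k]]


store = InMemoryVectorStore()
store.add([0.9, 0.1], "Refund policy")
store.add([0.8, 0.3], "Return shipping")
store.add([0.1, 0.9], "Office hours")

results = store.search([1.0, 0.0], top_k=2)
print(results)
```

The texts returned by `search` are the pieces of context that would then be handed to the LLM.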

Multimodality

Multimodality refers to the capability of an AI to understand different forms of input such as text, image, audio, and video. This capability is still on the bleeding edge, so only some models support it.

LangChain, LlamaIndex, CrewAI

These three are among the many frameworks for aiding in GenAI development. For developers, the noteworthy point here is that they are open-source and have good community support.

LangChain harnesses the power of LLMs through several layers of abstraction, for beginners and experts alike. The docs are the recommended place to get started. For a thorough grasp of the concepts, take a look at the conceptual guide.

LlamaIndex mainly focuses on indexing and optimized vector searches in vector databases (docs).

CrewAI specializes in building agents, configuring their knowledge base and linking them to each other to form a ‘crew’ of AI agents (docs).

| Feature | LangChain | LlamaIndex | CrewAI |
| --- | --- | --- | --- |
| Open source | Yes | Yes | Yes |
| Flexibility | High | Medium | Low |
| Agents | Supported | Supported | Specialized support |
| RAG, Indexing & Data retrieval | Supported | Specialized support | Not supported |
| Multimodality | Limited support | Limited support | Not supported |

Amazon Bedrock, Azure OpenAI

Amazon Bedrock is a fully managed service offered by AWS to aid in the development of GenAI applications. It provides a variety of popular foundation models (FMs) such as Amazon Titan, Claude, Llama, Jurassic, and Stable Diffusion, which can either be used out of the box or customized and fine-tuned.

Azure OpenAI similarly provides access to OpenAI's FMs, including GPT-4.

The choice between the two depends on several factors, mainly pricing, autoscaling, and integration with existing services. The LLM chosen and the customizations applied will contribute the most to the bill; the rest will come from the supporting infrastructure.

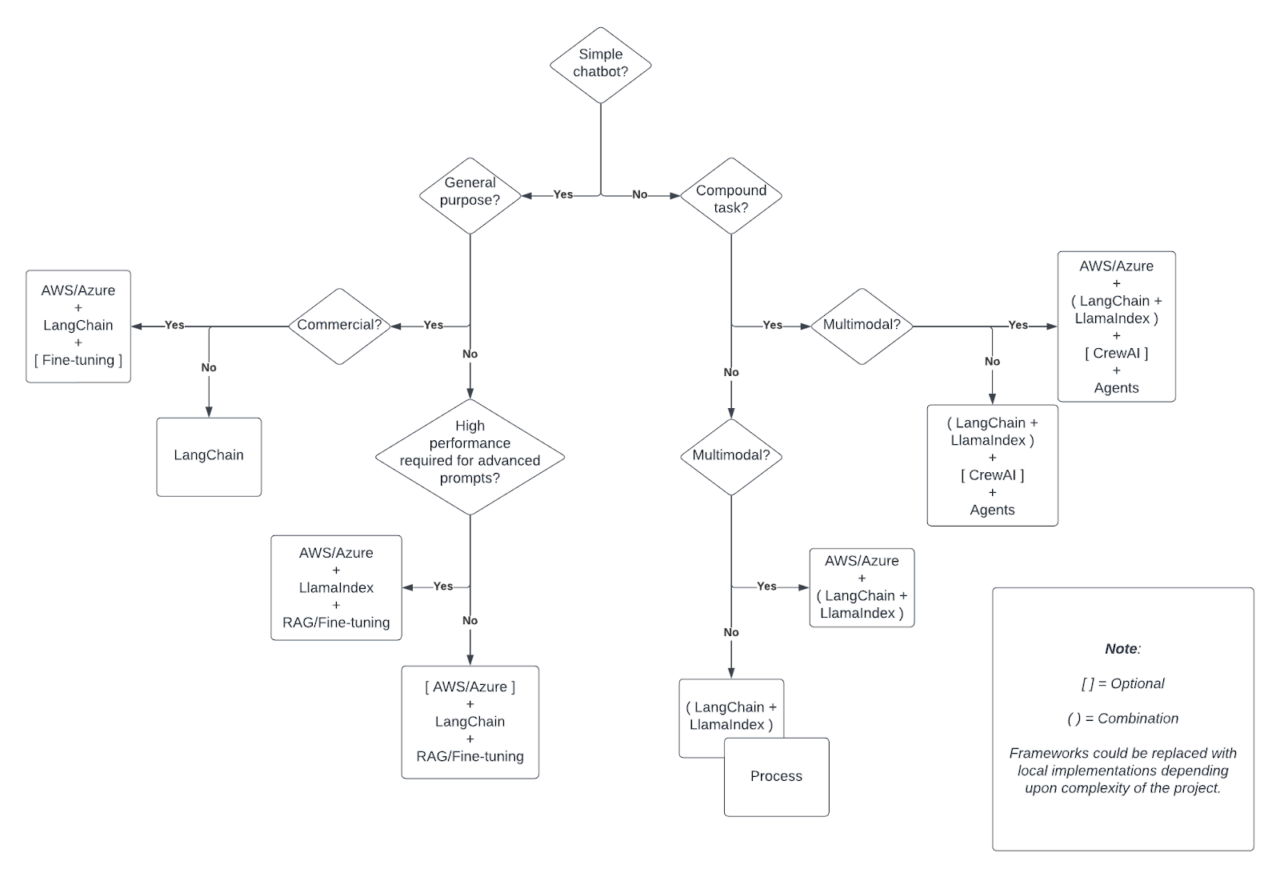

Finding the right tools

All the tools listed so far are perfectly suitable for fulfilling many use cases, be it a single framework or a mix of frameworks and services. Here are some pointers to find an ideal stack:

Amazon Bedrock Pricing: https://aws.amazon.com/bedrock/pricing/

Azure OpenAI Pricing: https://azure.microsoft.com/en-us/pricing/details/cognitive-services/openai-service/

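Note: The definition of a ‘token’ varies from model to model depending on the tokenization method. Most models split text into sub-word pieces, so a long word may count as several tokens, and spaces and punctuation can count as well. It is helpful to keep this in mind while navigating the pricing sections. For rough estimates, counting one word as one token is a workable starting point, though English text usually comes out somewhat higher (a common rule of thumb is about four tokens for every three words).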
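
The arithmetic behind a per-token bill can be sketched as follows. The prices used here are made-up placeholders, not actual Bedrock or Azure OpenAI rates, and the `estimate_cost` helper is hypothetical; check the pricing pages above for current figures. The `tokens_per_word` parameter defaults to the one-word-one-token approximation and can be raised for a more conservative estimate.

```python
def estimate_cost(
    input_words: int,
    output_words: int,
    input_price_per_1k: float,
    output_price_per_1k: float,
    tokens_per_word: float = 1.0,
) -> float:
    """Estimate the cost of one request from word counts and per-1,000-token prices."""
    input_tokens = input_words * tokens_per_word
    output_tokens = output_words * tokens_per_word
    input_cost = (input_tokens / 1000) * input_price_per_1k
    output_cost = (output_tokens / 1000) * output_price_per_1k
    return input_cost + output_cost


# Placeholder prices in USD per 1,000 tokens (assumptions, not real rates).
cost = estimate_cost(
    input_words=2000,
    output_words=500,
    input_price_per_1k=0.003,
    output_price_per_1k=0.015,
)
print(f"Estimated cost per request: ${cost:.4f}")
```

Input and output tokens are kept separate because providers commonly price them differently.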

Conclusion

Whether for experienced GenAI developers or beginners, utilizing the right tools goes a long way. If the use case is on the lighter side (like chatbots with minimal RAG and infrastructure), frameworks could be the choice for swift development. What is imperative is choosing the right LLM and fine-tuning it. On the other hand, for a long-term project that may undergo major changes in its lifecycle, it is advisable to keep the dependencies on third-party AI frameworks to a minimum.

As the frameworks are still maturing, they may not be robust enough, so careful planning is necessary. Another possibility is a middle route in which the best features of each framework are integrated into the pipeline. However, for large commercial products, tightly coupling AI services with third-party AI frameworks might lead to performance and dependency pitfalls in the future, so native implementations might be required.
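
One way to keep such coupling loose is to hide the framework or provider behind a small interface owned by the application. The sketch below is only an illustration of that idea: `TextGenerator`, `EchoGenerator`, and `UppercaseGenerator` are hypothetical names, and the two backends are trivial placeholders standing in for a framework-based and a native implementation.

```python
from typing import Protocol


class TextGenerator(Protocol):
    """The only interface the application code depends on."""

    def generate(self, prompt: str) -> str: ...


class EchoGenerator:
    """Placeholder backend; imagine this one wrapping a third-party framework."""

    def generate(self, prompt: str) -> str:
        return f"[echo] {prompt}"


class UppercaseGenerator:
    """Second placeholder backend; imagine this one being a native implementation."""

    def generate(self, prompt: str) -> str:
        return prompt.upper()


def answer_question(question: str, generator: TextGenerator) -> str:
    """Application logic that never imports a framework directly."""
    return generator.generate(f"Question: {question}")


# Swapping the backend requires no change to answer_question.
print(answer_question("What is RAG?", EchoGenerator()))
print(answer_question("What is RAG?", UppercaseGenerator()))
```

If a framework later proves too limiting, only the class that wraps it needs to be replaced.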

References

- LangChain – https://python.langchain.com/v0.2/docs/introduction/

- LlamaIndex – https://docs.llamaindex.ai/en/stable/

- CrewAI – https://docs.crewai.com/

- Amazon Bedrock – https://docs.aws.amazon.com/bedrock/latest/userguide/what-is-bedrock.html

- Azure OpenAI – https://learn.microsoft.com/en-us/azure/ai-services/openai/

- Amazon Bedrock Pricing – https://aws.amazon.com/bedrock/pricing/

- Azure OpenAI Pricing – https://azure.microsoft.com/en-us/pricing/details/cognitive-services/openai-service/

- Amazon Bedrock vs Azure OpenAI: A Costly Affair? – https://www.stork.ai/blog/amazon-bedrock-vs-azure-openai-a-costly-affair

- Amazon Bedrock vs Azure OpenAI: Pricing Considerations – https://www.vantage.sh/blog/azure-openai-vs-amazon-bedrock-cost

About the Author

Jeril Monzi Jacob is passionate about exploring cutting-edge technologies at the forefront of industry trends, allowing him to bring innovative solutions to his work. Jeril works on various Artificial Intelligence and other initiatives at Founding Minds. During his free time, he likes to work out, build DIY side projects, play and develop games, and watch movies and anime.