Tired of generic chatbots? Let’s build one that answers from your own data. By combining Large Language Models (LLMs) with Vector Databases and Retrieval-Augmented Generation (RAG), we can create an AI assistant that grounds its responses in retrieved context, which makes them more precise and reduces hallucination.

In this guide, you’ll learn to:

- Harness the power of VectorDB, RAG, LLMs, and Gradio

- Build a chatbot that delivers accurate answers from your private data

- Deploy your intelligent assistant step-by-step

Ready to create an AI that thinks just for you? Let’s dive in!

Vector Databases (VectorDB)

A vector database is a specialized database optimized for storing, retrieving, and managing vector embeddings. Unlike traditional databases, which are designed for structured data, it handles high-dimensional data efficiently, which makes it a quiet workhorse behind many advanced ML and NLP tasks.

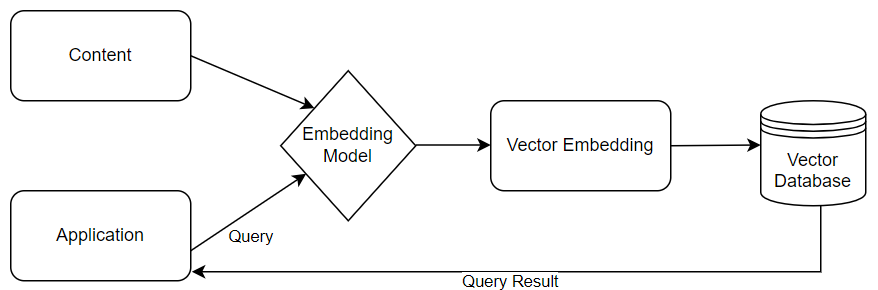

Workflow of VectorDB

Key components of VectorDB

- Vector Embeddings: Numerical representations of data in high-dimensional vectors capturing semantic meaning, applicable to words, sentences, and documents.

- Embedding Model: A machine learning model that converts raw data (text, images, or audio) into high-dimensional vectors (embeddings). Examples include Word2Vec and BERT for text and CNNs for images.

- Similarity Search: Finding vectors in a vector database that are most similar to a query vector using metrics like Euclidean distance, cosine similarity, or inner product.
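To make the three similarity metrics concrete, here is a minimal pure-Python sketch. The toy 3-dimensional vectors are made-up values for illustration only; real embeddings have hundreds of dimensions, and a vector database computes these metrics with optimized routines.

```python
import math

def euclidean_distance(a, b):
    """Straight-line (L2) distance; smaller means more similar."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def inner_product(a, b):
    """Dot product; larger means more similar."""
    return sum(x * y for x, y in zip(a, b))

def cosine_similarity(a, b):
    """Inner product of the length-normalised vectors, in [-1, 1]."""
    norm_a = math.sqrt(inner_product(a, a))
    norm_b = math.sqrt(inner_product(b, b))
    return inner_product(a, b) / (norm_a * norm_b)

# Toy "embeddings" (hypothetical values, not produced by a real model)
query = [1.0, 0.0, 1.0]
doc_a = [2.0, 0.0, 2.0]  # same direction as the query, twice as long
doc_b = [0.0, 1.0, 0.0]  # orthogonal to the query

print(cosine_similarity(query, doc_a))   # close to 1: same direction
print(euclidean_distance(query, doc_a))  # non-zero: the lengths differ
print(cosine_similarity(query, doc_b))   # 0: nothing in common
```

Note how `doc_a` is a perfect match under cosine similarity but not under Euclidean distance: cosine ignores vector length, while Euclidean distance does not.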

FAISS VectorDB

In our blog, we leverage FAISS (Facebook AI Similarity Search) to handle efficient similarity search and clustering of dense vectors. FAISS is a powerful library designed to search through large sets of vectors, even those exceeding RAM capacity. This capability is crucial for our needs, as it ensures that we can perform fast and accurate searches across extensive datasets. Additionally, FAISS provides robust tools for evaluation and parameter tuning, enabling us to optimize our search algorithms for better performance.

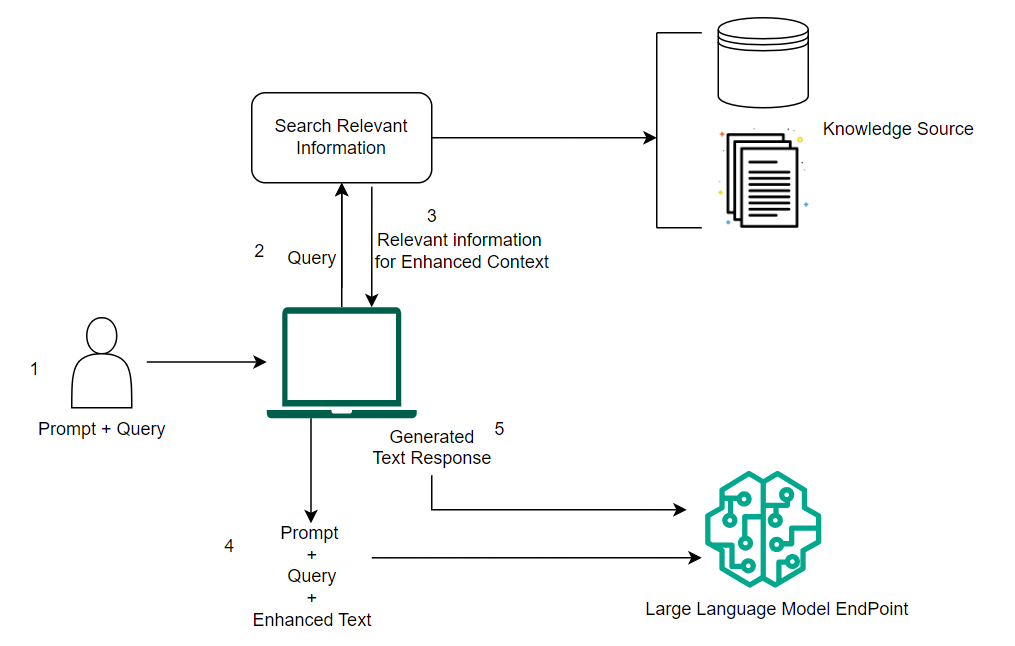

Retrieval-Augmented Generation (RAG)

RAG integrates information retrieval systems with generative large language models (LLMs) to provide contextually relevant and accurate responses. This approach addresses issues of hallucination and context in LLMs, ensuring more reliable and coherent outputs.

RAG = Retrieval-based model + Generative-based model

- Retrieval-based models: Extract information from external knowledge sources like databases, articles, or websites.

- Generative-based models: Generate text using language generation capabilities.
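The two halves of RAG can be sketched in a few lines. This is a toy illustration, not the implementation used later in this guide: word counts stand in for a real embedding model, and the helper names (`bag_of_words`, `retrieve`, `build_prompt`) are hypothetical.

```python
import math
from collections import Counter

def bag_of_words(text):
    """Stand-in for an embedding model: word counts as a sparse vector."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question, documents, k=1):
    """Retrieval step: return the k documents most similar to the question."""
    q = bag_of_words(question)
    ranked = sorted(documents, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)
    return ranked[:k]

def build_prompt(question, context_docs):
    """Augmentation step: place the retrieved text in front of the question."""
    context = "\n".join(context_docs)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

documents = [
    "FAISS is a library for similarity search over dense vectors",
    "Gradio builds web interfaces for machine learning models",
]
question = "Which library handles similarity search"
top_docs = retrieve(question, documents, k=1)
prompt = build_prompt(question, top_docs)
print(prompt)  # this string would then be passed to the LLM (generation step)
```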

Key components of RAG

- The User

- VectorDB (technique used for retrieval)

- Generative AI System (LLMs)

Workflow of RAG

LLM Model

To build an effective and interactive chatbot, we are utilizing the “microsoft/Phi-3-mini-4k-instruct” model from Microsoft’s Phi-3 series. This model is a compact, efficient transformer-based model optimized for various NLP tasks, especially instruction-based applications. Fine-tuned on instructional data, it generates detailed, contextually appropriate responses, making it ideal for educational tools, customer support, content creation, and interactive applications. Despite its small size, it delivers high-quality text generation, demonstrating versatility across different use cases. The model and its tokenizer are easily integrated and deployed via Hugging Face.

Phi-3-mini Model Specifications

- Architecture: 3.8B parameters, dense decoder-only Transformer model.

- Fine-tuning: Supervised Fine-tuning (SFT) and Direct Preference Optimization (DPO) ensure alignment with human preferences and safety guidelines.

- Inputs: Text. It is best suited for prompts using a chat format.

- Context length: 4K tokens

- Outputs: Generated text in response to the input

Chat Format

```
<|system|>
You are a helpful assistant.<|end|>
<|user|>
How to explain Internet for a medieval knight?<|end|>
<|assistant|>
```

where the model generates the text after `<|assistant|>`.
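As a rough sketch, the chat format above can be assembled from a list of messages with a small helper. The function name `build_phi3_prompt` is hypothetical. In practice, the Hugging Face tokenizer's `apply_chat_template` method can render the model's own template for you.

```python
def build_phi3_prompt(messages):
    """Render a list of {"role", "content"} dicts in the chat format shown above."""
    parts = [f"<|{m['role']}|>\n{m['content']}<|end|>\n" for m in messages]
    parts.append("<|assistant|>\n")  # leave the assistant turn open for the model
    return "".join(parts)

prompt = build_phi3_prompt([
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "How to explain Internet for a medieval knight?"},
])
print(prompt)
```

Ending the prompt with an open `<|assistant|>` tag is what tells the model that it is its turn to speak.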

Gradio: Simplifying Machine Learning Interfaces

Gradio is an open-source Python package that transforms complex machine-learning models into accessible, user-friendly applications. While machine learning traditionally requires specialized hardware, software, and technical expertise, Gradio breaks down these barriers by enabling developers to create intuitive interfaces with just a few lines of code. These interfaces can be easily embedded in Python notebooks or shared through URLs, making ML models more collaborative and accessible. The framework supports a wide range of customizable UI components compatible with popular ML frameworks like TensorFlow and PyTorch, as well as standard Python functions.

How to build a Chatbot given Contextual and Non-Contextual inputs

Now that we understand our key components, let’s bring them together to build our intelligent chatbot. Our implementation follows a logical three-step architecture.

First, we’ll implement a vector database (VectorDB) as our foundation, leveraging the embedding capabilities we discussed to enable efficient similarity search.

Next, we’ll connect this VectorDB to our chosen LLM, creating a bridge between our stored knowledge and the generation capabilities we explored earlier.

Finally, we’ll wrap everything in a Gradio interface, using the UI components we covered to create an accessible user experience. This architecture ensures that our chatbot can not only understand and retrieve relevant information but also present it in a way that’s intuitive for users.

Let’s dive in!

1. Implementing a vector database (VectorDB)

- Environmental Setup: Prepare the tools and libraries needed to implement the VectorDB: Python, FAISS, and KeyBERT.

```python
# Use faiss-cpu instead on machines without a CUDA GPU
!pip install faiss-gpu keybert
```

- Data Cleaning: Data cleaning is a crucial preprocessing step that optimizes text data for machine learning applications. By removing URLs, HTML tags, special characters, and redundant whitespaces, we create a streamlined dataset that improves model performance in several ways. Clean data enables more accurate tokenization, reduces processing overhead, and minimizes storage requirements. This sanitization process also adds a layer of security by filtering out potentially malicious elements like harmful links.

```python
import re

# Function to clean the given text
def clean_text(text):
    text = re.sub(r'http\S+|www\S+|https\S+', '', text, flags=re.MULTILINE)  # Remove URLs
    text = re.sub(r'<.*?>', '', text)         # Remove HTML tags
    text = re.sub(r'[^a-zA-Z\s]', '', text)   # Remove special characters and numbers
    text = re.sub(r'\s+', ' ', text).strip()  # Remove extra whitespace
    return text
```
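To see what this cleaning step does in practice, the snippet below applies the same four substitutions to two sample strings. The function is repeated so the example runs on its own, and the sample inputs are made up.

```python
import re

def clean_text(text):
    text = re.sub(r'http\S+|www\S+|https\S+', '', text, flags=re.MULTILINE)  # URLs
    text = re.sub(r'<.*?>', '', text)         # HTML tags
    text = re.sub(r'[^a-zA-Z\s]', '', text)   # everything except letters and whitespace
    text = re.sub(r'\s+', ' ', text).strip()  # runs of whitespace
    return text

print(clean_text("Visit https://example.com <b>now</b>! Call 555-1234."))  # Visit now Call
print(clean_text("GPT-4 costs $20"))                                       # GPT costs
```

The second example shows a trade-off worth keeping in mind: digits and punctuation are removed too, so version numbers, prices, and dates disappear from the indexed text. If your data depends on them, the third pattern is the one to relax.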

- FAISS Implementation:

- Initialize the variables and reset the global variables: To maintain a clean and predictable state in your application, particularly when handling multiple operations or datasets sequentially, it’s essential to reset global variables. Resetting the global variables ensures that previous data does not interfere with new operations, providing a clean slate for each new dataset.

```python
keywords_list = []      # List of keywords we get from the KeyBERT model
embeddings_chunks = []  # List of embeddings

# Function to reset the global variables
def reset_global_variables():
    global chunk_index, embeddings_chunks
    embeddings_chunks = []  # Clear previous embeddings so the next operation doesn't mix new data with old data
    chunk_index = None      # Drop the previous index to prevent misalignment when processing a new text
```

- Embedding Model & Tokenizer: The BERT-base-uncased model serves as our text embedding solution, offering bidirectional context understanding through its 12-layer Transformer architecture. This pre-trained model, available via Hugging Face, processes up to 512 tokens and handles text in lowercase format for improved efficiency. We’ll utilize both the model and its corresponding tokenizer for converting text into meaningful vector representations.

```python
from transformers import BertTokenizer, BertModel

# Embedding Model
embedding_model_name = 'bert-base-uncased'
embedding_model = BertModel.from_pretrained(embedding_model_name)          # Load the BERT model
embedding_tokenizer = BertTokenizer.from_pretrained(embedding_model_name)  # Load the BERT tokenizer
```

- Text Chunking: Check the number of tokens and split the text into chunks. Every vector in a vector database must have the same dimensionality (768 for bert-base-uncased), and the embedding model itself accepts at most 512 tokens per input. The most common solution is to split the given text into “chunks”, create an embedding for each chunk, and store all the chunk embeddings in the vector database.

```python
# Function to report how many tokens the given text contains
def is_text_too_long(text, max_length=512):
    tokens = embedding_tokenizer.encode(text, add_special_tokens=False)  # Tokenize the text without adding special tokens
    num_tokens = len(tokens)
    return f"Number of tokens in the given text: {num_tokens}"

# Function to split the given text into chunks
def split_text_to_chunk(text):
    overlap = 50  # Overlap between consecutive chunks, so context is preserved across chunk boundaries
    max_length = 512
    tokens = embedding_tokenizer.encode(text, add_special_tokens=False)  # Tokenize the text without adding special tokens
    # Reserve room for the special tokens so each chunk (including them) stays within max_length
    max_length -= embedding_tokenizer.num_special_tokens_to_add(pair=False)
    chunks = []
    for i in range(0, len(tokens), max_length - overlap):
        chunk = tokens[i:i + max_length]  # Extract a chunk of tokens
        chunk_text = embedding_tokenizer.decode(chunk, clean_up_tokenization_spaces=True)  # Decode the chunk back to text
        chunks.append(chunk_text)
    print(chunks)
    return chunks
```
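The sliding-window arithmetic is easier to follow with concrete numbers. The sketch below reproduces only the offsets, with no tokenizer involved, assuming the usable chunk length is 510 tokens (512 minus the two special tokens BERT adds to a single sequence) and the overlap is 50. The helper name `chunk_windows` is hypothetical.

```python
def chunk_windows(num_tokens, max_length=510, overlap=50):
    """Return (start, end) token offsets for overlapping chunks."""
    stride = max_length - overlap  # each new chunk starts 460 tokens after the previous one
    return [(start, min(start + max_length, num_tokens))
            for start in range(0, num_tokens, stride)]

windows = chunk_windows(1000)
print(windows)  # [(0, 510), (460, 970), (920, 1000)]
```

A 1,000-token text therefore yields three chunks, and each chunk begins with the last 50 tokens of the one before it.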

- Generate the vector embeddings & implement vectordb: We will generate vector embeddings for our text chunks to prepare them for insertion into the vector database. These embeddings transform text into numerical representations that FAISS can efficiently search and cluster.

```python
import numpy as np
import torch

embeddings_chunks = []  # List of embeddings of the chunks

# Function to generate an embedding for each chunk
def embed_text(sentence_or_chunk):
    for chunk in sentence_or_chunk:
        # Tokenize the chunk with truncation and padding, return PyTorch tensors
        inputs = embedding_tokenizer(chunk, return_tensors='pt', truncation=True, padding=True)
        with torch.no_grad():  # Disable gradient calculation for efficiency
            outputs = embedding_model(**inputs)  # Get the model outputs for the tokenized inputs
        cls_embedding = outputs.last_hidden_state[:, 0, :]  # Extract the CLS token embedding from the last hidden state
        embeddings_chunks.append(cls_embedding.numpy())  # Store as a NumPy array, the format FAISS expects
    return embeddings_chunks

# Function to create an index in the vector database using the embeddings array
def generate_embeddings(embeddings_chunks):
    embeddings_array = np.vstack(embeddings_chunks)  # Stack the list of embeddings into a single NumPy array
    chunk_index = vector_db(embeddings_array)  # Create an index in the vector database using the embeddings array
    return chunk_index
```

For example, using an embedding framework, text like ‘name’ can be transformed into a numerical representation like:

```
[-1.12725616e-01 -5.19371144e-02 -6.94938377e-02 2.93748770e-02 -7.56825879e-02 8.83060396e-02 -5.42510450e-02 -1.52141722e-02]
```

```python
import faiss

# Function to implement the FAISS VectorDB
def vector_db(embeddings_array):
    dimension = embeddings_array.shape[1]
    chunk_index = faiss.IndexFlatL2(dimension)  # Create a FAISS index for L2 distance with the given dimension
    chunk_index.add(embeddings_array)
    print(f"Number of chunks indexed: {chunk_index.ntotal}")
    return chunk_index
```
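Conceptually, a flat L2 index performs an exhaustive search: it compares the query against every stored vector and keeps the closest ones. The pure-Python sketch below mimics that idea on toy 2-dimensional vectors. The function `flat_l2_search` is a hypothetical stand-in for illustration, not FAISS code, and it reports squared L2 distances, which to my understanding is also what `IndexFlatL2` returns.

```python
def flat_l2_search(index_vectors, query, k):
    """Exhaustive nearest-neighbour search using squared L2 distance."""
    scored = []
    for idx, vec in enumerate(index_vectors):
        dist = sum((x - y) ** 2 for x, y in zip(vec, query))
        scored.append((dist, idx))
    scored.sort()  # smallest distance first
    top = scored[:k]
    return [d for d, _ in top], [i for _, i in top]

vectors = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]]  # made-up "embeddings"
distances, indices = flat_l2_search(vectors, query=[0.9, 1.2], k=2)
print(indices)  # [1, 0]
```

Exhaustive search is exact but scales linearly with the number of stored vectors. That is fine for a handful of chunks, as in this guide; for much larger collections FAISS also offers approximate index types.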

- Create Search vector and get the relevant content: We’ll use KeyBERT for keyword extraction, which will help us identify key phrases within the text. These keywords can then be used as search queries to find the most relevant information in our vector database.

```python
import numpy as np
import torch
from keybert import KeyBERT

# Function to get the main keyword from the given text
def searchquery_keyword(text):
    kw_model = KeyBERT()  # Initialize the KeyBERT model
    keywords = kw_model.extract_keywords(text, top_n=1)  # Extract the top keyword from the text
    first_keyword = keywords[0][0] if keywords else None
    return first_keyword

# Function to get the indices of the relevant chunks given the search query
def search_query(searchquery, chunk_index, k=5):
    global keywords_list
    keywords_list.append(searchquery)
    keyword_to_search = keywords_list[-1]
    # Tokenize the keyword with truncation and padding, return PyTorch tensors
    inputs = embedding_tokenizer(keyword_to_search, return_tensors='pt', truncation=True, padding=True)
    # Generate the embedding for the query
    with torch.no_grad():
        outputs = embedding_model(**inputs)
    query_embedding = outputs.last_hidden_state[:, 0, :].numpy().reshape(1, -1)  # Extract and reshape the CLS token embedding
    distances, indices = chunk_index.search(query_embedding, k)  # Search the index for the top k similar embeddings
    return distances, indices

# Function to extract the similar chunks given the indices
def get_similar_sentences(chunks, indices):
    print(indices)
    # FAISS pads the result with -1 when fewer than k vectors are indexed, so skip those entries
    similar_text = [chunks[idx] for idx in indices[0] if idx != -1]
    print(similar_text)
    return similar_text
```

2. Integrating VectorDB with Generative AI System (LLMs)

- LLM Model:

```python
from transformers import AutoTokenizer, AutoModelForCausalLM, pipeline

# LLM Model
llm_model_name = 'microsoft/Phi-3-mini-4k-instruct'
phi3_model = AutoModelForCausalLM.from_pretrained(llm_model_name, torch_dtype="auto", trust_remote_code=True)  # Load the language model
phi3_tokenizer = AutoTokenizer.from_pretrained(llm_model_name)  # Load the tokenizer
```

Check if GPU support is available or not:

```python
import torch

# Check if GPU support (CUDA) is available or not
if torch.cuda.is_available():
    device = torch.device("cuda")
    phi3_model.to(device)  # Move the model to the GPU
else:
    device = torch.device("cpu")
print(f"Running on device: {device}")
```

- Text Generation Pipeline: Pipelines offer an efficient way to use models for inference, especially when we need to handle large-scale data or deploy models on devices with limited resources.

- Quantization: A technique that reduces the computational load and memory footprint of a neural network by representing its weights and activations with lower-precision data types, such as bfloat16 or int8, instead of the usual float32. Going from 32-bit floats to 16-bit floats (bfloat16) halves the memory needed for the weights, which is critical when deploying large models on devices with limited resources.

- Generating and Processing Text Responses: With the text generation pipeline set up, we can now focus on generating and processing text responses based on contextual and non-contextual inputs.

```python
import torch

# Function for creating the text generation pipeline
def text_gen_pipeline():
    text_generation_pipeline = pipeline(
        "text-generation",                              # Specify the task
        model=phi3_model,                               # Use the previously loaded model
        tokenizer=phi3_tokenizer,                       # Use the previously loaded tokenizer
        device=0 if torch.cuda.is_available() else -1,  # Use the GPU if available, else the CPU
        do_sample=False,                                # Greedy decoding, so the output is deterministic
        num_return_sequences=1,                         # Generate one sequence per request
        max_new_tokens=4000,                            # Maximum number of new tokens to generate
        truncation=True,                                # Truncate inputs that exceed the maximum length
        eos_token_id=32007,                             # End-of-sequence token ID (<|end|>)
        model_kwargs={"torch_dtype": torch.bfloat16},   # Data type to use for the model's tensors
    )
    return text_generation_pipeline

# Function to process the response we get from the model
def process_response(generator, input_to_model):
    output = generator(input_to_model, return_full_text=False)  # Generate text, without echoing the prompt
    generated_text = output[0]['generated_text']  # Extract the generated text from the output
    return generated_text
```
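To put the quantization point above in numbers, here is a back-of-the-envelope calculation of the memory needed just to hold the weights of a 3.8B-parameter model at different precisions. It is an approximation under simple assumptions: it ignores activations, the KV cache, and framework overhead, and it uses decimal gigabytes.

```python
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "int8": 1}

def weight_memory_gb(num_params, dtype):
    """Approximate memory for the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

phi3_mini_params = 3.8e9  # parameter count quoted in the model specifications above
for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: {weight_memory_gb(phi3_mini_params, dtype):.1f} GB")
# float32: 15.2 GB
# bfloat16: 7.6 GB
# int8: 3.8 GB
```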

- Contextual Input: It involves providing specific information on a particular topic, allowing you to ask the chatbot questions related solely to that given information. The bot will then generate answers based exclusively on the provided content.

```python
# Function to get the answer to a question, given the retrieved context that is passed to the model
def get_answer_given_context(question, similar_sentences):
    # Chat template that the model accepts
    model_chat_template = """
<|system|>
You are a question answer assistant chatbot named "QA Bot". Your expertise is exclusively in providing information and advice only on the given content. This includes content-related question-answer queries. You do not provide information outside this scope. If a question is not about the content, respond with, "I specialize only in content-related queries".<|end|>
<|assistant|>QA Bot here! Ask me anything related to the content you provide. Content only, though!<|end|><|user|>
"""
    template = """<|assistant|>Ask questions regarding this content.<|end|>
<|user|>
"""
    input_to_model = (
        model_chat_template
        + "\n"
        + ".".join(similar_sentences)  # The retrieved chunks act as the user's content
        + "<|end|>"
        + template
        + "\n"
        + question
        + "<|end|>"
        + "<|assistant|>"  # Open the assistant turn so the model generates the answer
    )
    response = process_response(text_gen_pipeline(), input_to_model)
    return response
```

- Non-Contextual Inputs: It allows you to ask the chatbot questions without providing any prior specific information. The bot will generate answers based on general knowledge and available data, without being confined to a specific context.

```python
# Function to get the answer to the question if no context is given
def get_answer_without_context(question):
    # Chat template that the model accepts, ending with an open assistant turn
    model_chat_template = "<|user|>\n" + question + "<|end|>\n<|assistant|>\n"
    response = process_response(text_gen_pipeline(), model_chat_template)
    return response
```

3. Implementing UI for the Chatbot service using Gradio

- Environmental Setup:

```python
!pip install gradio
```

- Initialize required variables:

```python
text_index = None
intermediate_sentences = None            # Relevant sentences
previous_questions_with_context = []     # List of previous questions with context
previous_questions_without_context = []  # List of previous questions without context
```

- Process the given text to get a relevant context: Process the input text and retrieve relevant context.

```python
# Function to process the text
def process_text(text):
    global intermediate_sentences
    if not text or not text.strip():
        return "Please enter some text."
    reset_global_variables()  # Start from a clean state so old embeddings are not indexed again
    cleaned_text = clean_text(text)
    check_token_size = is_text_too_long(cleaned_text)
    print(check_token_size)
    print("Texts are split into chunks")
    chunks = split_text_to_chunk(cleaned_text)
    embeddings_chunks = embed_text(chunks)
    text_index = generate_embeddings(embeddings_chunks)
    searchquery = searchquery_keyword(text)
    distances, indices = search_query(searchquery, text_index, k=5)
    intermediate_sentences = get_similar_sentences(chunks, indices)
    return "Text processed. You can now enter your question."
```

- Format the UI Interface:

```python
# Function to add the formatting to the UI interface
def format_history(history):
    # Shared style for the question and answer bubbles
    bubble_style = ("display: inline-block; background-color: #000000; border: 1px solid white; "
                    "border-radius: 15px; padding: 10px; margin: 5px;")
    formatted_history = "".join([
        f"<div style='text-align: right;'><div style='{bubble_style}'><strong>Q:</strong> {q}</div></div>"
        f"<div style='text-align: left;'><div style='{bubble_style}'><strong>A:</strong> {a}</div></div>"
        for q, a in history])
    return formatted_history
```
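Because questions and answers are interpolated directly into HTML, a character such as `<` in a question or a model answer could be interpreted as markup. One possible hardening, shown here as an optional sketch rather than part of the original implementation, is to escape the text with the standard library's `html.escape` before formatting it. The helper `render_bubble` is hypothetical.

```python
import html

def render_bubble(label, text):
    """Format one chat bubble, escaping characters that have meaning in HTML."""
    safe_text = html.escape(text)
    return f"<div><strong>{label}:</strong> {safe_text}</div>"

print(render_bubble("Q", "Is 3 < 5 & 5 > 3?"))
# <div><strong>Q:</strong> Is 3 &lt; 5 &amp; 5 &gt; 3?</div>
```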

- Process the question given a specific context: process the user’s question when a specific context is provided.

```python
# Function to process the question given a specific context
intermediate_sentences = None         # Relevant sentences
previous_questions_with_context = []  # List of previous questions with context

def process_question(user_quest):
    global intermediate_sentences
    global previous_questions_with_context

    if user_quest:
        if intermediate_sentences is None:
            # No text has been processed yet, so there is no context to answer from
            return "Please submit a text first.", format_history(previous_questions_with_context), user_quest

        ans = get_answer_given_context(user_quest, intermediate_sentences)
        previous_questions_with_context.append((user_quest, ans))  # Keep track of previously asked questions with context
        formatted_history = format_history(previous_questions_with_context)

        return ans, formatted_history, ""

    return "Please enter a question.", format_history(previous_questions_with_context), user_quest
```

- Process the question if no context is given: Handles cases where the user asks a question without any specific context.

```python
# Function to process the question without a context
previous_questions_without_context = []  # List of previous questions without context

def process_question_without_context(user_quest):
    global previous_questions_without_context

    if user_quest:
        ans = get_answer_without_context(user_quest)
        previous_questions_without_context.append((user_quest, ans))  # Keep track of previously asked questions without context
        formatted_history = format_history(previous_questions_without_context)

        return ans, formatted_history, ""

    return "Please enter a question.", format_history(previous_questions_without_context), user_quest
```

- Implement the functionality of the Clear button: Clears the text and resets the interface state.

```python
# Function to clear a text box in the UI
def clear_text():
    return ""

# Function to clear the submitted text and reset the context state
def clear_text_with_context():
    global intermediate_sentences
    global previous_questions_with_context

    intermediate_sentences = None
    previous_questions_with_context = []
    reset_global_variables()
    return "", "Cleared. You can enter new text."

# Function to clear the question history for the chat with context
def clear_questions_with_context():
    global previous_questions_with_context
    previous_questions_with_context = []
    return "", ""

# Function to clear the question history for the chat without context
def clear_questions_without_context():
    global previous_questions_without_context
    previous_questions_without_context = []
    return "", ""
```

- Launch Gradio: Launch the Gradio interface, allowing users to interact with our chatbot.

```python
import gradio as gr

with gr.Blocks() as demo:
    gr.Markdown("# Text and Question Processing Interface")

    # Tab for Chat with Text Context
    with gr.Tab("Chat with Text Context"):
        gr.Markdown("## Enter the text to process it, then ask your question")

        text_input = gr.Textbox(lines=5, placeholder="Enter the text here...", label="Text")

        with gr.Row():
            submit_text_button = gr.Button("Submit Text")
            clear_text_with_context_button = gr.Button("Clear Text")

        text_status = gr.Textbox(label="Status", interactive=False)
        answer_with_context = gr.HTML(label="Chat History")
        user_question_with_context = gr.Textbox(lines=1, placeholder="What is your question?", label="User Question")

        with gr.Row():
            clear_question_with_context_button = gr.Button("Clear Question")

        # Link buttons and functions for chat with text context
        submit_text_button.click(process_text, inputs=[text_input], outputs=text_status)
        clear_text_with_context_button.click(clear_text_with_context, outputs=[text_input, text_status])
        user_question_with_context.submit(
            process_question,
            inputs=user_question_with_context,
            outputs=[answer_with_context, answer_with_context, user_question_with_context]
        )
        clear_question_with_context_button.click(
            clear_questions_with_context,
            outputs=[user_question_with_context, answer_with_context]
        )

    # Tab for Chat without Text Context
    with gr.Tab("Chat without Text Context"):
        gr.Markdown("## Directly ask your question without entering any text context")

        answer_without_context = gr.HTML(label="Chat History")
        user_question_without_context = gr.Textbox(lines=1, placeholder="What is your question?", label="User Question")

        with gr.Row():
            clear_question_without_context_button = gr.Button("Clear Question")

        # Link buttons and functions for chat without text context
        user_question_without_context.submit(
            process_question_without_context,
            inputs=user_question_without_context,
            outputs=[answer_without_context, answer_without_context, user_question_without_context]
        )
        clear_question_without_context_button.click(
            clear_questions_without_context,
            outputs=[user_question_without_context, answer_without_context]
        )

if __name__ == "__main__":
    demo.launch(share=True)  # Launch the Gradio interface and enable sharing
```

Conclusion

We’ve just walked through creating a chatbot that answers from your own data rather than from general knowledge alone. By combining Vector Databases, RAG, and Gradio, you can now build an AI assistant that gives precise, contextual responses instead of generic answers. Think of it as giving your AI a personalized knowledge base to work with!

Ready to revolutionize your customer service or streamline your data operations? The best part is, you don’t need to be an AI expert to get started. With these tools in your arsenal, you’re well-equipped to create intelligent chatbots that can transform how you interact with your data.

What will you build with your new AI companion? The possibilities are endless!

About the Author

Karthika Rajan Nair completed a Data Science internship with the Machine Learning team at Founding Minds, where she collaborated closely with the team and authored this blog as a result of her work. With a strong foundation in Python, machine learning frameworks, and cloud technologies, Karthika is passionate about advancing her skills to drive innovation and address complex data challenges. She is pursuing a Master’s degree in Data Science at the University at Buffalo, New York.